Augment Code: Context Is the New Compiler

This post is part of our ERC 2025 Recap series. Read the full recap post here.

At the 2025 Enterprise Ready Conference, the lights dimmed, and a terminal window filled the main screen. Chris Kelly from Augment Code took the stage—not with slides, but with a live CLI session.

His demo stood out not for flashy visuals, but for a clear argument about what’s been missing from the current wave of AI-assisted coding tools: context.

Why AI Coding Still Feels Junior

Chris began with a question many engineers in the audience had likely asked themselves: why has AI-assisted programming been harder to adopt than other shifts in our field? We eagerly embraced new languages, frameworks, and paradigms. Yet even as AI assistants grow more powerful, developers often find them brittle: good at syntax, weak at understanding intent.

A seasoned engineer doesn’t just write code; they recall patterns, reference internal libraries, and respect constraints learned over years of debugging. That hard-won context is what separates senior engineering from simple code generation. When we ask AI to “just write it,” we’re asking it to act without that grounding.

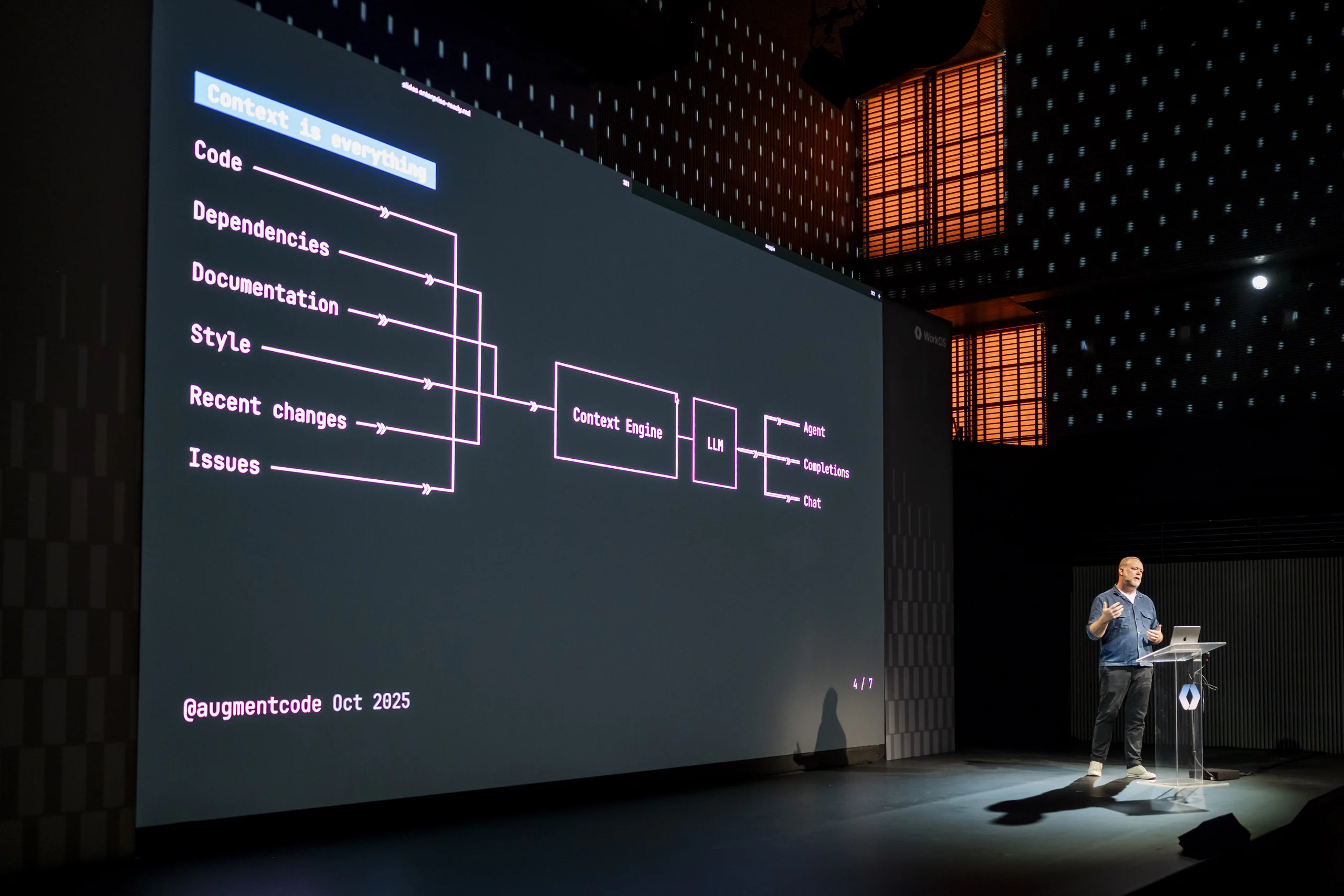

Augment’s Answer: Context Engines

That gap is exactly what Augment Code set out to close. Chris introduced Augment’s “context engine,” a system that indexes your codebase semantically rather than by tokens or grep-style text matches. The engine maps relationships and patterns across a project and uses vector search to find code that is related by meaning, not just by matching strings.

When Chris kicked off a new feature in Augment’s CLI, the system automatically enriched his prompt with relevant details from the existing code. Instead of starting from scratch, Augment’s agent pulled in established patterns, libraries, and even internal Git utilities—choosing reuse over reinvention.
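The talk didn’t go into the context engine’s internals, so what follows is only a toy illustration of the general retrieve-then-enrich idea, not Augment’s implementation. It assumes a tiny in-memory index and uses bag-of-words vectors with cosine similarity as a stand-in for learned embeddings, so it matches shared words rather than true meaning. Every name in it (`ContextIndex`, `enhance_prompt`, and the indexed snippets) is hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. Splitting on non-letters turns
    # current_branch into ["current", "branch"]. Real systems use learned vectors.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors; 0.0 if either is empty.
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ContextIndex:
    """Hypothetical in-memory index of (symbol name, source snippet) pairs."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str, Counter]] = []

    def add(self, name: str, source: str) -> None:
        # Index the symbol name together with its source text.
        self.entries.append((name, source, embed(name + " " + source)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        # Rank every entry by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)
        return [(name, source) for name, source, _ in ranked[:k]]


def enhance_prompt(request: str, index: ContextIndex, k: int = 2) -> str:
    # Prepend the most relevant existing code to the user's short request.
    hits = index.search(request, k)
    context = "\n\n".join(f"# {name}\n{source}" for name, source in hits)
    return f"Relevant existing code:\n{context}\n\nTask: {request}"


if __name__ == "__main__":
    index = ContextIndex()
    index.add("gitutils.current_branch",
              "def current_branch(repo): return the name of the checked out git branch")
    index.add("http_client.get_json",
              "def get_json(url): fetch a URL and decode the JSON body")
    index.add("log.setup_logger",
              "def setup_logger(level): configure the root logger")
    print(enhance_prompt("show the current git branch in the status bar", index, k=1))
```

With these three snippets, the Git helper ranks first for a status-bar request, so the enriched prompt points the model at existing code to reuse. A production engine would also weigh structure (imports, call graphs) and filter stopwords like “the”, which this sketch deliberately skips.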

The result is an AI that behaves more like a senior teammate: not just producing valid code, but producing code that fits.

The Live Demo

Chris’s terminal session centered on a seemingly simple task: customizing a status bar to include the current Git branch. He showed how Augment’s “prompt enhancer” turned a short request into a context-rich query that automatically referenced the relevant modules and dependencies.

While the agent worked, Chris contrasted Augment’s approach with that of other AI tools. In a test with another assistant, the same feature was built by shelling out to git in a separate process: functional, but disconnected from the Git code the project already had.

Augment, by contrast, detected that a reusable Git library already existed within the company’s codebase and built on top of it.
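The talk didn’t show the generated code, so here is a hypothetical Python sketch of the contrast. `branch_via_subprocess` spawns `git rev-parse --abbrev-ref HEAD` in a new process, the approach attributed to the other assistant. `current_branch` is an invented stand-in for an existing in-house Git helper; it reads `.git/HEAD` directly, which assumes a standard checkout where `.git` is a directory (not a worktree or submodule). The status bar simply reuses whichever helper it is given.

```python
import subprocess
from pathlib import Path
from typing import Callable, Optional


def branch_via_subprocess(repo: Path) -> str:
    # Approach attributed to the other assistant: spawn a separate git process.
    # Requires git on PATH; returns "HEAD" when the checkout is detached.
    result = subprocess.run(
        ["git", "rev-parse", "--abbrev-ref", "HEAD"],
        cwd=repo, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def current_branch(repo: Path) -> Optional[str]:
    # Hypothetical stand-in for a reusable in-house Git helper.
    # Assumes .git is a directory containing a HEAD file.
    head = (repo / ".git" / "HEAD").read_text().strip()
    prefix = "ref: refs/heads/"
    # A detached HEAD holds a commit hash instead of a ref; report None.
    return head[len(prefix):] if head.startswith(prefix) else None


def status_bar(
    repo: Path,
    branch_fn: Callable[[Path], Optional[str]] = current_branch,
) -> str:
    # Build the status segment on top of the existing helper rather than
    # adding new Git plumbing here.
    branch = branch_fn(repo)
    return f"[{branch or 'detached'}]"
```

On a checked-out branch, passing `branch_fn=branch_via_subprocess` should yield the same label, at the cost of a process spawn on every refresh. The point the talk made is less about performance than about reuse: the status bar inherits whatever edge cases the shared helper already handles.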

“Good code,” he reminded the audience, “is often no new code at all.”

Beyond Code Generation

Augment Code isn’t trying to be another autocomplete tool—it’s trying to encode judgment. Its vectorized index acts as an institutional memory for large teams, surfacing prior art and avoiding duplication. The promise is subtle but powerful: AI that learns your organization’s idioms, your team’s patterns, and your project’s scars.

As Chris wrapped up, what set Augment’s approach apart was clear. The goal wasn’t just getting code written faster; it was making AI a genuine participant in the software development lifecycle, one that builds on the code a team already has.

Try It Yourself

Augment Code offers a CLI, as well as extensions for VS Code and JetBrains IDEs. For teams maintaining large or regulated codebases, its ability to blend retrieval, reasoning, and reuse makes it an intriguing evolution in AI-assisted development.

At ERC 2025, Augment Code reminded the audience that the next frontier in developer tools isn’t generation—it’s understanding. Context, not completion, is what makes code enterprise-ready.
