Best practices for CLI authentication: A technical guide

Learn how to securely authenticate users accessing your service through a command-line tool, enabling safe, scriptable workflows across terminals, machines, and Docker containers.


Your company provides a service, and your customers want to access it via a command-line tool so they can freely script and automate their desired workflows.

How do you securely authenticate a user running commands across multiple terminals, machines, and Docker containers?

The right solution depends heavily on context—what's sufficient for a developer's workflow could be a critical security gap in an enterprise setting.

This guide explores common CLI authentication patterns used by tools like GitHub CLI and AWS CLI, focusing on how to:

- Securely obtain credentials (e.g., access tokens) from an API or Authorization server

- Manage and store these credentials for seamless reuse across requests

We'll also cover best practices for avoiding common vulnerabilities and highlight strategies for balancing security and usability across containerized environments, CI/CD pipelines, and local development setups.

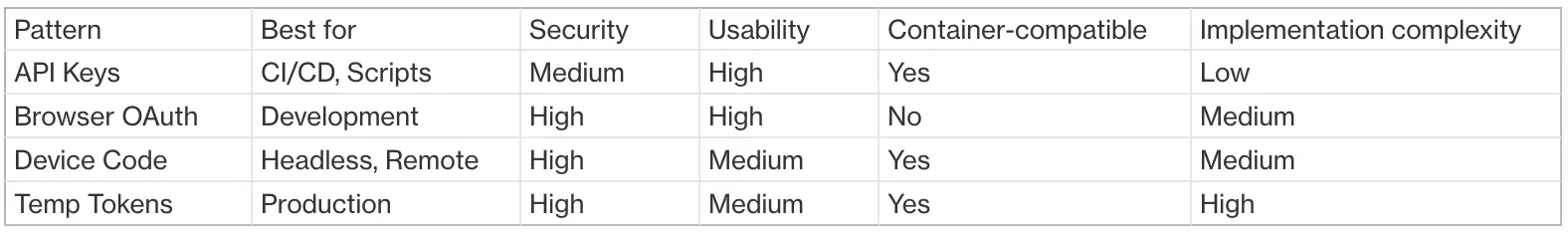

Common CLI authentication patterns

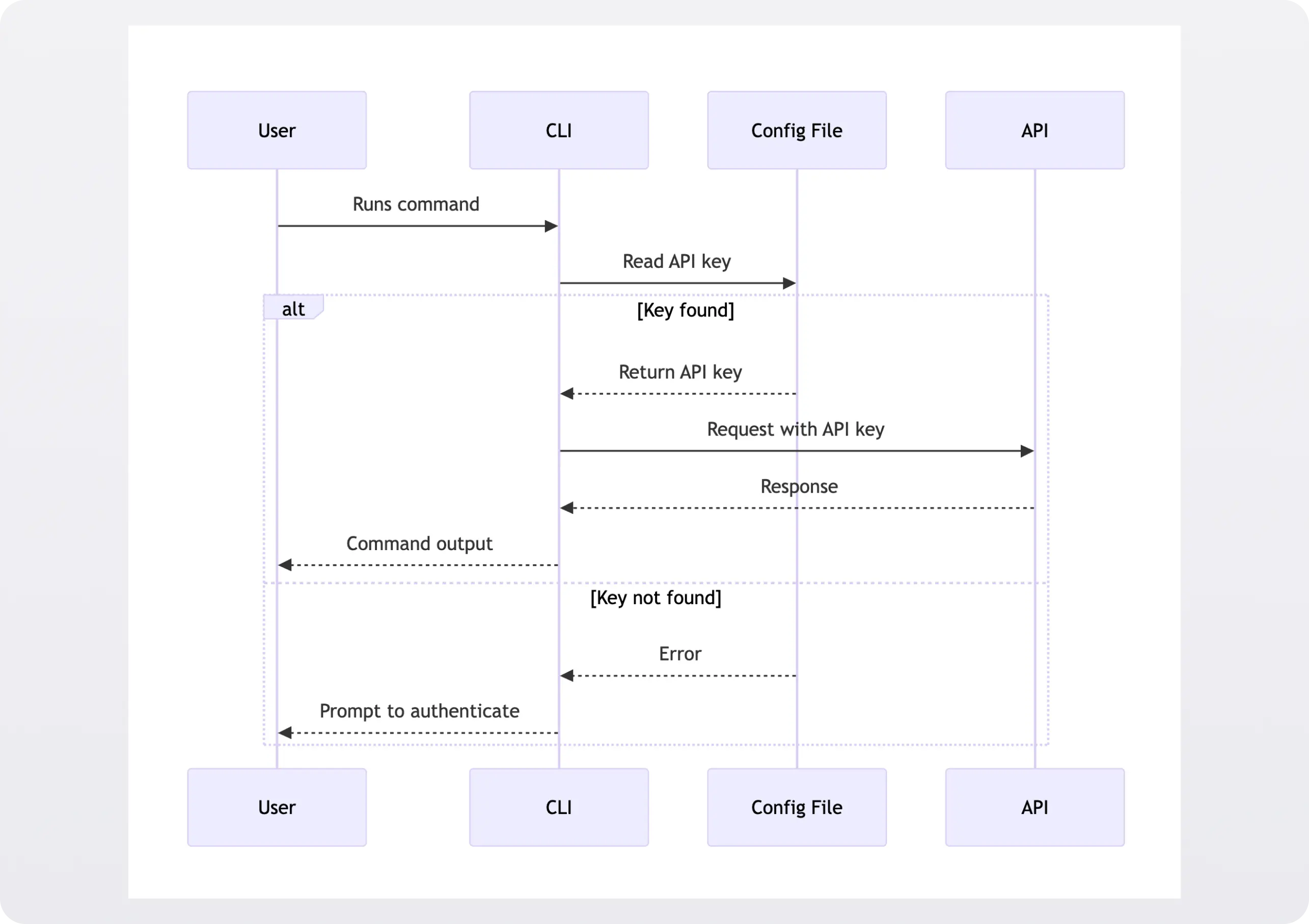

1. API keys and credentials files

The most straightforward approach is having users hardcode an API key or credentials in a configuration file.

Real-world examples:

- Stripe's CLI uses API keys stored in ~/.stripe/config.toml

- AWS CLI uses a credentials file located at ~/.aws/credentials, which looks like this:

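The command-line tool reads the secret from the local configuration file at runtime and presents it as an authentication token, often as a bearer token in the HTTP Authorization header of requests to the CLI's backend API. (The AWS CLI is an exception: it uses its keys to sign each request rather than sending the secret itself.)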
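Here is a minimal sketch of that runtime lookup. It assumes a hypothetical CLI that keeps one api_key per profile in ~/.mycli/credentials. The file uses an INI-style layout with one section per profile, similar to the AWS credentials file: a [default] header followed by key = value lines. The parser handles only that simple layout. parseCredentials, authHeaders, and loadAuthHeaders are illustrative names.

```typescript
import { readFileSync } from "node:fs";
import { homedir } from "node:os";
import { join } from "node:path";

// Parses a simple INI-style file: "[profile]" headers followed by "key = value" lines.
// Blank lines and lines starting with # or ; are ignored. Not a full INI parser.
function parseCredentials(text: string): Map<string, Map<string, string>> {
  const profiles = new Map<string, Map<string, string>>();
  let current: Map<string, string> | undefined;
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#") || line.startsWith(";")) continue;
    const header = /^\[(.+)\]$/.exec(line);
    if (header) {
      current = new Map<string, string>();
      profiles.set(header[1].trim(), current);
      continue;
    }
    const eq = line.indexOf("=");
    if (eq > 0 && current) {
      current.set(line.slice(0, eq).trim(), line.slice(eq + 1).trim());
    }
  }
  return profiles;
}

// Turns the profile's api_key into the header sent to the backend API.
function authHeaders(credentialsText: string, profile = "default"): Record<string, string> {
  const apiKey = parseCredentials(credentialsText).get(profile)?.get("api_key");
  if (!apiKey) {
    throw new Error(`No api_key found for profile "${profile}"`);
  }
  return { Authorization: `Bearer ${apiKey}` };
}

// Hypothetical location for this example: ~/.mycli/credentials
function loadAuthHeaders(profile = "default"): Record<string, string> {
  const path = join(homedir(), ".mycli", "credentials");
  return authHeaders(readFileSync(path, "utf8"), profile);
}
```

Calling loadAuthHeaders("staging") returns an object you can pass straight into your HTTP client's headers. Note that the key still lives on disk in plaintext.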

The same thing that makes this approach appealing from a usability perspective—the token is just sitting in a flat file in plaintext—makes it problematic from a security perspective.

Companies use this pattern for individual developer machines in practice, but it's unsuitable for team environments without additional security measures, such as encrypting secret-containing files.

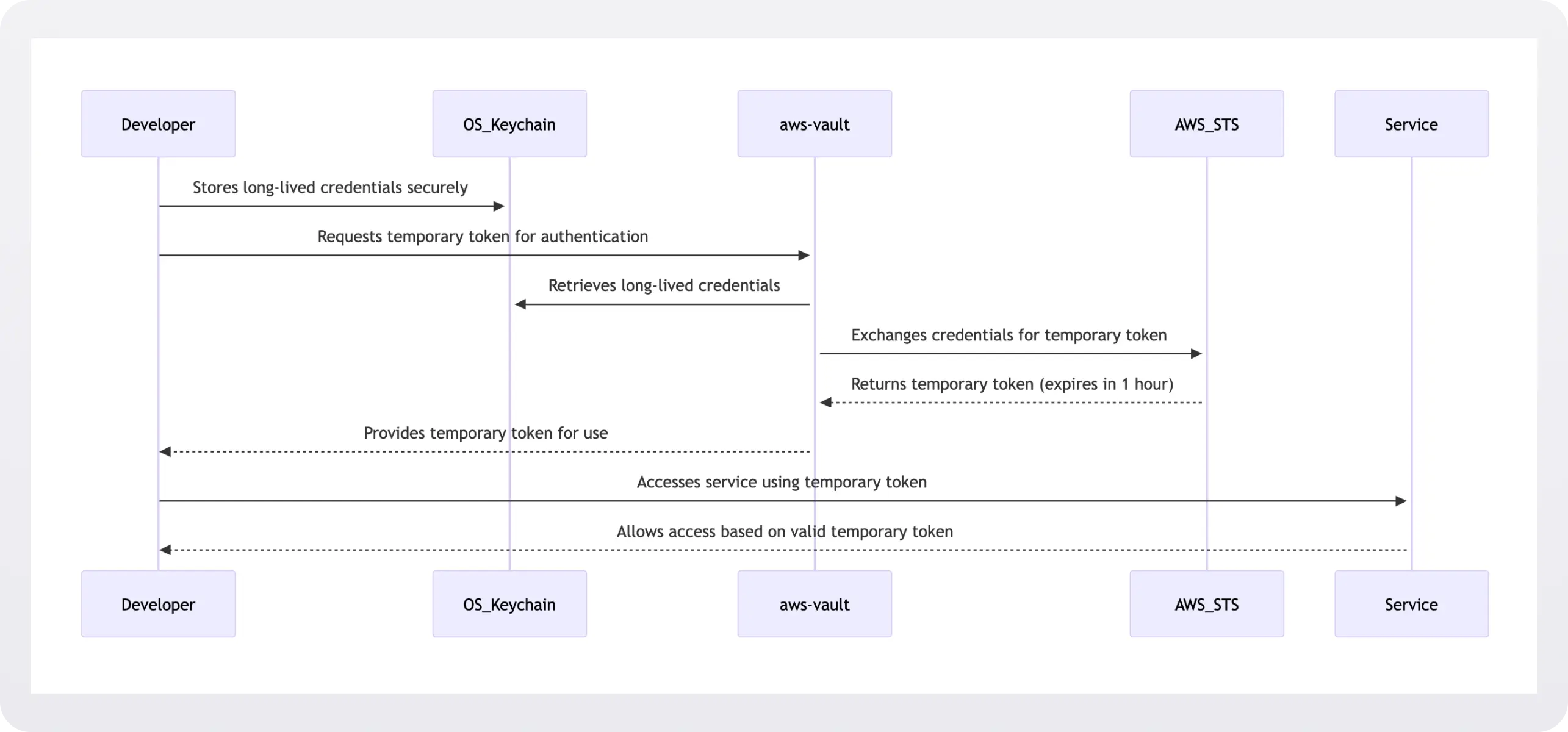

Enhancing security with temporary tokens

It's best to exchange long-lived security credentials at runtime for temporary ones.

AWS's Security Token Service (STS) illustrates this pattern well: it exchanges long-lived credentials for temporary credentials that expire after a configurable duration (one hour by default for AssumeRole).

Tools like aws-vault demonstrate this approach by generating and managing temporary credentials on the user's behalf. For your own service, consider offering a similar exchange for temporary tokens, tailored to your specific security requirements.
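The runtime exchange can be sketched as a small cache. It trades a long-lived secret for a temporary token and exchanges again shortly before that token expires. Several pieces are assumptions: the ExchangeFn signature, the TemporaryToken shape, and the 60-second refresh margin. In practice, exchange would call your backend's token endpoint (or an STS-style service).

```typescript
// Shape of the short-lived credential returned by your token-exchange endpoint (hypothetical).
interface TemporaryToken {
  accessToken: string;
  expiresAt: number; // epoch milliseconds
}

// Trades a long-lived secret for a temporary token, e.g. via an STS-style endpoint.
type ExchangeFn = (longLivedSecret: string) => Promise<TemporaryToken>;

class TemporaryTokenCache {
  private current: TemporaryToken | null = null;
  private readonly longLivedSecret: string;
  private readonly exchange: ExchangeFn;
  private readonly refreshSkewMs: number;
  private readonly now: () => number;

  constructor(
    longLivedSecret: string,
    exchange: ExchangeFn,
    refreshSkewMs = 60_000,
    now: () => number = Date.now,
  ) {
    this.longLivedSecret = longLivedSecret;
    this.exchange = exchange;
    this.refreshSkewMs = refreshSkewMs;
    this.now = now;
  }

  // Returns the cached temporary token, exchanging again once it is within
  // refreshSkewMs of expiry so requests never go out with a token about to lapse.
  async getToken(): Promise<string> {
    if (this.current === null || this.now() >= this.current.expiresAt - this.refreshSkewMs) {
      this.current = await this.exchange(this.longLivedSecret);
    }
    return this.current.accessToken;
  }
}
```

Only the exchange call ever sees the long-lived secret. Every other request carries a token that stops working on its own if it leaks.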

2. Browser-based OAuth flow

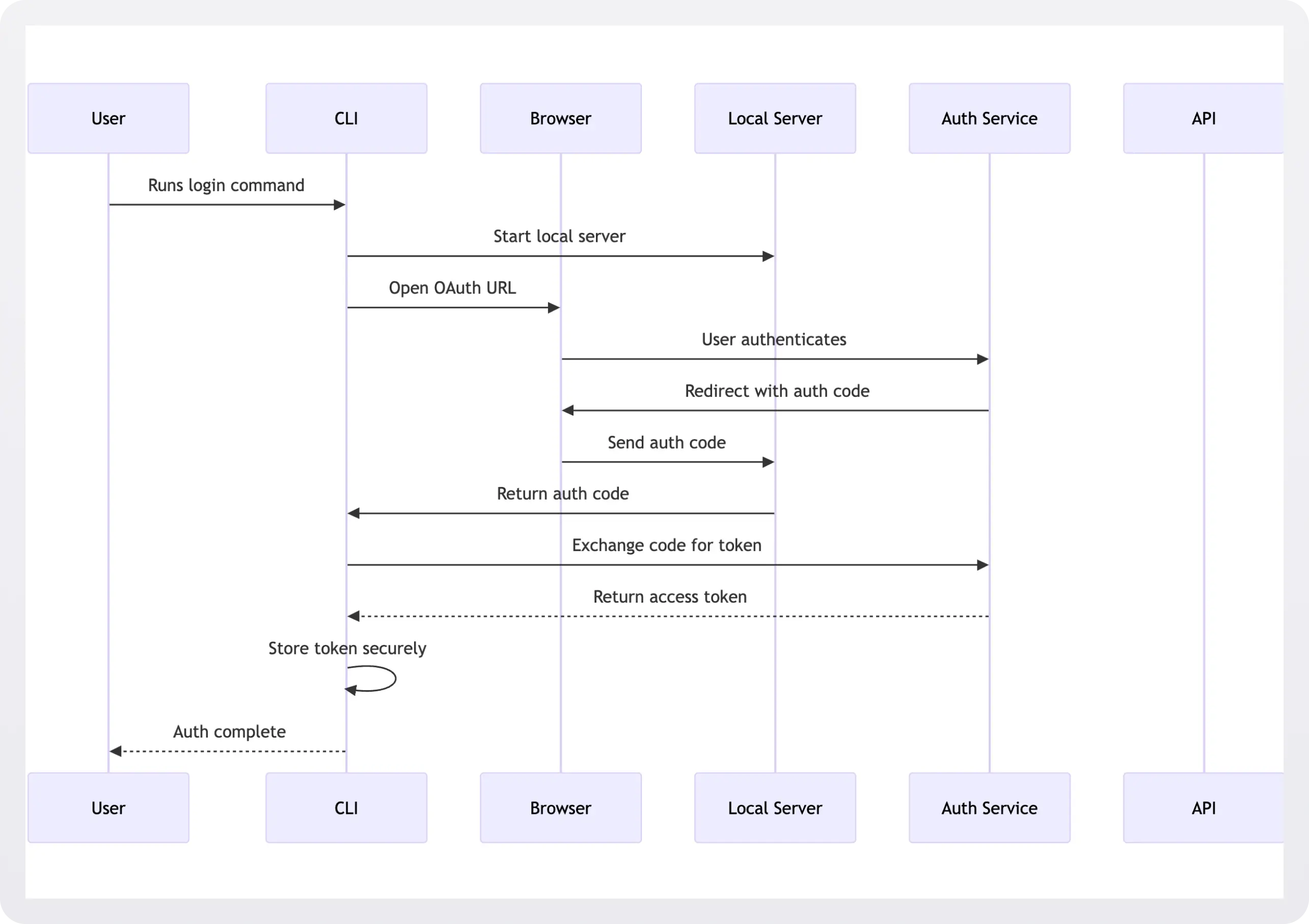

CLIs using OAuth typically launch a browser for authentication, capture the authorization code via a local callback server, exchange it for an access token, and save the token to the filesystem for future requests.

After the user signs in, the authorization server redirects the browser back to the local server run by the command-line tool. That server captures the authorization code and exchanges it for an access token, which the CLI writes to the filesystem for use in subsequent requests. The developer is now logged in via the CLI.

Sample browser-based auth implementation

This example code spins up a server that binds to the developer's localhost on port 8000 to listen for the code returned by the OAuth service specified by authUrl:
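Here is a minimal sketch of such a callback server using Node's built-in http module. It differs from the fixed port 8000 described above in one way: it asks the OS for a free port (port 0) and binds only to 127.0.0.1. This assumes your OAuth provider accepts loopback redirect URIs with a variable port. State verification is left out here and covered in the CSRF discussion below. startCallbackServer and extractAuthCode are illustrative names.

```typescript
import { createServer } from "node:http";
import type { AddressInfo } from "node:net";

// Pulls the authorization code (or the provider's error) out of a callback request URL.
function extractAuthCode(requestUrl: string): { code?: string; error?: string } {
  const url = new URL(requestUrl, "http://127.0.0.1");
  return {
    code: url.searchParams.get("code") ?? undefined,
    error: url.searchParams.get("error") ?? undefined,
  };
}

// Starts a one-shot server on the loopback interface and resolves with the redirect URI
// to send to the provider, plus a promise for the authorization code.
function startCallbackServer(
  callbackPath = "/callback",
): Promise<{ redirectUri: string; authCode: Promise<string> }> {
  return new Promise((resolveStart, rejectStart) => {
    let resolveCode: (code: string) => void = () => {};
    let rejectCode: (err: Error) => void = () => {};
    const authCode = new Promise<string>((res, rej) => {
      resolveCode = res;
      rejectCode = rej;
    });

    const server = createServer((req, res) => {
      // Ignore anything that isn't the callback (e.g. the browser's favicon request).
      if (new URL(req.url ?? "/", "http://127.0.0.1").pathname !== callbackPath) {
        res.writeHead(404).end();
        return;
      }
      const { code, error } = extractAuthCode(req.url ?? "/");
      res.writeHead(200, { "Content-Type": "text/plain" });
      res.end(code ? "Login complete. You can close this tab." : "Login failed.");
      server.close(); // one callback is all we need
      if (code) resolveCode(code);
      else rejectCode(new Error(`Authorization failed: ${error ?? "no code returned"}`));
    });

    server.on("error", rejectStart);
    // Port 0 lets the OS pick a free port; 127.0.0.1 keeps the server off the network.
    server.listen(0, "127.0.0.1", () => {
      const { port } = server.address() as AddressInfo;
      resolveStart({ redirectUri: `http://127.0.0.1:${port}${callbackPath}`, authCode });
    });
  });
}
```

A CLI would call startCallbackServer(), include redirectUri in the authorization URL it opens in the browser, and then await authCode.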

When the OAuth provider successfully authenticates the user, it redirects the browser to the user's localhost and configured port, with an authorization code in the query string.

The endpoint on the developer's machine captures this code from the query string parameters and exchanges it with the OAuth provider's token endpoint for a secure access token over HTTPS, which it then stores locally.
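The exchange step is a standard OAuth 2.0 authorization-code token request (RFC 6749, section 4.1.3): a form-encoded POST of grant_type, code, redirect_uri, and client_id. This sketch assumes a public client with no client secret, which is the usual setup for a CLI. tokenUrl and clientId are placeholders for your provider's values, and the HTTPS check refuses to send the code over any other scheme.

```typescript
// Fields of a standard OAuth 2.0 token response (RFC 6749, section 5.1).
interface TokenResponse {
  access_token: string;
  token_type: string;
  expires_in?: number;
  refresh_token?: string;
}

// Form body for the authorization-code grant. redirect_uri must match the value
// sent in the original authorization request.
function buildTokenRequestBody(code: string, redirectUri: string, clientId: string): URLSearchParams {
  return new URLSearchParams({
    grant_type: "authorization_code",
    code,
    redirect_uri: redirectUri,
    client_id: clientId,
  });
}

async function exchangeCodeForToken(
  tokenUrl: string,
  code: string,
  redirectUri: string,
  clientId: string,
): Promise<TokenResponse> {
  if (!tokenUrl.startsWith("https://")) {
    throw new Error("Refusing to send an authorization code over plain HTTP");
  }
  const response = await fetch(tokenUrl, {
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded", Accept: "application/json" },
    body: buildTokenRequestBody(code, redirectUri, clientId),
  });
  const body = (await response.json()) as Partial<TokenResponse> & { error?: string };
  if (!response.ok || !body.access_token) {
    throw new Error(`Token exchange failed: ${body.error ?? response.status}`);
  }
  return body as TokenResponse;
}
```

Providers that require PKCE for public clients also expect a code_verifier parameter in this request.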

This pattern provides excellent security through standard OAuth flows and works well with modern authentication features like SSO and MFA.

However, its browser dependency makes it unsuitable for headless environments and automation, such as running in CI/CD via GitHub Actions.

It's also more complex to implement, and there are several potential security issues to defend against.

Browser-based vulnerabilities to avoid

Port binding issues

When starting a local server for OAuth callbacks, binding to all interfaces (0.0.0.0) exposes your authentication server to other machines on the network.

This could allow attackers to intercept OAuth codes or inject malicious responses. Always bind only to localhost (127.0.0.1) to ensure the callback server is accessible only from the local machine.

Cross-Site Request Forgery (CSRF) attacks

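Without state verification, an attacker could trick the user's browser into delivering an authorization code from the attacker's own OAuth flow to your callback server. The CLI would then be logged in to the attacker's account, and anything the user does or uploads through it would land where the attacker can see it.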

The state parameter acts as a CSRF token, ensuring the callback matches the original request. Generate a secure random state value and verify it in the callback:
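Here is a sketch of state handling with Node's crypto module. Each of the three illustrative functions handles one step:

- generateState produces 32 random bytes encoded as base64url.
- buildAuthorizationUrl attaches the state, along with the standard response_type, client_id, and redirect_uri parameters, to the URL the CLI opens.
- verifyState compares the value echoed back on the callback in constant time.

authUrl, clientId, and redirectUri are placeholders.

```typescript
import { randomBytes, timingSafeEqual } from "node:crypto";

// Unguessable per-login value: 32 random bytes, base64url-encoded (43 characters).
function generateState(): string {
  return randomBytes(32).toString("base64url");
}

// Builds the authorization URL the CLI opens in the browser.
function buildAuthorizationUrl(authUrl: string, clientId: string, redirectUri: string, state: string): string {
  const url = new URL(authUrl);
  url.searchParams.set("response_type", "code");
  url.searchParams.set("client_id", clientId);
  url.searchParams.set("redirect_uri", redirectUri);
  url.searchParams.set("state", state);
  return url.toString();
}

// Compares the state returned on the callback with the one we generated.
// timingSafeEqual needs equal-length inputs, so lengths are checked first.
function verifyState(expected: string, received: string | null): boolean {
  if (received === null) return false;
  const a = Buffer.from(expected);
  const b = Buffer.from(received);
  return a.length === b.length && timingSafeEqual(a, b);
}
```

In the callback handler, reject the request and skip the token exchange whenever verifyState returns false.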

Token storage

Storing tokens in plaintext files makes them vulnerable to malware, other users on the system, or accidental exposure through backups or file sharing.

System keychains provide encrypted storage with OS-level access controls, significantly reducing the risk of token theft.
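When no keychain is available, a common fallback is a token file that only the current user can read. This sketch creates a temporary file with mode 0600 inside a 0700 directory, then atomically renames it into place. The path is up to your CLI; something like ~/.config/mycli/token would be a hypothetical choice. Restricting permissions blocks other users on the machine, but it does not encrypt the token. Malware running as the same user, or a backup, can still read it.

```typescript
import { chmodSync, mkdirSync, renameSync, writeFileSync } from "node:fs";
import { dirname } from "node:path";

// Writes the token so only the current user can read it, replacing any previous token.
function writeTokenFile(path: string, token: string): void {
  mkdirSync(dirname(path), { recursive: true, mode: 0o700 });
  const tmpPath = `${path}.${process.pid}.tmp`;
  // "wx" creates the file exclusively, so we never write into a pre-existing file with looser permissions.
  writeFileSync(tmpPath, token, { mode: 0o600, flag: "wx" });
  chmodSync(tmpPath, 0o600); // normalize the mode regardless of the process umask
  renameSync(tmpPath, path); // atomic replace on POSIX filesystems
}
```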

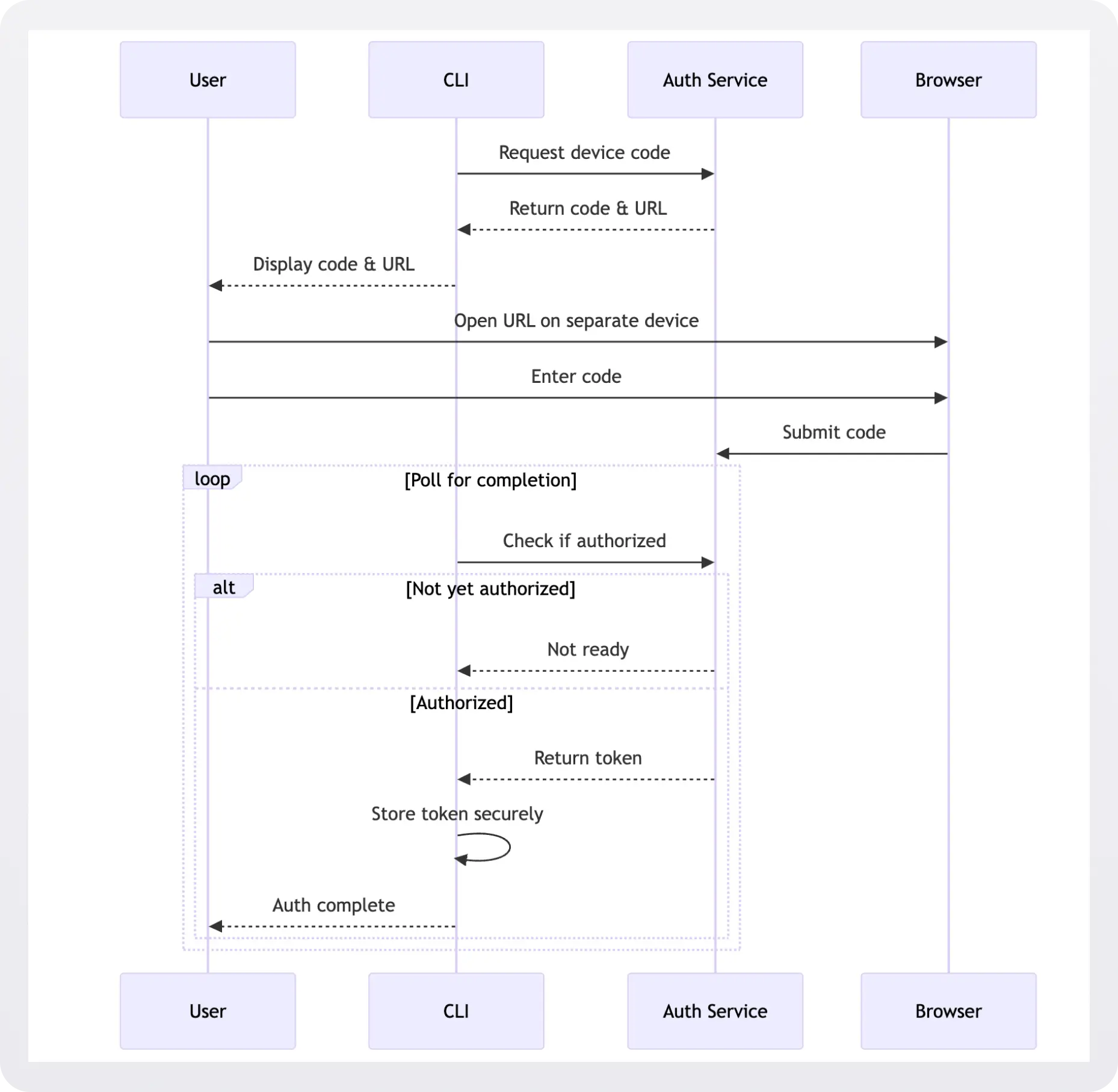

3. Device Code Flow

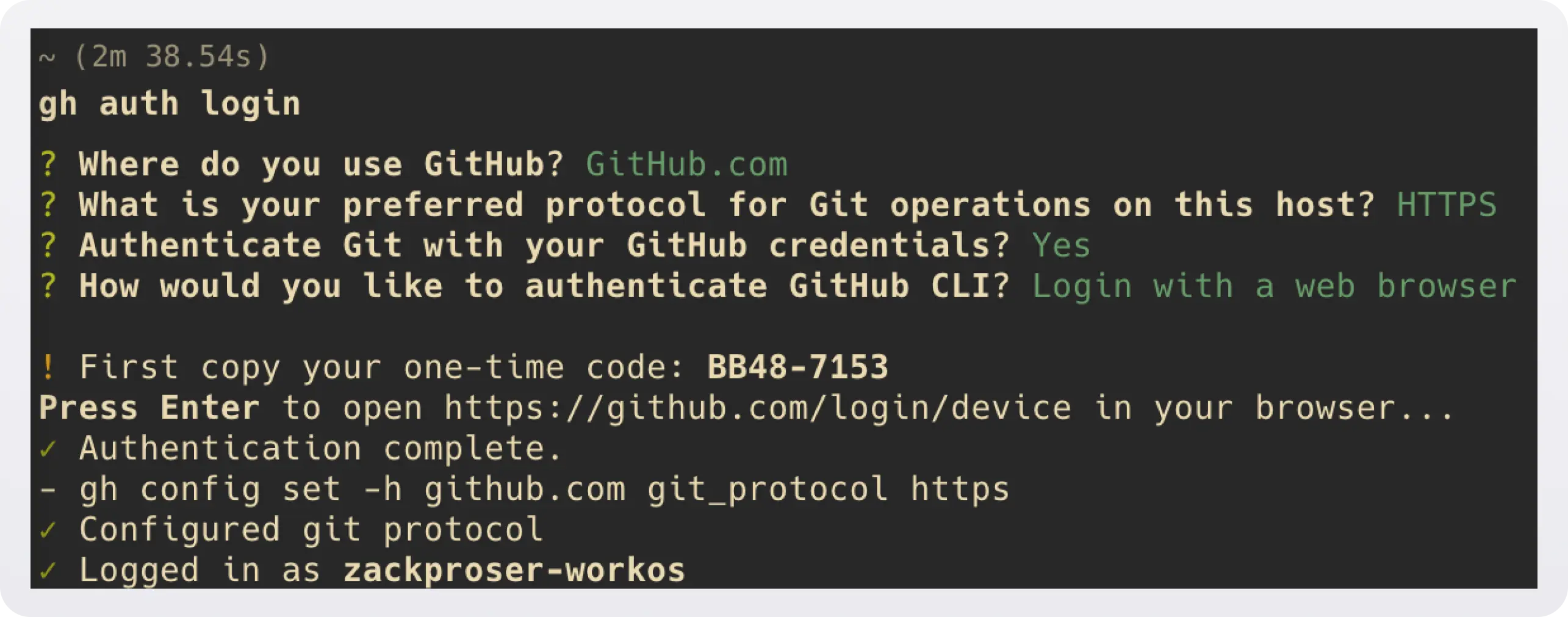

Real-world examples: the official GitHub command line tool, gh, uses this pattern to authenticate users, and the AWS CLI uses the device code flow when a browser is not available.

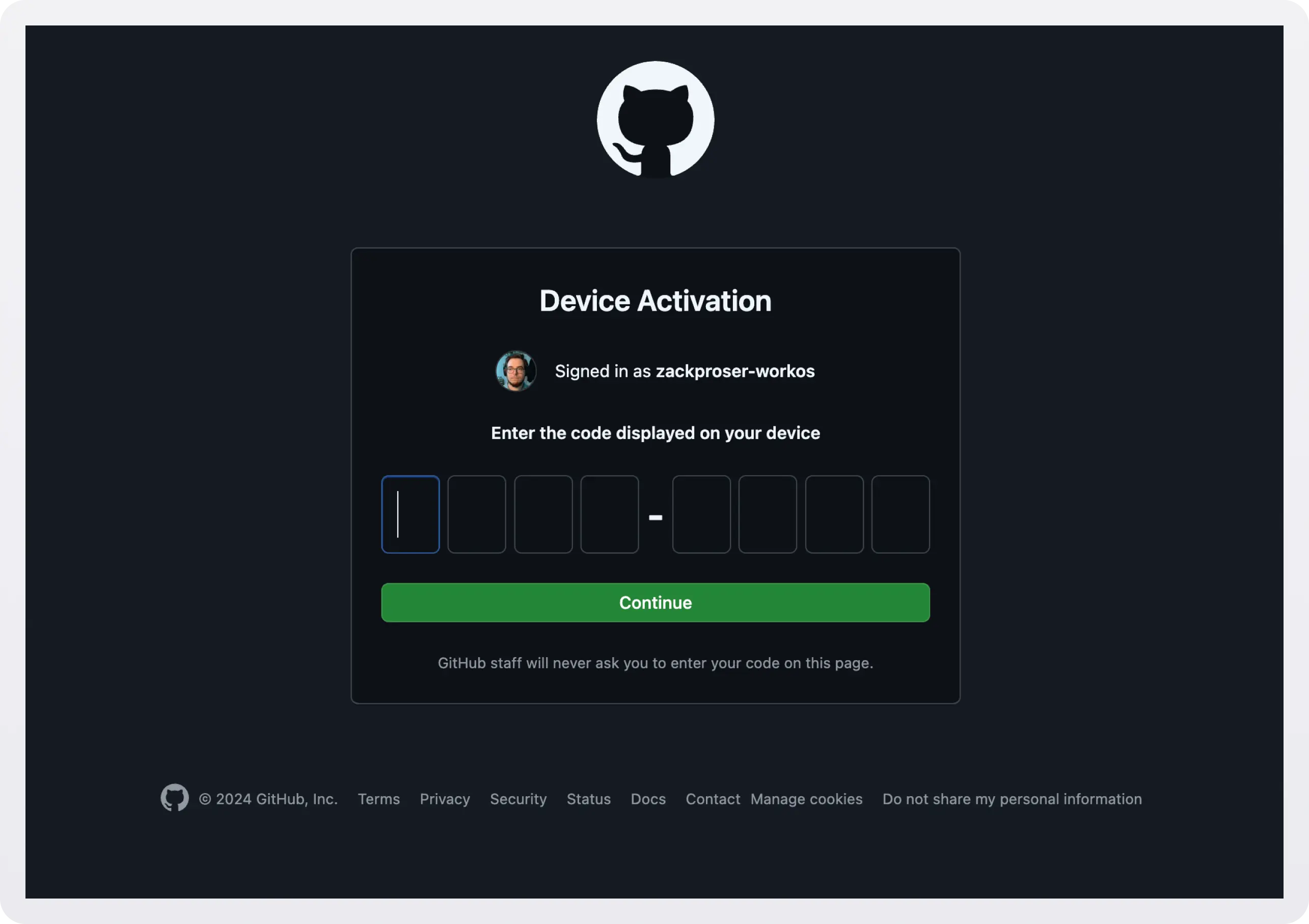

For environments without browsers, the device code flow provides a URL and code that users enter in their browser on another device. First, the user initiates the flow by running gh auth login:
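Under the hood, the first step of the device code flow, as standardized in RFC 8628, is a POST to the provider's device authorization endpoint. The response carries a device_code for the CLI, plus a user_code and verification_uri to show the user. deviceAuthUrl, clientId, and scope are placeholders, and the parsing function name is illustrative.

```typescript
// Fields of a device authorization response (RFC 8628, section 3.2).
interface DeviceAuthorization {
  deviceCode: string;
  userCode: string;
  verificationUri: string;
  expiresIn: number; // seconds until device_code expires
  interval: number; // minimum seconds between polls
}

// Validates the endpoint's JSON. "interval" is optional in the spec; the default is 5 seconds.
function parseDeviceAuthorization(json: Record<string, unknown>): DeviceAuthorization {
  const { device_code, user_code, verification_uri, expires_in, interval } = json;
  if (
    typeof device_code !== "string" ||
    typeof user_code !== "string" ||
    typeof verification_uri !== "string" ||
    typeof expires_in !== "number"
  ) {
    throw new Error("Malformed device authorization response");
  }
  return {
    deviceCode: device_code,
    userCode: user_code,
    verificationUri: verification_uri,
    expiresIn: expires_in,
    interval: typeof interval === "number" ? interval : 5,
  };
}

async function startDeviceFlow(deviceAuthUrl: string, clientId: string, scope: string): Promise<DeviceAuthorization> {
  const response = await fetch(deviceAuthUrl, {
    method: "POST",
    headers: { Accept: "application/json" },
    body: new URLSearchParams({ client_id: clientId, scope }),
  });
  if (!response.ok) throw new Error(`Device authorization failed: HTTP ${response.status}`);
  const auth = parseDeviceAuthorization((await response.json()) as Record<string, unknown>);
  // Tell the user where to go and what to type; the CLI then starts polling.
  console.log(`Open ${auth.verificationUri} and enter the code ${auth.userCode}`);
  return auth;
}
```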

After confirming the Device Activation step, the user must enter the same one-time code that the CLI displayed in the terminal:

When the user enters the correct code, GitHub authenticates their session. Meanwhile, the CLI polls the authorization server to check whether the user has finished authenticating:

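Once the user has authenticated, the authorization server's response includes the user's token, and you can safely extract it and use it for authenticated requests. Check the response body rather than relying on tokenResponse.ok alone. Until the user finishes, the server answers with error codes such as authorization_pending or slow_down, and some providers return these with a successful HTTP status.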
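Here is a sketch of the polling step against an RFC 8628-style token endpoint. The CLI posts the device_code with the grant type urn:ietf:params:oauth:grant-type:device_code and waits at least interval seconds between requests. It keeps polling on authorization_pending and adds 5 seconds to the interval on slow_down. It stops on any other error or when the device code expires. tokenUrl and clientId are placeholders. interpretPollResponse holds the decision logic, so it can be tested without a network.

```typescript
type PollResult =
  | { kind: "token"; accessToken: string }
  | { kind: "wait"; intervalSeconds: number }
  | { kind: "fail"; reason: string };

// Interprets one token-endpoint response body (RFC 8628, section 3.5).
// Looks at the body, not only the HTTP status, because "not yet" arrives as an error code.
function interpretPollResponse(body: Record<string, unknown>, intervalSeconds: number): PollResult {
  const accessToken = body.access_token;
  if (typeof accessToken === "string") {
    return { kind: "token", accessToken };
  }
  const error = body.error;
  switch (error) {
    case "authorization_pending":
      return { kind: "wait", intervalSeconds };
    case "slow_down":
      // The spec asks clients to add 5 seconds to the polling interval.
      return { kind: "wait", intervalSeconds: intervalSeconds + 5 };
    default:
      // access_denied, expired_token, or anything unexpected ends the flow.
      return { kind: "fail", reason: typeof error === "string" ? error : "unknown_error" };
  }
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function pollForToken(
  tokenUrl: string,
  clientId: string,
  deviceCode: string,
  intervalSeconds: number,
  expiresInSeconds: number,
): Promise<string> {
  const deadline = Date.now() + expiresInSeconds * 1000;
  let interval = intervalSeconds;
  while (Date.now() < deadline) {
    await sleep(interval * 1000);
    const tokenResponse = await fetch(tokenUrl, {
      method: "POST",
      headers: { Accept: "application/json" },
      body: new URLSearchParams({
        grant_type: "urn:ietf:params:oauth:grant-type:device_code",
        device_code: deviceCode,
        client_id: clientId,
      }),
    });
    const body = (await tokenResponse.json()) as Record<string, unknown>;
    const result = interpretPollResponse(body, interval);
    if (result.kind === "token") return result.accessToken;
    if (result.kind === "fail") throw new Error(`Device login failed: ${result.reason}`);
    interval = result.intervalSeconds;
  }
  throw new Error("The device code expired before the user finished signing in");
}
```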

The device code flow solves the browser accessibility problem while maintaining OAuth security benefits but introduces more user friction through manual code entry and polling.

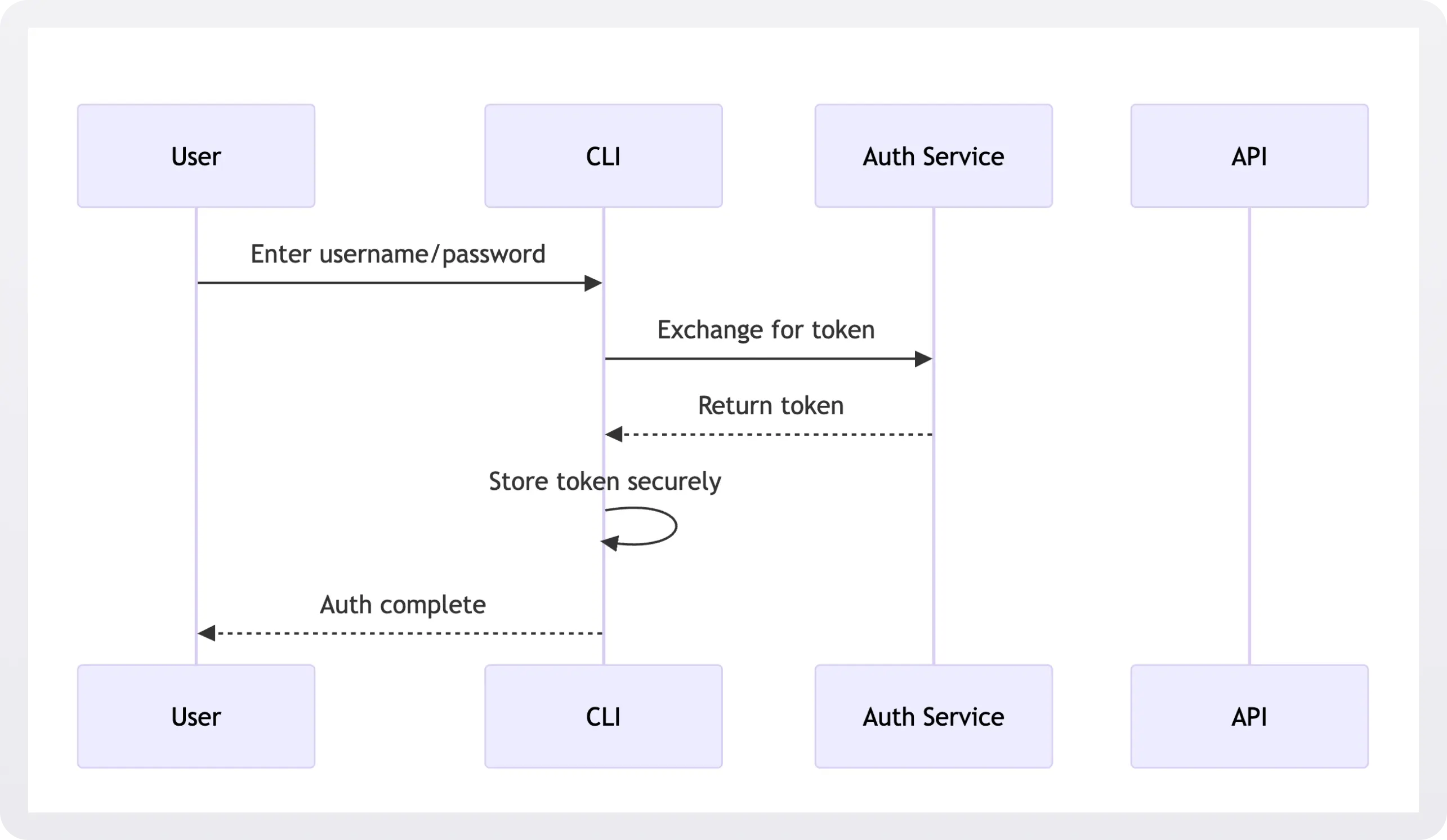

4. Username/Password with Token Exchange

Some CLIs accept traditional credentials and exchange them for tokens, though this pattern is becoming less common due to security concerns.

This pattern is familiar to users and simple to implement, but it requires handling sensitive credentials directly and lacks modern security features. Consider this approach a non-starter for any greenfield development projects.

Token storage and security

Once authenticated, CLIs need to store tokens securely.

1. System keychains

System keychains are built-in secure storage systems provided by operating systems (like Keychain on macOS or Credential Manager on Windows) that encrypt and protect sensitive data, on some platforms with hardware-backed protection. They offer a standardized way to store secrets while letting the OS handle encryption and access control.

System keychains are recommended as the primary storage mechanism because they leverage OS-level security features designed explicitly for credential storage.

They provide encryption at rest and secure memory handling without requiring developers to implement these features.
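Node has no built-in keychain API, so this sketch shells out to secret-tool, the command-line client for libsecret on Linux desktops. macOS Keychain and Windows Credential Manager need their own backends, usually through a native binding library. The service and account attribute names are arbitrary choices for this example. Passing the token on stdin keeps it out of the argument list that shows up in process listings.

```typescript
import { execFileSync } from "node:child_process";

type SecretToolAction = "store" | "lookup" | "clear";

// Builds secret-tool arguments; the "service" and "account" attribute names are this sketch's choice.
function secretToolArgs(action: SecretToolAction, service: string, account: string): string[] {
  const attributes = ["service", service, "account", account];
  return action === "store"
    ? ["store", `--label=${service} (${account})`, ...attributes]
    : [action, ...attributes];
}

// Requires secret-tool and a running Secret Service (such as GNOME Keyring).
// The token is written to secret-tool's stdin, never passed as an argument.
function saveToken(service: string, account: string, token: string): void {
  execFileSync("secret-tool", secretToolArgs("store", service, account), { input: token });
}

// Returns null if the lookup fails for any reason (no entry, no keyring, secret-tool missing).
function loadToken(service: string, account: string): string | null {
  try {
    const out = execFileSync("secret-tool", secretToolArgs("lookup", service, account), { encoding: "utf8" });
    return out.trim() === "" ? null : out.trim();
  } catch {
    return null;
  }
}

function deleteToken(service: string, account: string): void {
  execFileSync("secret-tool", secretToolArgs("clear", service, account));
}
```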

2. Encrypted configuration files

Many CLI tools use encrypted files to store sensitive credentials, typically in the user's home directory. Rather than implementing encryption from scratch, most use established libraries or formats:

Common Approaches:

- GPG encryption (used by the pass password manager)

- AES encryption with a master key derived from system properties

- Password-based encryption, where users provide a master password

- XDG-compliant encrypted credential storage (used by many Linux tools)

Real-world Examples:

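Many popular tools still keep credentials in plaintext files protected only by filesystem permissions, which is the gap encryption is meant to close:

- npm stores per-registry auth tokens in ~/.npmrc

- Gradle reads credentials from ~/.gradle/gradle.properties

- kubectl stores cluster credentials in ~/.kube/config

By contrast, pip can retrieve credentials through the keyring library, which delegates storage to the system keychain.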

This approach allows for portability across environments and machines because encrypted configuration files can be version-controlled, backed up, and restored.

However, the decryption key needs careful management. Decrypted secrets can still leak through memory dumps or leftover temporary files, so you may need explicit steps to decrypt only when needed and securely delete plaintext copies afterward.

This approach often adds a layer of user interaction for decryption but provides flexibility across environments. It can also complicate deployments and increase the likelihood that your users hit confusing errors they need support to resolve.
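Here is a sketch of the password-based approach with Node's crypto module. It stretches the master password into a 256-bit key with scrypt, then encrypts the credentials with AES-256-GCM. Because GCM authenticates the data, a wrong password or a modified file fails decryption instead of returning garbage. The salt | iv | tag | ciphertext layout and the function names are assumptions for this example, not a standard file format.

```typescript
import { createCipheriv, createDecipheriv, randomBytes, scryptSync } from "node:crypto";

// Output layout used by this sketch: salt (16 bytes) | iv (12) | auth tag (16) | ciphertext.
function encryptCredentials(plaintext: string, masterPassword: string): Buffer {
  const salt = randomBytes(16);
  const key = scryptSync(masterPassword, salt, 32); // 256-bit key, Node's default scrypt cost
  const iv = randomBytes(12); // 96-bit nonce, the recommended size for GCM
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  return Buffer.concat([salt, iv, cipher.getAuthTag(), ciphertext]);
}

// Throws if the password is wrong or the file was tampered with.
function decryptCredentials(blob: Buffer, masterPassword: string): string {
  const salt = blob.subarray(0, 16);
  const iv = blob.subarray(16, 28);
  const authTag = blob.subarray(28, 44);
  const ciphertext = blob.subarray(44);
  const key = scryptSync(masterPassword, salt, 32);
  const decipher = createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(authTag);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString("utf8");
}
```

The prompt for the master password is exactly the extra user interaction described above. A CLI would typically decrypt once per session and keep the plaintext only in memory.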

3. Environment variables

Environment variables are simple to implement and easy to rotate, and they are the standard approach for CI/CD systems.

However, they're also visible in process listings, can be accidentally logged, and do not provide encryption at rest. There are some critical rules for using environment variables securely:

Runtime injection

- DO: Inject secrets at runtime through your CI/CD platform's secure mechanisms

- DON'T: Store sensitive values in CI/CD configuration files

- DON'T: Echo or print environment variables in build logs
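Here is a minimal sketch of the runtime side of these rules. The CLI reads the injected secret from the environment and fails with an error that names the variable but not its value. It also masks the secret before anything is logged. requireSecretEnv and redact are illustrative names. Most CI platforms also mask registered secrets in their logs, so treat this as a second line of defense.

```typescript
// Reads a required secret injected at runtime; the error names the variable, never its value.
function requireSecretEnv(name: string, env: NodeJS.ProcessEnv = process.env): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable ${name}`);
  }
  return value;
}

// Replaces every occurrence of the given secrets with *** before a message is logged.
function redact(message: string, secrets: string[]): string {
  return secrets
    .filter((secret) => secret.length > 0)
    .reduce((out, secret) => out.split(secret).join("***"), message);
}
```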

Secrets management solutions

For production workflows, implement a dedicated secrets management solution:

Cloud Provider Solutions

- AWS Secrets Manager

- Google Cloud Secret Manager

- Azure Key Vault

Self-hosted Options

- HashiCorp Vault

- Sealed Secrets for Kubernetes

You can then read your secret out of the secrets manager at runtime while performing your build:
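As one example, a build step could read a secret from HashiCorp Vault's KV version 2 engine over its HTTP API. The request is a GET to /v1/<mount>/data/<path> with the Vault token in the X-Vault-Token header, and the secret's key/value pairs sit under data.data in the JSON response. This sketch makes three assumptions: the mount is Vault's default name secret, the CI platform injects VAULT_ADDR and a short-lived VAULT_TOKEN, and the path and key are supplied by you.

```typescript
// Builds a read request for Vault's KV v2 engine: GET {VAULT_ADDR}/v1/{mount}/data/{path}.
function vaultKvRequest(
  vaultAddr: string,
  mount: string,
  path: string,
  vaultToken: string,
): { url: string; headers: Record<string, string> } {
  const base = vaultAddr.replace(/\/+$/, ""); // tolerate a trailing slash in VAULT_ADDR
  return { url: `${base}/v1/${mount}/data/${path}`, headers: { "X-Vault-Token": vaultToken } };
}

// KV v2 responses nest the secret's key/value pairs under data.data.
function extractVaultSecret(responseBody: unknown, key: string): string {
  if (typeof responseBody !== "object" || responseBody === null) {
    throw new Error("Unexpected Vault response");
  }
  const secret = (responseBody as { data?: { data?: Record<string, unknown> } }).data?.data;
  const value = secret?.[key];
  if (typeof value !== "string") {
    throw new Error(`Key "${key}" not found in secret`);
  }
  return value;
}

async function readSecretFromVault(path: string, key: string, mount = "secret"): Promise<string> {
  const vaultAddr = process.env.VAULT_ADDR;
  const vaultToken = process.env.VAULT_TOKEN;
  if (!vaultAddr || !vaultToken) {
    throw new Error("VAULT_ADDR and VAULT_TOKEN must be set");
  }
  const { url, headers } = vaultKvRequest(vaultAddr, mount, path, vaultToken);
  const response = await fetch(url, { headers });
  if (!response.ok) {
    throw new Error(`Vault read failed: HTTP ${response.status}`);
  }
  return extractVaultSecret(await response.json(), key);
}
```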

Use CI/CD platform security features

Modern CI/CD platforms provide built-in security features for managing sensitive data:

GitHub Actions

- Repository Secrets

- Environment Secrets

- Organization Secrets

GitLab CI

- CI/CD Variables

- Protected Variables

- Group-level Variables

Jenkins

- Credentials Plugin

- Secrets Management Integration

The idea is to store your secrets in a dedicated secret store and then reference them as needed in your workflow files.

For example, reading an API key via GitHub Actions secrets:

Avoid using environment variables for end-user CLI tools because:

- They require manual user management

- System monitoring might expose them

- They lack the security features of modern credential storage systems

CLI authentication in containerized environments

Container environments create specific challenges for CLI authentication. These arise in two common scenarios:

When users run your CLI tool inside a container:

When your CLI needs to authenticate with containerized services:

These scenarios introduce significant constraints on your auth pattern choices:

- System keychains aren't available inside containers

- Browser-based OAuth flows won't work in containerized environments

- Mounted credential files require careful security consideration

- Environment variables need special handling in containers

Best practices for container authentication

Environment variables (Recommended for CI/CD)

When you run this command, Docker creates a container and makes the API_TOKEN value available to processes inside that container (although anyone with access to the Docker daemon can still read it with docker inspect). You pass the token at runtime but never permanently store it in the container image.

This approach is ideal for CI/CD pipelines where the CI platform manages credentials.

Volume-Mounted Credentials (Development)

This command makes your local credentials available inside the container by mounting your config file from your host machine. The :ro flag makes it read-only, preventing the containerized process from modifying your credentials.

This pattern is helpful during local development when using your existing authentication.

Device Code Flow (Interactive Use)

- Ideal when browser-based OAuth isn't available

- Works in containerized environments

- Requires separate device for auth

The device code flow displays a code you enter in a browser on any device, making it perfect for containers where you can't open a browser directly.

Security considerations for containers

Never bake credentials into container images

A common but dangerous mistake is embedding credentials directly in your Docker image. Here's an example of what NOT to do:

This pattern is dangerous because:

- The API key becomes part of the container image

- Anyone who pulls the image can extract the key:

- You cannot rotate the credential without rebuilding the image

- The credential is visible in your Dockerfile and build logs

Instead, always pass credentials at runtime. Define environment variables in your Dockerfile:

and pass them into the container:

Additional security measures

Read-only credential mounts: When mounting credential files, always use read-only mode (:ro) to prevent containerized processes from modifying them.

Docker secrets: For Docker Swarm deployments, use Docker's built-in secrets management:
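Inside the container, the CLI can then look for credentials in a fixed order. This sketch checks three sources:

1. An explicit <NAME>_FILE path. This is a convention several official images follow, such as the postgres image's POSTGRES_PASSWORD_FILE.
2. The /run/secrets/<name> file where Docker Swarm mounts secrets.
3. A plain environment variable, checked last.

The lowercase secret name, the lookup order, and the function name are assumptions.

```typescript
import { existsSync, readFileSync } from "node:fs";

// Resolves a credential inside a container, preferring file-based secrets:
// 1. <NAME>_FILE: path to a file holding the secret (a convention, not a Docker feature)
// 2. <secretsDir>/<name>: where Docker Swarm mounts secrets (/run/secrets by default)
// 3. <NAME>: a plain environment variable injected at run time
function loadContainerSecret(
  name: string,
  env: NodeJS.ProcessEnv = process.env,
  secretsDir = "/run/secrets",
): string | undefined {
  const filePath = env[`${name}_FILE`];
  if (filePath) return readFileSync(filePath, "utf8").trim();
  const swarmPath = `${secretsDir}/${name.toLowerCase()}`;
  if (existsSync(swarmPath)) return readFileSync(swarmPath, "utf8").trim();
  return env[name];
}
```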

Environment variable hygiene: Clear sensitive environment variables when possible and avoid printing them in logs:
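Here is a sketch of both habits in Node, using two illustrative functions:

- consumeEnvSecret reads a secret once and deletes it from process.env, so later code cannot log it and child processes do not inherit it.
- runWithoutSecrets starts a subprocess with the named variables stripped from its environment.

Deleting from process.env does not rewrite what the OS recorded at startup. On Linux, for example, /proc/<pid>/environ still shows the original environment. This limits accidental exposure rather than guaranteeing erasure.

```typescript
import { execFileSync } from "node:child_process";

// Reads a secret once and removes it from this process's environment, so later code
// can't print it by accident and child processes spawned afterwards don't inherit it.
function consumeEnvSecret(name: string): string | undefined {
  const value = process.env[name];
  delete process.env[name];
  return value;
}

// Runs a subprocess with the named secrets stripped from the environment it receives.
function runWithoutSecrets(command: string, args: string[], secretNames: string[]): string {
  const env: NodeJS.ProcessEnv = { ...process.env };
  for (const name of secretNames) {
    delete env[name];
  }
  return execFileSync(command, args, { env, encoding: "utf8" });
}
```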

Temporary tokens: Use short-lived tokens when possible, especially in containers.

Choosing the Right Pattern

For containerized environments:

- CI/CD pipelines → Environment variables (managed by CI platform) and passed into the container at runtime - NEVER “baked” into the image or hardcoded

- Local development → Volume-mounted credentials + device code flow

- Production services → Cloud provider secret management (AWS Secrets Manager, Google Secret Manager, etc.)

CLI auth anti-patterns to avoid

The most secure CLI auth implementations:

- Minimize the lifetime of sensitive credentials

- Provide a smooth user experience

- Fail securely and gracefully

Here are the common pitfalls to avoid when implementing auth in your command line tool:

- Insecure storage of long-lived credentials
  - ❌ Storing API keys in plaintext files
  - ❌ Hardcoding credentials in source code
  - ✅ Instead: Use system keychains or encrypted storage

- Unsafe local server implementation
  - ❌ Using a fixed callback port for OAuth
  - ❌ Accepting callbacks without state verification
  - ❌ Running callback server without localhost binding
  - ✅ Instead: Use dynamic ports, verify state parameter, bind to localhost only

- Poor token management
  - ❌ Sharing tokens across environments
  - ❌ Not implementing token refresh
  - ❌ Using the same token for different permission levels
  - ✅ Instead: Use environment-specific tokens with appropriate scoping

- Compromised user experience
  - ❌ Requiring re-authentication for every command
  - ❌ Mixing authentication methods inconsistently
  - ❌ No clear path to recover from authentication failures
  - ✅ Instead: Implement secure token caching and clear error recovery

- Risky environment handling
  - ❌ Using browser-based flows in CI/CD
  - ❌ Storing persistent tokens in containers
  - ❌ Sharing credentials across development and production
  - ✅ Instead: Use appropriate auth patterns for each environment

Choosing the right CLI auth pattern

The authentication pattern you choose shapes both your CLI's security posture and user experience. Remember these key guidelines for common scenarios:

- Developer tools & SDKs: Browser-based OAuth flows provide the ideal balance of security and usability. They leverage existing auth systems, support SSO/SAML integration, and offer a familiar user experience.

- CI/CD & automation: Temporary tokens with strict scoping and automatic rotation minimize risk while enabling automated workflows.

- Enterprise deployments: Device code flow combines the security benefits of OAuth with the flexibility to authenticate from any environment, including containers and headless systems.
