Composio.dev overview

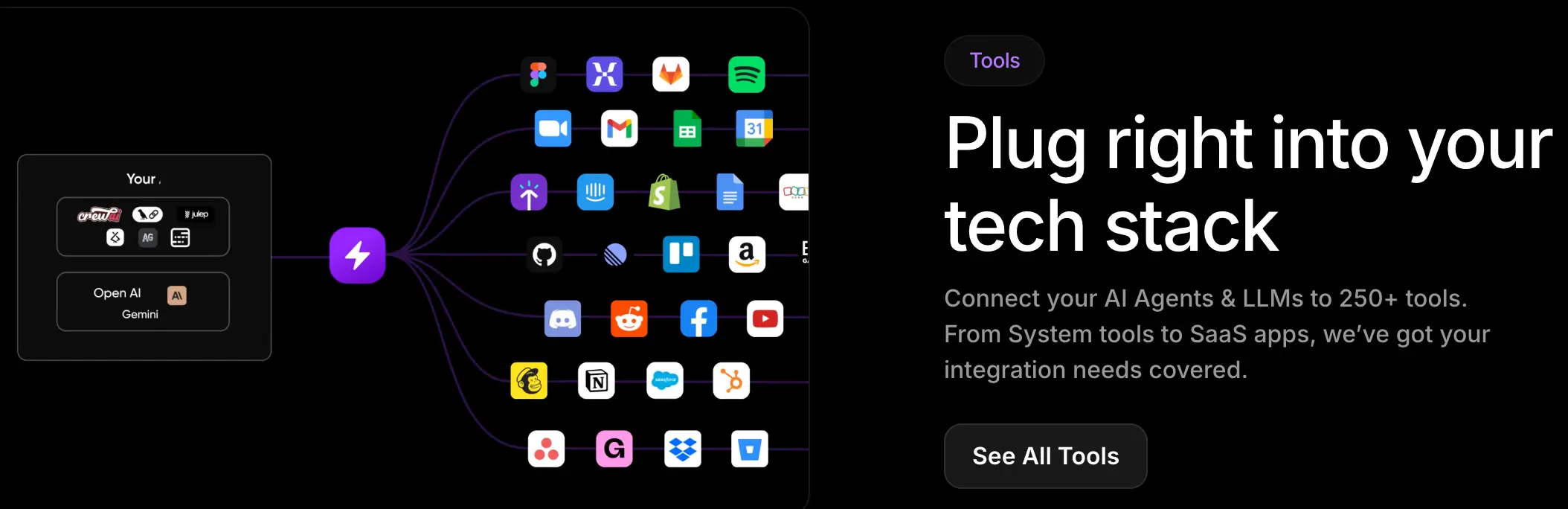

Composio.dev is a developer-focused integration platform that simplifies how AI agents and large language models (LLMs) connect with external applications and services.

Its core goal is to help teams turn LLM-driven prototypes into production-ready solutions, so they can focus on functionality and user experience instead of wrestling with complicated integrations.

Key features

Agentic integration framework

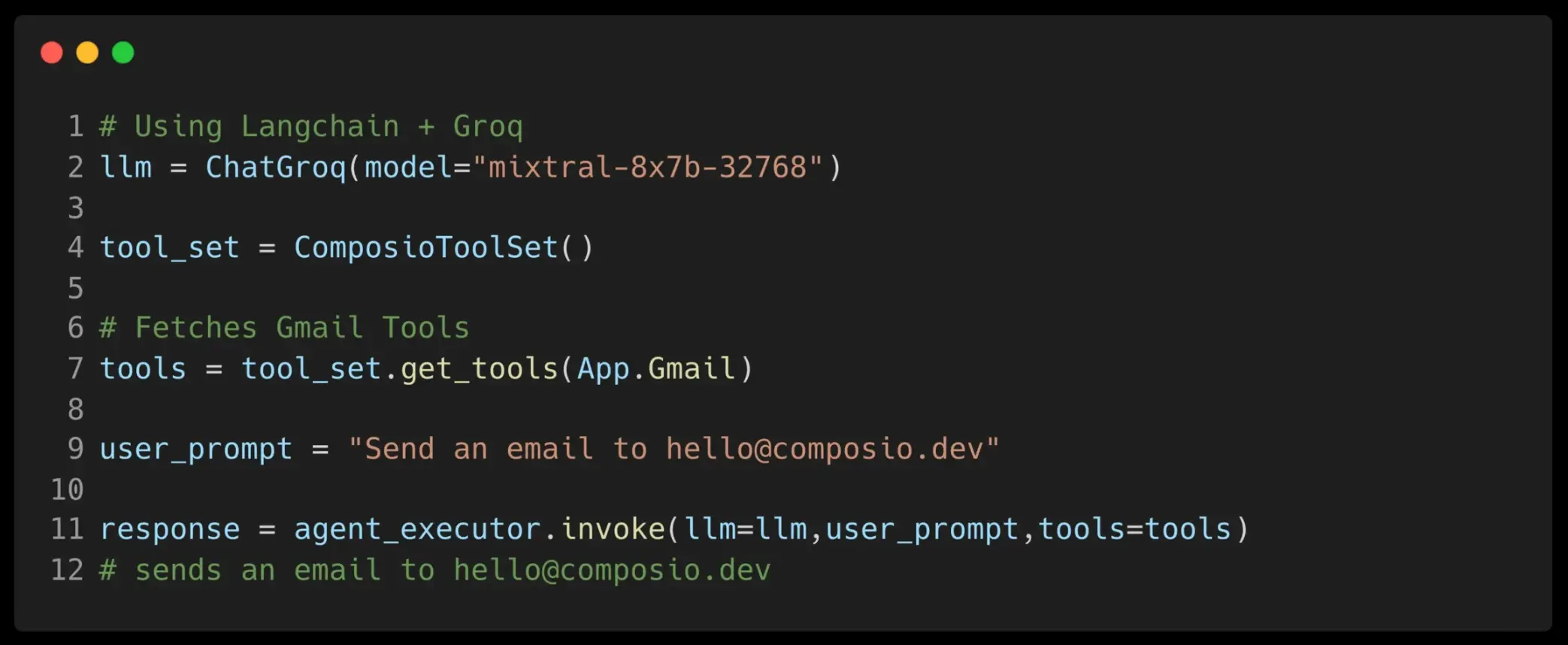

Composio provides a consistent way to connect LLMs (like OpenAI’s GPT models) with real-world tools, third-party APIs, and internal services, similar to how Arcade.dev works.

You can package integrations as “tool sets” that drop into your AI workflow—like Gmail APIs, Slack integrations, or internal business workflows.
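A tool set reaches the model as tool definitions in the function-calling format that the OpenAI Chat Completions API accepts in its `tools` parameter. The sketch below shows one such definition for a GitHub "star" action. The tool name `GITHUB_STAR_REPO` and its parameter schema are hypothetical placeholders, not Composio's actual action schema. The `missingRequiredArgs` helper is also hypothetical and only illustrates how the schema constrains the arguments a model may send.

```javascript
// Hypothetical tool definition in the OpenAI Chat Completions `tools` format.
// The name and parameter schema are placeholders, not Composio's real schema.
const starRepoTool = {
  type: 'function',
  function: {
    name: 'GITHUB_STAR_REPO', // hypothetical action name
    description: 'Star a GitHub repository for the authenticated user.',
    parameters: {
      type: 'object',
      properties: {
        owner: { type: 'string', description: 'Repository owner, e.g. "composiohq"' },
        repo: { type: 'string', description: 'Repository name, e.g. "composio"' },
      },
      required: ['owner', 'repo'],
    },
  },
};

// Hypothetical helper: list required parameters absent from the model's JSON arguments.
function missingRequiredArgs(tool, argsJson) {
  const args = JSON.parse(argsJson);
  return tool.function.parameters.required.filter((key) => !(key in args));
}

console.log(missingRequiredArgs(starRepoTool, '{"owner":"composiohq"}')); // [ 'repo' ]
```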

Unified configuration and management

Credentials, rate limits, and library dependencies are managed in one place. This centralization helps you avoid the friction of wiring up multiple SaaS platforms or data sources on your own.

Developer-first approach

Composio meets developers where they already work: a CLI, SDKs, and environment-variable configuration that fit DevOps best practices. You can fetch a tool set (for example, the GitHub tools) and integrate it into your LLM application with minimal boilerplate.

Scalability and reliability

The platform includes built-in monitoring, failover, and concurrency controls. Whether you’re handling dozens or hundreds of tasks at once, Composio aims to keep everything resilient and performant.

Use cases and case studies

Composio's use cases range from startups building MVPs overnight to large enterprises rolling out advanced AI-driven features. Its case studies show how these teams streamline integration tasks and deliver value quickly.

Security and compliance

Composio provides secure credential management and addresses common compliance needs, so you can implement AI features without worrying about the pitfalls of homegrown security solutions.

Example: Build a workflow to star a GitHub repo with an AI agent

Below is an example of how to use Composio to build a JavaScript workflow in which an LLM stars a GitHub repository and prints an ASCII octocat. Composio discovers and provides the relevant GitHub tools, and the LLM calls them as needed.

Install and configure

Install the Composio JavaScript SDK (composio-core) and the other dependencies:

```bash
npm install composio-core openai dotenv
```

Get your Composio API key

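Sign up or log in to Composio and generate an API key. Store it in your environment together with your OpenAI API key, which the code below also reads (e.g., in a .env file):

```bash
echo "COMPOSIO_API_KEY=YOUR_API_KEY" >> .env
echo "OPENAI_API_KEY=YOUR_OPENAI_API_KEY" >> .env
```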
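The JavaScript implementation in this example reads its API keys from the environment. Failing fast when a key is missing makes setup errors easier to spot than an authentication error later in the run. The `requireEnv` helper below is hypothetical and is not part of Composio or dotenv. Call it after dotenv has loaded `.env`.

```javascript
// Hypothetical helper: return the requested environment variables,
// or throw a single error listing every variable that is missing or empty.
function requireEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}

// Usage, after `import 'dotenv/config'` has populated process.env:
// const { COMPOSIO_API_KEY, OPENAI_API_KEY } =
//   requireEnv(['COMPOSIO_API_KEY', 'OPENAI_API_KEY']);
```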

Connect GitHub

Use the CLI to add your GitHub account:

```bash
npx composio-core add github
```

Follow the prompts to authenticate and link your GitHub account.

JavaScript implementation

```javascript
// Run as an ES module (e.g. index.mjs, or "type": "module" in package.json)
// so that top-level await is allowed.
import 'dotenv/config';
import OpenAI from 'openai';
import { OpenAIToolSet } from 'composio-core';

// 1. Initialize OpenAI + Composio
const client = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY, // Or however your OpenAI key is stored
});
const toolset = new OpenAIToolSet({ apiKey: process.env.COMPOSIO_API_KEY });

// 2. Discover & fetch tools matching the use case
// (use-case search as exposed by the composio-core SDK; check your SDK version)
const actions = await toolset.client.actions.findActionEnumsByUseCase({
  apps: ['github'],
  useCase: 'star a repo, print octocat',
  advanced: true,
});
const tools = await toolset.getTools({ actions });

// 3. Define the task & conversation
const task = 'star composiohq/composio and print me an octocat.';
const messages = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: task },
];

// 4. Main interaction loop, capped so a misbehaving model cannot loop forever
const MAX_TURNS = 10;
for (let turn = 0; turn < MAX_TURNS; turn++) {
  const response = await client.chat.completions.create({
    model: 'gpt-4o', // Example model
    tools,
    messages,
  });
  const { message, finish_reason } = response.choices[0];

  // If no tools are requested, this is the final answer
  if (finish_reason !== 'tool_calls') {
    console.log(message.content);
    break;
  }

  // 5. Store the assistant turn that requested the tool calls
  messages.push(message);

  // 6. Execute each tool call via Composio and send its own result back,
  //    matched to the request by tool_call_id
  for (const call of message.tool_calls) {
    const output = await toolset.executeToolCall(call);
    messages.push({
      role: 'tool',
      content: typeof output === 'string' ? output : JSON.stringify(output),
      tool_call_id: call.id,
    });
  }
}
```

How it works

Discover and fetch tools

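Composio matches your natural-language use case ("star a repo, print octocat") against the available GitHub actions and returns the best-suited ones. getTools then turns those actions into tool definitions the LLM can call.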

LLM-Driven tool calls

The LLM analyzes the conversation and decides when (and how) to invoke the GitHub “star” action or any other relevant tool.
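The model does not run anything itself. Its reply contains `tool_calls` entries, each holding a function name and a JSON string of arguments, and the client executes them. In this example Composio's toolset performs that step. The sketch below mimics it with a local handler table so the mechanics are visible. The `handlers` table, the `GITHUB_STAR_REPO` name, and `runToolCallLocally` are hypothetical stand-ins for Composio-executed actions, and no GitHub request is made.

```javascript
// Hypothetical local handlers standing in for Composio-executed actions.
const handlers = {
  GITHUB_STAR_REPO: ({ owner, repo }) => ({ starred: `${owner}/${repo}` }),
};

// Execute one tool call in the shape the Chat Completions API returns:
// { id, type: 'function', function: { name, arguments: '<JSON string>' } }
function runToolCallLocally(call) {
  const handler = handlers[call.function.name];
  if (!handler) {
    return JSON.stringify({ error: `Unknown tool: ${call.function.name}` });
  }
  const args = JSON.parse(call.function.arguments);
  // Tool results are sent back to the model as strings
  return JSON.stringify(handler(args));
}

const call = {
  id: 'call_1',
  type: 'function',
  function: { name: 'GITHUB_STAR_REPO', arguments: '{"owner":"composiohq","repo":"composio"}' },
};
console.log(runToolCallLocally(call)); // {"starred":"composiohq/composio"}
```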

Context updates

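Each turn appends both the LLM's tool-call requests and the results of those calls to messages, creating a feedback loop. The model sees every result before choosing its next step, and the loop ends once it replies without requesting a tool.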
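The feedback loop can be exercised without network access by replacing the OpenAI client with a scripted fake. In this sketch, `makeFakeModel` and `runAgent` are hypothetical. The fake model's first reply requests one tool call, and its second reply is a final answer that quotes the tool result it was given. The point is the message sequence:

- an assistant message carrying `tool_calls`;
- one `tool` message per call, with the matching `tool_call_id`;
- the final answer.

```javascript
// Hypothetical scripted model: first turn requests a tool, second turn answers.
function makeFakeModel() {
  let turn = 0;
  return async function fakeModel(messages) {
    turn += 1;
    if (turn === 1) {
      return {
        finish_reason: 'tool_calls',
        message: {
          role: 'assistant',
          content: null,
          tool_calls: [{
            id: 'call_1',
            type: 'function',
            function: { name: 'GITHUB_STAR_REPO', arguments: '{"owner":"composiohq","repo":"composio"}' },
          }],
        },
      };
    }
    // The tool result appended on the previous turn is visible here
    const toolMsg = messages.find((m) => m.role === 'tool');
    return { finish_reason: 'stop', message: { role: 'assistant', content: `Done: ${toolMsg.content}` } };
  };
}

// Same loop shape as the example: call the model, run tools, append results.
async function runAgent(model, runTool, task, maxTurns = 5) {
  const messages = [{ role: 'user', content: task }];
  for (let i = 0; i < maxTurns; i++) {
    const choice = await model(messages);
    messages.push(choice.message);
    if (choice.finish_reason !== 'tool_calls') {
      return { answer: choice.message.content, messages };
    }
    for (const call of choice.message.tool_calls) {
      messages.push({ role: 'tool', tool_call_id: call.id, content: runTool(call) });
    }
  }
  throw new Error('Agent did not finish within maxTurns');
}

runAgent(makeFakeModel(), () => '{"starred":true}', 'star composiohq/composio')
  .then(({ answer, messages }) => {
    console.log(answer); // Done: {"starred":true}
    console.log(messages.map((m) => m.role).join(' -> ')); // user -> assistant -> tool -> assistant
  });
```

Capping the loop with `maxTurns` mirrors the guard in the main example, so a model that keeps requesting tools cannot run indefinitely.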