IBM’s Agent Communication Protocol (ACP): A technical overview for software engineers

IBM Research’s Agent Communication Protocol (ACP) provides autonomous agents with a common “wire format” for talking to each other. But how does it differ from MCP and A2A?

Why ACP and why now?

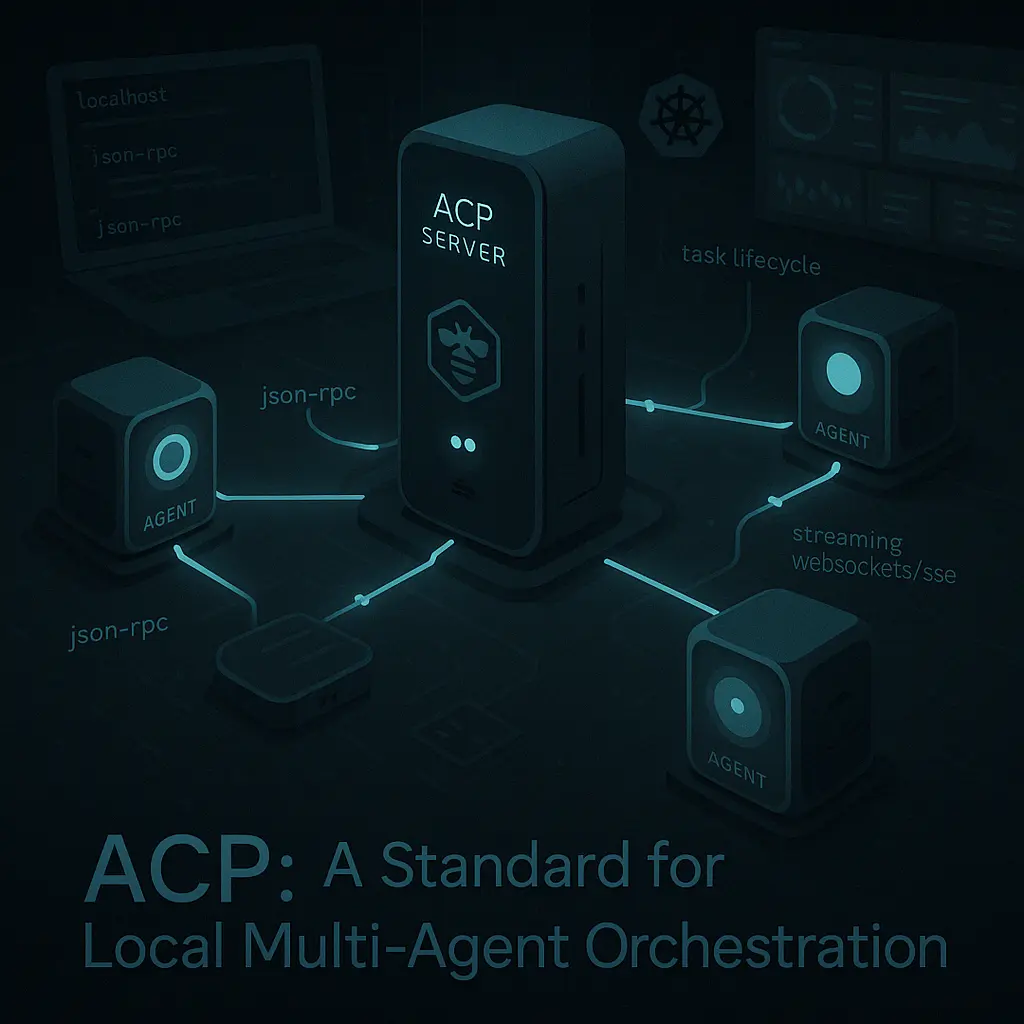

Born within the open-source BeeAI project, ACP tackles a pain point we face when an LLM-powered bot needs to call another bot: every framework invents its own JSON shape, authentication story, and streaming hack.

ACP proposes one vocabulary (JSON‑RPC over HTTP/WebSockets) plus a small control plane so your agents can discover, authenticate, and cooperate without bespoke glue code.
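To make the "one vocabulary" idea concrete, here is a minimal sketch of JSON-RPC 2.0 request and response envelopes exchanged between two agents. The envelope fields (jsonrpc, id, method, params, result, error) come from the JSON-RPC 2.0 specification. The method name agents/run and the params layout are hypothetical placeholders, not ACP's published schema.

```python
import itertools
import json
from typing import Any

# Monotonic request ids so a caller can match responses to requests.
_ids = itertools.count(1)


def make_request(method: str, params: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request envelope."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": method,
        "params": params,
    })


def make_result(request_id: int, result: Any) -> str:
    """Serialize a successful JSON-RPC 2.0 response."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


def make_error(request_id: int, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "error": {"code": code, "message": message},
    })


# Hypothetical method name and params; ACP's real method names may differ.
req = make_request("agents/run", {"agent": "summarizer", "input": "Summarize Q3 results"})
print(req)
```

Because every agent speaks the same envelope, a caller only needs the peer's method names and parameter schemas. Error replies reuse JSON-RPC's reserved codes, such as -32601 for "Method not found".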

From MCP to ACP

Anthropic’s Model Context Protocol (MCP) standardizes model-to-tool wiring—the “USB-C port” that lets an LLM mount data sources and APIs. ACP moves one layer up the stack: it defines agent‑to‑agent messaging, task hand‑off, and lifecycle, picking up where MCP leaves off.

Google’s Agent-to-Agent (A2A) spec focuses on cross-vendor discovery on the public internet.

ACP starts local-first, optimizing for clusters or laptops running multiple cooperating agents.

For a comparison of all three, see "MCP, ACP, A2A, oh my!"

Architectural foundations of ACP

BeeAI keeps everything local-first. For instance, you can spin up a lightweight Ollama server (a wrapper around llama.cpp that pulls and hosts open-source models with a single command) and load quantized models in the GGUF format, a compact binary container optimized for fast inference on commodity CPUs and GPUs.
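Before any agent depends on the local model server, it can help to confirm that the server answers. The sketch below targets Ollama's /api/generate REST endpoint at its default address, http://localhost:11434, and uses only the Python standard library. The model name llama3.2 is an assumption. Substitute whichever model you have pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # With "stream": False, Ollama returns one JSON object whose "response" holds the text.
        return json.loads(resp.read())["response"]
```

With the server running and the model pulled, generate("llama3.2", "Say hello in one sentence.") returns the model's reply as a string. Setting "stream" to False keeps the example simple. Agents that want incremental output would read Ollama's streamed JSON lines instead.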

Key protocol capabilities

- Dynamic discovery – Agents advertise a short manifest; the server auto‑indexes them so peers can look up functions and schemas.

- Task delegation & routing – A structured message envelope carries a task ID, metadata, and an optional stream channel so work can be chunked or resumed.

- Stateful sessions – Pre‑alpha ACP adds persistent contexts so a long‑running planner agent can survive restarts.

- Security

  - Capability tokens – Unforgeable, signed objects that encode the resource type, the permitted operations, and an expiry time; any agent holding the verification key can check them cheaply.

  - Kubernetes RBAC bridge – Map capability claims onto existing cluster roles to avoid a new policy silo.

- Observability – All calls are instrumented with OpenTelemetry and exported over OTLP; BeeAI ships traces to Arize Phoenix out of the box.
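The capability-token idea can be shown with a minimal sketch. The issuer signs a small JSON claim (resource, allowed operations, expiry) with an HMAC, and any agent holding the same key can verify it locally without a database lookup. The claim field names, the shared-secret HMAC scheme, and the payload.signature layout are all assumptions made for illustration. A production design would more likely use asymmetric signatures so that verifiers never hold the signing key.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-shared-secret"  # hypothetical key; load from a secret store in practice


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def issue_token(resource: str, ops: list[str], ttl_s: int, key: bytes = SECRET) -> str:
    """Create 'payload.signature', where payload encodes resource, ops, and expiry."""
    claims = {"resource": resource, "ops": sorted(ops), "exp": int(time.time()) + ttl_s}
    payload = json.dumps(claims, separators=(",", ":")).encode("utf-8")
    sig = hmac.new(key, payload, hashlib.sha256).digest()
    return f"{_b64(payload)}.{_b64(sig)}"


def check_token(token: str, resource: str, op: str, key: bytes = SECRET) -> bool:
    """Return True only if the signature is valid, unexpired, and grants op on resource."""
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong shape or invalid base64 (binascii.Error is a ValueError)
        return False
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return False  # forged or tampered token
    claims = json.loads(payload)
    if claims["exp"] < time.time():
        return False  # expired
    return claims["resource"] == resource and op in claims["ops"]


token = issue_token("orders", ["read"], ttl_s=300)
print(check_token(token, "orders", "read"), check_token(token, "orders", "write"))
```

A delegating agent passes the token with each call, and the receiving agent runs check_token before doing any work. The constant-time hmac.compare_digest avoids leaking signature bytes through timing.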

ACP vs. its peers

ACP intentionally re‑uses MCP’s message types where possible; nothing stops you from running MCP inside an ACP payload if an agent needs external data.
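The following sketch shows what running MCP inside an ACP payload might look like. The inner message is an MCP tools/call request, which is a real MCP method and is itself JSON-RPC 2.0. The outer envelope, with its taskId, metadata, and payloadType fields, is a hypothetical ACP-style wrapper invented for this example, and the tool name search_invoices is made up.

```python
import json
import uuid
from typing import Any


def mcp_tool_call(tool: str, arguments: dict[str, Any], request_id: int = 1) -> dict[str, Any]:
    """An MCP 'tools/call' request (a JSON-RPC 2.0 message)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


def wrap_in_envelope(inner: dict[str, Any], sender: str) -> str:
    """Hypothetical ACP-style envelope carrying the MCP message as an opaque payload."""
    return json.dumps({
        "taskId": str(uuid.uuid4()),
        "metadata": {"sender": sender, "payloadType": "mcp"},
        "payload": inner,
    })


def unwrap(envelope: str) -> dict[str, Any]:
    """Extract the inner MCP message so it can be forwarded to an MCP server unchanged."""
    data = json.loads(envelope)
    if data["metadata"].get("payloadType") != "mcp":
        raise ValueError("not an MCP payload")
    return data["payload"]


env = wrap_in_envelope(mcp_tool_call("search_invoices", {"vendor": "ACME"}), sender="planner")
print(unwrap(env)["method"])  # prints tools/call
```

Because the receiving agent treats the payload as opaque and forwards it unchanged, the ACP layer does not need to understand MCP semantics. It only routes and tracks the task.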

Likewise, nothing prevents an ACP agent from exporting a Google Agent Card, allowing it to participate in an A2A mesh.
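Exporting an Agent Card is mostly a field-mapping exercise. The sketch below converts a hypothetical ACP-style manifest into a dictionary shaped like an A2A Agent Card. The manifest keys are invented for this example. The card fields used here (name, description, url, version, capabilities, skills, defaultInputModes, defaultOutputModes) reflect our reading of the A2A specification and should be checked against the spec version you target.

```python
import json
from typing import Any

# Hypothetical ACP-style manifest; these keys are illustrative, not a published schema.
manifest = {
    "name": "invoice-checker",
    "description": "Validates purchase orders against spending policy.",
    "version": "0.1.0",
    "endpoint": "http://localhost:8000",
    "functions": [
        {"name": "check_po", "description": "Check a purchase order for policy violations."},
    ],
    "streaming": True,
}


def to_agent_card(m: dict[str, Any]) -> dict[str, Any]:
    """Map manifest fields onto an A2A-style Agent Card (verify against the current spec)."""
    return {
        "name": m["name"],
        "description": m["description"],
        "url": m["endpoint"],
        "version": m["version"],
        "capabilities": {"streaming": m.get("streaming", False)},
        "defaultInputModes": ["text"],
        "defaultOutputModes": ["text"],
        # Each exposed function becomes one advertised skill.
        "skills": [
            {"id": f["name"], "name": f["name"], "description": f["description"], "tags": []}
            for f in m.get("functions", [])
        ],
    }


print(json.dumps(to_agent_card(manifest), indent=2))
```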

Implementation details

SDKs and tooling

acp-python-sdk, acp-typescript-sdk

Thin async clients for issuing calls, subscribing to streams, and validating manifests.

BeeAI CLI / UI

Launch an agent with beeai run my-agent; the graphical chat UI at localhost:8333 lets you inspect logs and traces.
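The manifest validation mentioned for the SDKs, together with the server-side auto-indexing from the dynamic-discovery capability, can be sketched with the standard library alone. The required keys, the function-entry shape, and the ManifestIndex class are hypothetical stand-ins, not the acp-python-sdk API.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical minimal manifest schema: key -> expected type.
REQUIRED_KEYS: dict[str, type] = {"name": str, "version": str, "functions": list}


def validate_manifest(m: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the manifest is acceptable."""
    errors = []
    for key, typ in REQUIRED_KEYS.items():
        if key not in m:
            errors.append(f"missing '{key}'")
        elif not isinstance(m[key], typ):
            errors.append(f"'{key}' should be {typ.__name__}")
    funcs = m.get("functions")
    if isinstance(funcs, list):
        errors += [
            f"function #{i} has no 'name'"
            for i, fn in enumerate(funcs)
            if not isinstance(fn, dict) or "name" not in fn
        ]
    return errors


@dataclass
class ManifestIndex:
    """In-memory index mapping function names to the agents that provide them."""
    by_function: dict[str, set[str]] = field(default_factory=dict)

    def register(self, m: dict[str, Any]) -> None:
        problems = validate_manifest(m)
        if problems:
            raise ValueError(f"invalid manifest for {m.get('name')!r}: {problems}")
        for fn in m["functions"]:
            self.by_function.setdefault(fn["name"], set()).add(m["name"])

    def lookup(self, function: str) -> set[str]:
        return self.by_function.get(function, set())


index = ManifestIndex()
index.register({"name": "crawler", "version": "1.0", "functions": [{"name": "fetch_url"}]})
index.register({"name": "indexer", "version": "0.3", "functions": [{"name": "embed"}, {"name": "fetch_url"}]})
print(sorted(index.lookup("fetch_url")))  # prints ['crawler', 'indexer']
```

Rejecting malformed manifests at registration time keeps the index trustworthy, so a peer doing a lookup never gets routed to an agent that cannot describe its own functions.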

Agent lifecycle

INITIALIZING → ACTIVE → DEGRADED → RETIRING → RETIRED

Lifecycle metadata (version, createdBy, successorAgent) is emitted as OpenTelemetry spans, so ops teams can automate rollouts or garbage-collect zombie agents.
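One way to enforce the lifecycle above is an explicit transition table. The sequence above is linear, so the extra edges in this sketch are assumptions: a DEGRADED agent may recover to ACTIVE, and an agent may move to RETIRING straight from INITIALIZING. Adjust the table to your own policy. The AgentLifecycle class is hypothetical.

```python
from enum import Enum


class State(Enum):
    INITIALIZING = "INITIALIZING"
    ACTIVE = "ACTIVE"
    DEGRADED = "DEGRADED"
    RETIRING = "RETIRING"
    RETIRED = "RETIRED"


# Assumed transition table; RETIRED is terminal.
ALLOWED: dict[State, set[State]] = {
    State.INITIALIZING: {State.ACTIVE, State.RETIRING},
    State.ACTIVE: {State.DEGRADED, State.RETIRING},
    State.DEGRADED: {State.ACTIVE, State.RETIRING},
    State.RETIRING: {State.RETIRED},
    State.RETIRED: set(),
}


class AgentLifecycle:
    def __init__(self, name: str, version: str) -> None:
        self.name = name
        self.version = version
        self.state = State.INITIALIZING
        self.history: list[State] = [self.state]

    def transition(self, target: State) -> None:
        """Move to target, rejecting any transition not listed in ALLOWED."""
        if target not in ALLOWED[self.state]:
            raise ValueError(f"{self.name}: illegal transition {self.state.name} -> {target.name}")
        self.state = target
        self.history.append(target)
        # A real system would emit an OpenTelemetry span or event here,
        # tagged with attributes such as the agent name and version.


agent = AgentLifecycle("planner", "1.2.0")
for s in (State.ACTIVE, State.DEGRADED, State.ACTIVE, State.RETIRING, State.RETIRED):
    agent.transition(s)
# prints ['INITIALIZING', 'ACTIVE', 'DEGRADED', 'ACTIVE', 'RETIRING', 'RETIRED']
print([s.name for s in agent.history])
```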

Communication patterns

- Sync: plain HTTP POST returning JSON.

- Async: fire‑and‑forget with taskId; poll or subscribe for progress.

- Streaming: server pushes incremental delta messages over WebSockets/SSE—ideal for long RAG chains writing partial answers.
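The async and streaming patterns can be simulated in-process to show the control flow. In this sketch, a hypothetical TaskServer returns a taskId immediately, the client polls for status, and a separate async generator yields incremental deltas the way a WebSocket or SSE transport would. No real network transport or ACP SDK call is involved.

```python
import asyncio
import uuid
from typing import AsyncIterator


class TaskServer:
    """Hypothetical in-memory stand-in for an agent that accepts async tasks."""

    def __init__(self) -> None:
        self.tasks: dict[str, dict] = {}
        self._background: set[asyncio.Task] = set()  # keep references so tasks are not GC'd

    def submit(self, prompt: str) -> str:
        """Fire-and-forget: schedule the work and return a taskId immediately."""
        task_id = str(uuid.uuid4())
        self.tasks[task_id] = {"status": "running", "result": None}
        task = asyncio.get_running_loop().create_task(self._work(task_id, prompt))
        self._background.add(task)
        task.add_done_callback(self._background.discard)
        return task_id

    async def _work(self, task_id: str, prompt: str) -> None:
        await asyncio.sleep(0.05)  # simulate model latency
        self.tasks[task_id] = {"status": "completed", "result": prompt.upper()}

    def status(self, task_id: str) -> dict:
        """What a polling client would fetch for a given taskId."""
        return self.tasks[task_id]

    async def stream(self, prompt: str) -> AsyncIterator[dict]:
        """Streaming: yield the answer word by word as incremental deltas."""
        for word in prompt.split():
            await asyncio.sleep(0.01)
            yield {"delta": word + " "}


async def main() -> None:
    server = TaskServer()

    # Async pattern: submit, then poll until the task completes.
    task_id = server.submit("draft the summary")
    while (info := server.status(task_id))["status"] != "completed":
        await asyncio.sleep(0.01)
    print("async result:", info["result"])  # prints async result: DRAFT THE SUMMARY

    # Streaming pattern: assemble deltas as they arrive.
    parts = [msg["delta"] async for msg in server.stream("partial answers arrive here")]
    print("streamed:", "".join(parts).strip())  # prints streamed: partial answers arrive here


asyncio.run(main())
```

Polling is simple but adds latency and load. Subscribing to a stream, as in the second half of main, avoids both at the cost of keeping a connection open.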

Where ACP fits in real-world workflows

Think of ACP as the connective tissue you reach for whenever a set of heterogeneous agents needs to behave like a tight, in‑house micro‑service cluster—even when those agents come from different vendors or open‑source projects.

Enterprise workflows

In an enterprise automation setting, a workflow orchestration bot might receive a purchase order event, hand it to a policy-checking agent for compliance, and then pass the approved order to a reporting agent that closes the books.

Because each step is wrapped in ACP, the finance team can mix IBM’s own agents with domain‑specific Python services the developers already trust. At the same time, BeeAI’s capability tokens keep every call scoped to the minimum permissions required.
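The purchase-order flow can be sketched as a sequential pipeline in which the orchestrator refuses to run any step whose scope was not granted. The two agent functions, the scope strings, and the 10,000 approval limit are hypothetical. In a real deployment, each step would be a remote ACP call rather than a local Python function, and the scope check would use capability tokens rather than a plain set.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PurchaseOrder:
    po_id: str
    vendor: str
    amount: float
    approved: bool = False


def policy_check(po: PurchaseOrder) -> PurchaseOrder:
    """Stand-in for the policy-checking agent."""
    po.approved = po.amount <= 10_000  # illustrative compliance rule
    return po


def report(po: PurchaseOrder) -> PurchaseOrder:
    """Stand-in for the reporting agent that closes the books."""
    if not po.approved:
        raise RuntimeError(f"{po.po_id} was not approved; nothing to book")
    print(f"booked {po.po_id}: {po.vendor} {po.amount:.2f}")
    return po


# Each step is paired with the single scope it needs (hypothetical scope names).
PIPELINE: list[tuple[str, Callable[[PurchaseOrder], PurchaseOrder]]] = [
    ("policy:read", policy_check),
    ("ledger:write", report),
]


def run_pipeline(po: PurchaseOrder, granted: set[str]) -> PurchaseOrder:
    """The orchestration bot: run steps in order, refusing any step without its scope."""
    for scope, step in PIPELINE:
        if scope not in granted:
            raise PermissionError(f"missing scope {scope!r} for {step.__name__}")
        po = step(po)
    return po


# prints booked PO-1: ACME 4200.00
run_pipeline(PurchaseOrder("PO-1", "ACME", 4200.0), granted={"policy:read", "ledger:write"})
```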

Security teams

Security teams use a similar pattern: an ingest agent streams log lines from CrowdStrike, passes suspicious hashes to a threat intelligence agent for enrichment, and then routes confirmed indicators to a mitigation planner, which creates Jira tickets or triggers Terraform pull requests.

All three pieces are decoupled yet auditable because ACP’s observability hooks push traces into the SOC’s existing OTEL pipeline.
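The three decoupled agents are auditable because they share one trace. The sketch below shows the mechanism using the W3C Trace Context traceparent header (version-traceid-spanid-flags), which OpenTelemetry propagates by default. Each hop keeps the trace ID and mints a fresh span ID. The agent names come from the SOC example above. In practice, an OpenTelemetry SDK would generate and inject these headers for you.

```python
import re
import secrets

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a trace: version 00, random 16-byte trace id, random 8-byte span id, sampled."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Keep the incoming trace id and flags, but mint a new span id for this hop."""
    m = TRACEPARENT_RE.match(parent)
    if m is None:
        raise ValueError(f"malformed traceparent: {parent!r}")
    trace_id, _parent_span, flags = m.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


# Ingest -> threat intelligence -> mitigation planner.
hops = {"ingest": new_traceparent()}
hops["threat-intel"] = child_traceparent(hops["ingest"])
hops["mitigation-planner"] = child_traceparent(hops["threat-intel"])
for agent_name, header in hops.items():
    print(f"{agent_name:20} traceparent: {header}")
```

Because every hop carries the same trace ID, the SOC's tracing backend can stitch the ingest, enrichment, and mitigation spans into a single timeline.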

Data scientists and researchers

For data‑science squads, ACP shines when you want a private research group on your laptop: spin up a crawler agent, a vector‑index builder, and a notebook‑authoring agent in BeeAI; they discover each other automatically and trade JSON‑RPC calls over localhost.

When the analysis is done, you can retire the agents cleanly, leaving no orphaned sockets or locked GPUs behind.

Creatives and development teams

Creative and engineering teams are already wiring ACP‑compatible code‑generation, review, and design agents into a loop: a spec‑to‑code generator writes the first draft, a static‑analysis agent flags issues, and a UI‑review agent suggests visual tweaks.

Private, air-gapped workflows

Because everything is local‑first, you keep proprietary IP inside the company network while still benefiting from best‑of‑breed open‑source agents.

Getting started with ACP (via BeeAI): the official quick-start

Spinning up a local ACP testbed now takes two commands on any Homebrew‑enabled machine: install the BeeAI service tap, start it, and you’re ready to point a browser or terminal at a freshly booted cluster of reference agents.

Step 1. Install and start the BeeAI daemon

```shell
brew install i-am-bee/beeai/beeai  # installs BeeAI plus its launch agent
brew services start beeai          # runs it in the background at boot
```

Homebrew handles upgrades (brew upgrade beeai) and restarts (brew services restart beeai) for you.

Step 2. Configure your local LLM provider

```shell
beeai env setup  # select a provider:
                 #   • Ollama – run models locally via http://localhost:11434
                 #   • OpenAI / Together.ai – call hosted APIs
```

This one-time wizard stores API keys and model endpoints in ~/.config/beeai/env.

Step 3. Launch the web UI (optional but handy)

```shell
beeai ui  # opens http://localhost:8333
```

The UI lets you inspect running agents, stream logs, and issue ad‑hoc ACP calls from a chat console.
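If you script this setup, for example in CI, a small readiness probe helps avoid racing the service. The sketch below polls until something accepts TCP connections on a given port. It only tests connectivity and does not confirm that the process on the port is BeeAI. The function name wait_for_port is our own.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 10.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.25)  # not accepting connections yet; retry shortly
    return False
```

For example, wait_for_port("localhost", 8333) returns True once the service behind http://localhost:8333 is accepting connections, and False if it never comes up within the timeout.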

Step 4. Work with the CLI

```shell
beeai --help              # show all commands
beeai list                # enumerate installed agents
beeai run chat            # start the reference chat agent
beeai compose sequential  # chain agents into a mini-pipeline
```

Because every CLI command is just a wrapper around ACP JSON-RPC calls, you can swap in your own agents or wire the CLI into CI without changing a line of code.

Step 5. Dig deeper

- Full docs – https://docs.beeai.dev covers advanced transports, capability tokens, and OTEL hooks.

- Agent library – Browse ready‑made scraping, RAG, or code‑review agents at https://beeai.dev/agents.

Final thoughts

IBM’s ACP gives developers a pragmatic on‑ramp to the multi‑agent future: a protocol that feels as lightweight as REST yet is opinionated enough to keep discovery, security, and observability consistent across a swarm of heterogeneous agents.