Understanding MCP features: Tools, Resources, Prompts, Sampling, Roots, and Elicitation

Learn how to leverage Model Context Protocol’s six core features to build secure and scalable AI applications.

Model Context Protocol (MCP) is quickly becoming a foundational layer for enabling large language models (LLMs) to interact with external tools and data. Whether you're building a custom MCP server or integrating with one as a client, understanding its features is essential to fully leverage its capabilities.

This guide walks you through the six core MCP features—Tools, Resources, Prompts, Sampling, Roots, and Elicitation—and explains how and when to use each. Let’s dive right in.

Part 1: Building MCP servers

When you build an MCP server, you’re exposing capabilities to clients. Your job is to make it easy for clients to query data, invoke actions, and guide the model with reusable instructions. MCP provides three main features for this.

1. Tools: What your server can do

Tools are specific actions your MCP server exposes to a client, like built-in functions or APIs that an LLM can call during a conversation. When a user asks for something that requires doing work (fetching data, triggering an operation, or performing a calculation), the model chooses the appropriate tool to handle it.

Each tool is defined by a schema and performs a specific operation with typed inputs (and, optionally, typed outputs).

MCP is designed with a human in the loop: hosts should let users approve or deny each tool invocation, so that users stay in control at all times.

For example, this is the definition of a tool that books a hotel room:
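The original example is not reproduced here. The sketch below shows what such a definition could look like, written as a Python dict that mirrors the JSON a server would return from tools/list. The `name`, `title`, `description`, and `inputSchema` keys follow the MCP tool format. The tool name `book_hotel`, its parameters, and the `missing_required` helper are hypothetical.

```python
# Hypothetical hotel-booking tool, shaped like one entry in a tools/list result.
# inputSchema is a plain JSON Schema object describing the tool's typed arguments.
BOOK_HOTEL_TOOL = {
    "name": "book_hotel",  # hypothetical tool name
    "title": "Book a hotel room",
    "description": "Reserve a room at a given hotel for the specified dates.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "hotelId": {"type": "string", "description": "Identifier of the hotel"},
            "checkIn": {"type": "string", "format": "date"},
            "checkOut": {"type": "string", "format": "date"},
            "guests": {"type": "integer", "minimum": 1},
        },
        "required": ["hotelId", "checkIn", "checkOut"],
    },
}


def missing_required(tool: dict, arguments: dict) -> list:
    """Return required argument names absent from `arguments`.

    This is a shallow check only; a real server would run full JSON Schema validation.
    """
    return [k for k in tool["inputSchema"].get("required", []) if k not in arguments]
```

For instance, `missing_required(BOOK_HOTEL_TOOL, {"hotelId": "h-123"})` returns `["checkIn", "checkOut"]`, which a server could report back instead of attempting a half-specified booking.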

For tools, the protocol defines two methods:

- tools/list: Used to discover the tools a server supports. Returns an array of tool definitions with their schemas.

- tools/call: Used to execute a specific tool. Returns the tool’s execution result.
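MCP messages travel as JSON-RPC 2.0 objects. Here is a minimal sketch of the two requests a client might send, plus the shape of a successful tools/call response. The tool name, its arguments, and the response text are hypothetical, and the `jsonrpc_request` helper is only an illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids only need to be unique per session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the envelope MCP messages use."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Discover the server's tools.
list_req = jsonrpc_request("tools/list")

# 2. Call one tool by name, with arguments that match its inputSchema.
call_req = jsonrpc_request(
    "tools/call",
    {
        "name": "book_hotel",  # hypothetical tool name
        "arguments": {"hotelId": "h-123", "checkIn": "2025-03-10", "checkOut": "2025-03-14"},
    },
)

# Hypothetical server reply: the result carries a list of typed content blocks.
example_call_result = {
    "jsonrpc": "2.0",
    "id": call_req["id"],
    "result": {
        "content": [{"type": "text", "text": "Room booked at h-123."}],
        "isError": False,
    },
}

print(json.dumps(list_req))
print(json.dumps(call_req))
```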

How to combine Tools

Tools enable AI applications to perform actions on behalf of users, and they can be combined to handle multi-step processes. Because the host should ask the user to approve each tool invocation, every action stays transparent and under the user’s control.

Imagine a travel planning assistant using several MCP tools to help book a vacation:

- Search for flights: The MCP server queries multiple airlines and returns structured flight options for the user to choose.

- Book a hotel: Once a flight is selected, the MCP server returns available hotel options, and after user approval, finalizes the booking.

- Add the stay to the calendar: After user approval, the MCP server adds the stay to the user’s calendar.

- Send out-of-office notifications: The MCP server sends out-of-office notifications for the duration of the trip.
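One way to picture this chaining is a loop where each step's arguments can depend on earlier results and every call passes through an approval gate. The sketch below is an illustration of that idea, not part of the MCP API. The tool names and the lambdas standing in for tools/call round trips are hypothetical.

```python
# Hypothetical stand-ins for tools/call round trips to a travel MCP server.
TOOLS = {
    "search_flights": lambda a: {"flightId": "FL-100"},
    "book_hotel": lambda a: {"bookingId": "HT-200", "nights": a["nights"]},
    "add_calendar_event": lambda a: {"eventId": "EV-300"},
    "send_out_of_office": lambda a: {"sent": True},
}


def run_plan(steps, approve):
    """Run tools in order, asking the user before each call; stop at the first denial.

    steps: list of (tool_name, build_args), where build_args receives earlier results.
    approve: callback(tool_name, args) -> bool, standing in for the host's approval UI.
    """
    results = []
    for name, build_args in steps:
        args = build_args(results)  # later steps can use earlier outputs
        if not approve(name, args):  # human-in-the-loop gate before every call
            break
        results.append(TOOLS[name](args))
    return results


plan = [
    ("search_flights", lambda r: {"from": "SFO", "to": "FCO"}),
    ("book_hotel", lambda r: {"nearFlight": r[0]["flightId"], "nights": 4}),
    ("add_calendar_event", lambda r: {"hotelBooking": r[1]["bookingId"]}),
    ("send_out_of_office", lambda r: {"days": r[1]["nights"]}),
]

# Approve every step except the out-of-office notification.
done = run_plan(plan, approve=lambda name, args: name != "send_out_of_office")
```

Because the loop stops at the first denial, the user can halt the workflow at any step and no later tool runs against stale assumptions.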

2. Resources: What your server knows and shares

Resources represent read-only, persistent data that your server exposes to clients. The sources of this data can be files, APIs, databases, or any other source. Unlike tools, resources don’t perform actions. Instead, they make information available for browsing, searching, and referencing.

Resources are essential for enabling models to look up facts or structured information without triggering an operation.

Each MCP server decides which collections or documents to expose as resources, and MCP clients can list and fetch these resources on demand.

Each resource is identified by a unique URI (e.g., file:///path/to/document.md) and can declare a MIME type, allowing clients to handle different content formats correctly (e.g., Markdown, JSON, PDF).

For example, an MCP server can share an employee directory:
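The original example is not reproduced here. The sketch below shows how such a resource could be described in a resources/list entry, along with a toy resources/read handler that returns its contents. The `hr://` URI scheme, the field values, and the directory data are all hypothetical.

```python
import json

# Hypothetical resource entry, as it might appear in a resources/list result.
EMPLOYEE_DIRECTORY = {
    "uri": "hr://employees/directory",  # hypothetical custom URI scheme
    "name": "employeeDirectory",
    "title": "Employee directory",
    "description": "Names, roles, and teams for all employees",
    "mimeType": "application/json",
}

# Hypothetical sample data behind the resource.
_DIRECTORY_DATA = [
    {"name": "Ana Ruiz", "role": "Engineering Lead", "team": "Mobile App"},
    {"name": "Ben Okafor", "role": "Designer", "team": "Mobile App"},
]


def read_resource(uri: str) -> dict:
    """Sketch of a resources/read handler: return the contents for a known URI."""
    if uri != EMPLOYEE_DIRECTORY["uri"]:
        raise KeyError(f"unknown resource: {uri}")
    return {
        "contents": [
            {
                "uri": uri,
                "mimeType": EMPLOYEE_DIRECTORY["mimeType"],
                "text": json.dumps(_DIRECTORY_DATA),  # text content; binary would use "blob"
            }
        ]
    }
```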

MCP also supports Resource Templates, which allow clients to dynamically access resources by filling in URI parameters. A template includes metadata like title, description, and expected MIME type, making it discoverable and self-documenting. Clients can substitute variables in the URI to query specific data.

For example, a template like travel://activities/{city}/{category} can provide access to activities in several categories for various cities, e.g., travel://activities/barcelona/museums.
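Resource templates use URI Template syntax (RFC 6570). The sketch below handles only simple `{var}` substitution, which is enough for this example. On the client side it fills the variables in, and on the server side it recovers them from a concrete URI. The template metadata values and the `expand`/`match` helpers are illustrative, not an SDK API.

```python
import re
from typing import Optional
from urllib.parse import quote, unquote

# A resource template as a server might advertise it via resources/templates/list.
ACTIVITIES_TEMPLATE = {
    "uriTemplate": "travel://activities/{city}/{category}",
    "name": "activities",
    "title": "Travel activities",
    "description": "Activities in a city, filtered by category",
    "mimeType": "application/json",
}

_VAR = re.compile(r"\{([A-Za-z_][A-Za-z0-9_]*)\}")


def expand(template: str, values: dict) -> str:
    """Client side: replace each {var}, percent-encoding characters outside the unreserved set."""
    return _VAR.sub(lambda m: quote(values[m.group(1)], safe=""), template)


def match(template: str, uri: str) -> Optional[dict]:
    """Server side: extract variable values from a concrete URI, or None if it doesn't fit."""
    pattern, pos = "", 0
    for m in _VAR.finditer(template):
        pattern += re.escape(template[pos:m.start()]) + f"(?P<{m.group(1)}>[^/]+)"
        pos = m.end()
    pattern += re.escape(template[pos:])
    found = re.fullmatch(pattern, uri)
    return {k: unquote(v) for k, v in found.groupdict().items()} if found else None
```

With these helpers, `expand(ACTIVITIES_TEMPLATE["uriTemplate"], {"city": "barcelona", "category": "museums"})` yields `travel://activities/barcelona/museums`, and `match` turns that URI back into `{"city": "barcelona", "category": "museums"}`.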

How to use Resources

Imagine an AI-powered HR assistant that helps managers find employee information quickly.

If a manager asks, “Who’s the engineering lead for the mobile app team?”, the assistant can read the employeeDirectory resource, find the lead, and respond without calling any tools.

Resources can be combined with tools. For instance, the AI-powered HR assistant might be asked to find the engineering lead and then use a sendMessage tool to notify them about a project update.
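A minimal sketch of that two-step flow follows. The lookup runs over data already read from the resource, and only the notification becomes a tools/call. The directory payload, the `sendMessage` argument names, and the helper functions are hypothetical.

```python
import json

# Hypothetical directory payload, as returned in a resources/read "text" field.
directory_text = json.dumps([
    {"name": "Ana Ruiz", "role": "Engineering Lead", "team": "Mobile App"},
    {"name": "Ben Okafor", "role": "Designer", "team": "Mobile App"},
])


def find_lead(text: str, team: str):
    """Step 1 (resource): look up the team's engineering lead; no tool call involved."""
    for person in json.loads(text):
        if person["team"] == team and person["role"] == "Engineering Lead":
            return person["name"]
    return None


def notify_request(recipient: str, body: str) -> dict:
    """Step 2 (tool): build a tools/call request for a hypothetical sendMessage tool."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "sendMessage", "arguments": {"to": recipient, "text": body}},
    }


lead = find_lead(directory_text, "Mobile App")
request = notify_request(lead, "Heads-up: the mobile app project plan was updated.")
```

Only the second step changes anything in the outside world, so only that step would go through the host's tool-approval flow.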

Resources are ideal for static or semi-static information, ensuring that models can access rich context without performing unnecessary operations.

3. Prompts: Reusable templates for your server

Prompts are predefined instruction templates your server provides to clients. They’re designed to standardize how models perform common tasks and to save developers from repeatedly crafting instructions.

Prompts help ensure consistency and best practices across teams and applications using your MCP server.

MCP Servers define a prompt template with variables that clients fill in dynamically. The clients retrieve these prompts and use them to guide the model during inference.

Prompts can be versioned and updated centrally without changing client code. They are explicitly invoked by the user or client; they don’t run automatically. They can also be context-aware, dynamically referencing available resources and tools to build more complete and efficient workflows.

For example, this is a prompt for an AI-powered travel assistant:
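The original example is not reproduced here. The sketch below shows a prompt definition shaped like a prompts/list entry, with named arguments, and a toy prompts/get handler that fills in the template and returns messages for the model. The prompt name, arguments, template wording, and default value are hypothetical. MCP passes prompt argument values as strings, which is why `days` is `"3"` rather than `3`.

```python
# Hypothetical prompt definition, shaped like an entry in a prompts/list result.
PLAN_TRIP_PROMPT = {
    "name": "plan_trip",
    "title": "Plan a trip",
    "description": "Draft a day-by-day itinerary for a destination",
    "arguments": [
        {"name": "destination", "description": "City or region", "required": True},
        {"name": "days", "description": "Trip length in days", "required": True},
        {"name": "interests", "description": "Comma-separated interests", "required": False},
    ],
}

_TEMPLATE = (
    "Plan a {days}-day trip to {destination}. "
    "Focus on these interests: {interests}. "
    "Use the available travel tools to check prices before suggesting bookings."
)


def get_prompt(arguments: dict) -> dict:
    """Sketch of a prompts/get handler: check required arguments, then fill the template."""
    for arg in PLAN_TRIP_PROMPT["arguments"]:
        if arg["required"] and arg["name"] not in arguments:
            raise ValueError(f"missing required argument: {arg['name']}")
    values = {"interests": "general sightseeing", **arguments}  # default for the optional argument
    return {
        "description": PLAN_TRIP_PROMPT["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": _TEMPLATE.format(**values)}}],
    }
```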

Bringing it all together: Multi-server coordination

The real power of MCP shines when multiple servers, tools, resources, and prompts work seamlessly together. Let’s see how we can combine tools, resources, and prompts to build a powerful workflow.

Scenario: Company offsite planning

Consider an AI assistant that helps you plan a company offsite. The assistant is connected to three MCP servers:

- Conference Server: Handles venue booking, catering, and attendee registration.

- Travel Server: Manages flights and hotels for participants.

- Calendar/Email Server: Organizes schedules and sends communications.

1. User starts with a prompt

The user invokes a prompt to organize a company offsite event:
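The original prompt is not reproduced here. The sketch below assumes the prompt is exposed by the Conference Server and shows the prompts/get request the host could send when the user picks it. The prompt name and its argument names and values are hypothetical. The helper checks one real constraint: prompt argument values are strings.

```python
# Hypothetical prompts/get request for an "organize offsite" prompt.
offsite_prompt_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "prompts/get",
    "params": {
        "name": "plan_company_offsite",  # hypothetical prompt name
        "arguments": {"month": "March 2025", "attendees": "50"},
    },
}


def arguments_are_strings(request: dict) -> bool:
    """MCP prompt arguments map names to string values; numbers must be sent as text."""
    args = request["params"].get("arguments", {})
    return all(isinstance(v, str) for v in args.values())
```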

This prompt sets the high-level intention for the entire workflow.

2. Selecting resources

To provide context, the user selects relevant resources:

- calendar://company-calendar/March-2025 (from Calendar Server): Existing scheduled events.

- conference://preferences/venues (from Conference Server): Past venue preferences.

- travel://corporate-policies (from Travel Server): Company travel guidelines.
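Each resource belongs to a different server, so the host sends one resources/read request through each server's client. A minimal sketch, assuming a simple mapping from server to URI (the server keys and request ids are illustrative):

```python
# The three resources the user selected, keyed by the server that owns each one.
SELECTED = {
    "calendar": "calendar://company-calendar/March-2025",
    "conference": "conference://preferences/venues",
    "travel": "travel://corporate-policies",
}


def read_requests(selected: dict) -> dict:
    """Build one resources/read request per server; each client talks to exactly one server."""
    return {
        server: {"jsonrpc": "2.0", "id": i, "method": "resources/read", "params": {"uri": uri}}
        for i, (server, uri) in enumerate(selected.items(), start=1)
    }


reads = read_requests(SELECTED)
```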

These resources help the assistant understand preferences, constraints, and availability.

3. AI gathers context

- Reads the company calendar to avoid schedule conflicts.

- Uses venue preferences to understand past feedback (e.g., “prefer seaside locations”).

- Consults corporate travel policies for flight and hotel booking rules.

4. Coordinated tool execution

With context established, the AI requests user approval to execute a series of tools:

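- Conference Server: venue booking, catering, and attendee registration.

- Travel Server: flight and hotel bookings for participants.

- Calendar/Email Server: scheduling and attendee communications.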
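Behind the scenes, the host has to send each approved call to the client connected to the server that advertised that tool in its tools/list response. A minimal sketch of that routing follows. All tool names and the server keys are hypothetical.

```python
from collections import defaultdict

# Hypothetical tool names; the host records which server advertised each one.
TOOL_OWNER = {
    "search_venues": "conference",
    "book_catering": "conference",
    "register_attendees": "conference",
    "search_flights": "travel",
    "book_hotels": "travel",
    "create_event": "calendar_email",
    "send_invitations": "calendar_email",
}


def route(calls):
    """Group approved (tool_name, arguments) pairs by the server that must execute them."""
    batches = defaultdict(list)
    for name, args in calls:
        batches[TOOL_OWNER[name]].append(
            {"method": "tools/call", "params": {"name": name, "arguments": args}}
        )
    return dict(batches)
```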

5. Unified plan and user confirmation

The AI:

- Compiles flight options, venue availability, hotel suggestions, and catering menus.

- Presents a single, unified plan to the user for review.

- Once approved, automatically books venues, hotels, and flights, adds the event to calendars, and sends attendee emails.

This flow demonstrates:

- Prompts initiating a complex, multi-server workflow.

- Resources providing contextual data for informed decision-making.

- Tools executing concrete actions across different servers.

Together, these MCP features create a coordinated, end-to-end planning experience where the user retains full control of every step.

Part 2: Building MCP clients

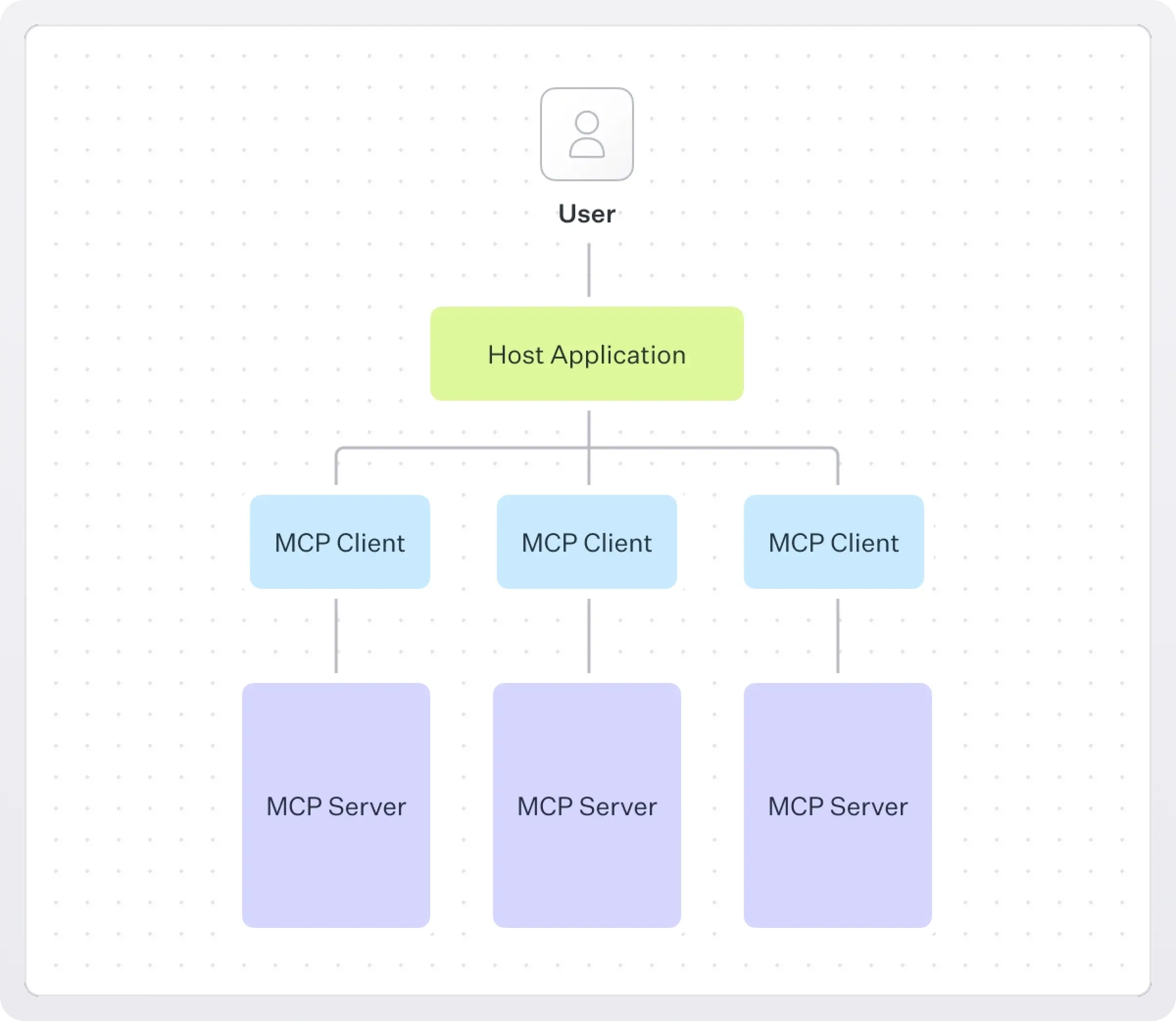

When building an MCP client, you're creating the component that connects directly to an MCP server. But clients don’t exist on their own; they are instantiated by a host application, like a code editor or a browser extension.

The host application manages the overall user experience:

- It handles the user interface, session memory, and prompt orchestration.

- It can coordinate multiple clients, each connected to a different server.

Meanwhile, each client is a protocol-level component that maintains one-on-one communication with a single server. Its job is to:

- Discover tools, resources, and prompts exposed by the server

- Run model completions on the server’s behalf (sampling) and tell the server which filesystem locations are in scope (roots)

- Elicit missing information when needed

Understanding this distinction is crucial:

- The host is what users interact with

- The client is what enables that host to talk to specific servers through MCP

The next sections explain how to build smart clients using Sampling, Roots, and Elicitation, three features that help optimize context, organize data, and manage interactive flows.
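A client advertises which of these features it supports in the capabilities it sends during initialization, and a server should only use the ones the client declared. A minimal sketch, assuming protocol revision 2025-06-18 (the revision that added elicitation). The `clientInfo` values and the `server_may_request` helper are hypothetical.

```python
# Sketch of the initialize request an MCP client sends when it connects.
initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",
        "capabilities": {
            "roots": {"listChanged": True},  # exposes roots and will notify when they change
            "sampling": {},  # can run model completions for the server
            "elicitation": {},  # can ask the user for more input
        },
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
    },
}


def server_may_request(method: str, capabilities: dict) -> bool:
    """Server-side check: only send client-feature requests the client declared support for."""
    needed = {
        "sampling/createMessage": "sampling",
        "roots/list": "roots",
        "elicitation/create": "elicitation",
    }
    return needed.get(method) in capabilities
```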

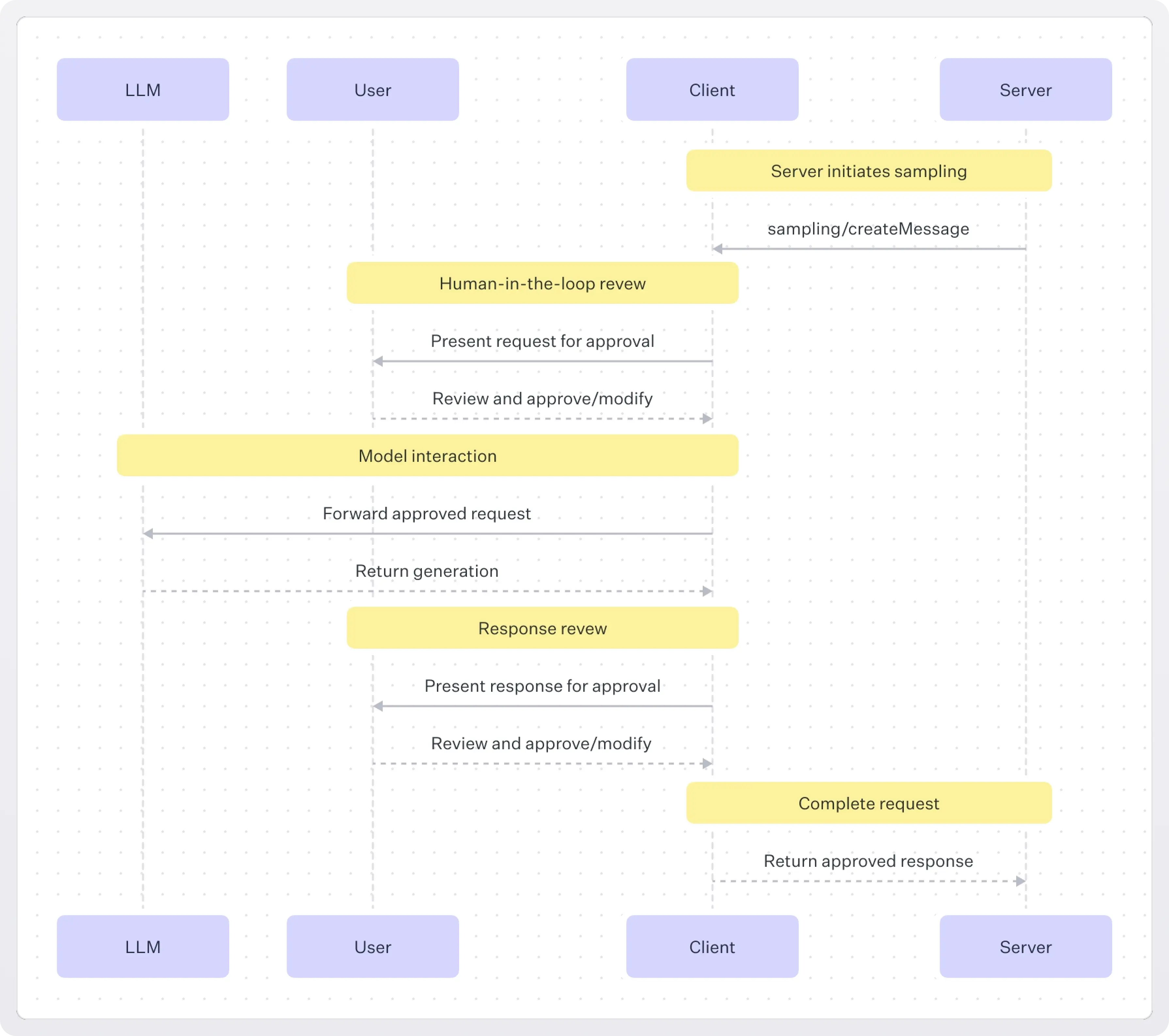

1. Sampling: Letting MCP servers use your AI model safely

Sampling allows an MCP client to run model completions on behalf of a server, so that the server can offload AI reasoning without directly integrating or paying for a model API.

This feature is especially powerful for agentic workflows where a tool (on the server) needs model-generated output to complete its task. Instead of every MCP server needing its own AI model connection (which is expensive and complex), it can say: “Hey client, you already have access to Claude or GPT, can you run this piece of reasoning for me and give me the result?”

This is how a sampling flow works:

- The server initiates a sampling request to the client.

- The client asks the user to approve or edit the request and later review the model’s response.

- The completion happens within the client’s model context (e.g., Claude or GPT), not on the server.

- The server gets back only the user-approved result.
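The flow above can be sketched as a client-side handler for sampling/createMessage with two approval points. The three callbacks are hypothetical hooks into the host's UI and model integration. The model name and the error code in this sketch are illustrative placeholders.

```python
from typing import Callable, Optional


def handle_sampling_request(
    params: dict,
    approve_request: Callable[[dict], Optional[dict]],
    run_model: Callable[[dict], str],
    approve_response: Callable[[str], Optional[str]],
) -> dict:
    """Process one sampling request with the user in the loop.

    approve_request: shows the request; returns (possibly edited) params, or None to reject.
    run_model: calls the model the client already uses (hypothetical hook).
    approve_response: shows the completion; returns (possibly edited) text, or None to reject.
    """
    edited = approve_request(params)
    if edited is None:
        return {"error": {"code": -1, "message": "User rejected sampling request"}}
    text = run_model(edited)  # runs inside the client; the server never calls the model
    final = approve_response(text)
    if final is None:
        return {"error": {"code": -1, "message": "User rejected sampling response"}}
    return {
        "result": {
            "role": "assistant",
            "content": {"type": "text", "text": final},
            "model": "example-model",  # hypothetical model identifier
            "stopReason": "endTurn",
        }
    }
```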

This approach maintains strong security and context isolation:

- Servers never see the full conversation or call the model directly.

- Clients enforce user approval, rate limits, and data validation.

Example: Personal activity planner

- The travel server fetches a long list of activities for Rome: museums, tours, restaurants, and events.

- It wants to recommend the top 5 activities based on the user’s interests (history, art, food) but doesn’t have AI logic built in.

- It sends a sampling request to the client:

- The client prompts the user: “The server wants to use your AI model to rank activities for your preferences. Approve?”

- After approval, the client runs the task through the model (e.g., Claude).

- The user reviews the AI’s top 5 recommendations before they’re sent back to the server.
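The original request is not reproduced here. Below is a sketch of what the travel server's sampling/createMessage request could contain, plus how it might parse the approved answer. The message fields (`messages`, `systemPrompt`, `modelPreferences`, `includeContext`, `maxTokens`) follow the MCP sampling request format. The activity list, prompt wording, and preference values are hypothetical.

```python
import json

# Hypothetical list of Rome activities the travel server fetched.
activities = ["Colosseum tour", "Vatican Museums", "Trastevere food walk",
              "Borghese Gallery", "Opera at Caracalla", "Vespa ride"]

sampling_request = {
    "jsonrpc": "2.0",
    "id": 42,
    "method": "sampling/createMessage",
    "params": {
        "messages": [{
            "role": "user",
            "content": {
                "type": "text",
                "text": "Rank these Rome activities for a traveler interested in history, art, "
                        "and food. Return the top 5 as a JSON array of names.\n" + json.dumps(activities),
            },
        }],
        "systemPrompt": "You are a concise travel recommender.",
        "modelPreferences": {"hints": [{"name": "claude"}], "intelligencePriority": 0.6, "speedPriority": 0.4},
        "includeContext": "none",  # the server asks for no extra conversation context
        "maxTokens": 300,
    },
}


def top_five(result: dict) -> list:
    """Parse the user-approved completion, keeping only activities the server actually offered."""
    ranked = json.loads(result["content"]["text"])
    return [name for name in ranked if name in activities][:5]
```

Filtering against the server's own list is a defensive choice: the reply passed through a model and a human edit, so the server shouldn't assume it contains only known items.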

2. Roots: Defining filesystem boundaries

Roots are how MCP clients tell servers which parts of the filesystem they can access. Instead of giving a server unrestricted access to every file, clients define safe, specific directories called “roots.”

Roots act like workspaces or boundaries; they guide servers to the relevant files while ensuring security and user control.

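Each root is a file URI (e.g., file:///Users/agent/travel-planning). Servers request the current roots from the client (via roots/list), and the list can change when users open new folders. Servers are expected to read, write, or search files only within these boundaries. Roots communicate intent rather than technically enforce it, so a well-behaved server does not access anything outside the defined roots.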

Clients can dynamically update the roots list and notify servers when users switch projects or folders.
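That exchange consists of three messages: the server's roots/list request, the client's answer, and the notification the client sends when the list changes. A notification carries no id and expects no reply, so the server follows up with a fresh roots/list. A minimal sketch follows. The root name and the `on_client_message` helper are hypothetical.

```python
from typing import Optional

# Server -> client: which roots are currently exposed?
roots_list_request = {"jsonrpc": "2.0", "id": 3, "method": "roots/list"}

# Client -> server: the current roots, each identified by a file:// URI.
roots_list_result = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {"roots": [{"uri": "file:///Users/agent/travel-planning", "name": "Travel planning"}]},
}

# Client -> server when the user opens or closes a folder (a notification: no id).
roots_changed = {"jsonrpc": "2.0", "method": "notifications/roots/list_changed"}


def on_client_message(msg: dict, next_id: int) -> Optional[dict]:
    """Server-side sketch: re-fetch roots whenever the client says they changed."""
    if msg.get("method") == "notifications/roots/list_changed":
        return {"jsonrpc": "2.0", "id": next_id, "method": "roots/list"}
    return None
```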

Example: Business conference organizer

An event planner uses an MCP-powered assistant to manage conferences. Their filesystem is organized like this:

- file:///Users/planner/conference-2025 → Main workspace for current event

- file:///Users/planner/vendor-contracts → Catering, venue, and vendor agreements

- file:///Users/planner/marketing-assets → Brochures, banners, and logos

The MCP client defines these as roots:
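The original listing is not reproduced here. The sketch below shows the roots the client could return from roots/list for these three folders (the display names are assumptions). It also includes a helper a well-behaved server might run before touching a file. The helper is hypothetical: it collapses `..` segments lexically but does not resolve symlinks, which a production check would also need to do.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse

# Roots the client could expose for the planner's folders (display names are illustrative).
PLANNER_ROOTS = [
    {"uri": "file:///Users/planner/conference-2025", "name": "Conference 2025"},
    {"uri": "file:///Users/planner/vendor-contracts", "name": "Vendor contracts"},
    {"uri": "file:///Users/planner/marketing-assets", "name": "Marketing assets"},
]


def _file_path(uri: str) -> PurePosixPath:
    """Convert a file:// URI to a normalized path ('..' collapsed lexically)."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        raise ValueError(f"not a file URI: {uri}")
    return PurePosixPath(posixpath.normpath(unquote(parsed.path)))


def within_roots(uri: str, roots: list) -> bool:
    """True if `uri` is one of the roots or lies beneath one (symlinks are not resolved)."""
    target = _file_path(uri)
    for root in roots:
        base = _file_path(root["uri"])
        if target == base or base in target.parents:
            return True
    return False
```

Comparing whole path components instead of string prefixes keeps a sibling folder like `conference-2025-old` from passing as part of `conference-2025`.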

With these boundaries, the MCP server can:

- Save updated attendee lists to conference-2025.

- Access vendor agreements to automatically extract payment deadlines.

- Use marketing materials to prepare a promotional kit.

A well-behaved server does not access unrelated files outside these roots, such as personal documents or other projects.

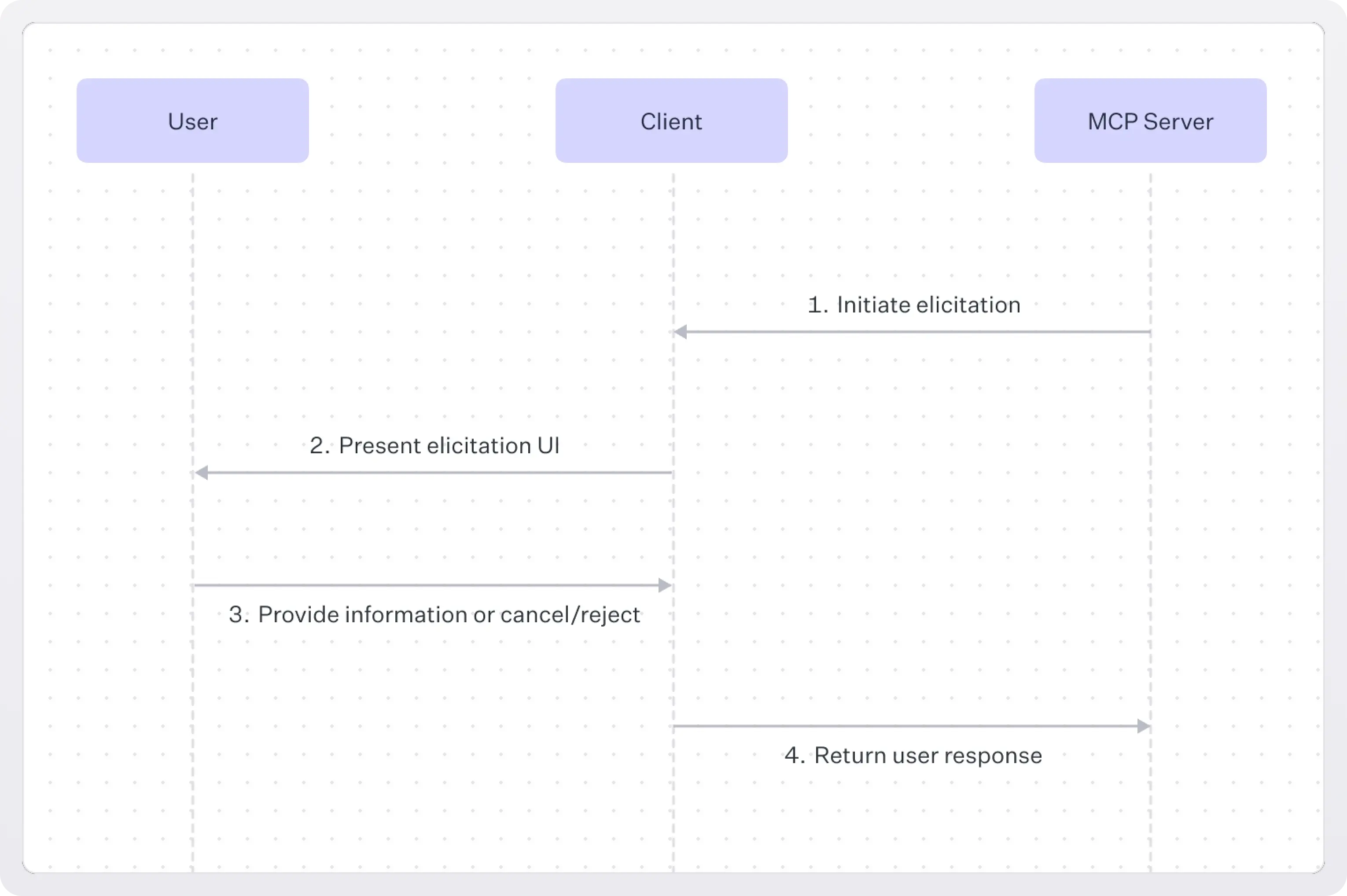

3. Elicitation: Request user input at runtime

MCP elicitation is a mechanism that lets a server request missing or additional information from the user, through the client, during a session. This is especially useful when the server’s logic depends on a value that hasn’t been resolved yet, such as the current user, a timezone, or an organization name.

Instead of hardcoding all context up front or relying on guesswork, elicitation gives the server a formal way to say: "I need more information before I can continue accurately. Please provide it."

Note: Since this article was published, the MCP spec has introduced a new elicitation mode, URL-mode elicitation, to handle user interactions that cannot safely happen inside the MCP client. For more, see Understanding URL-mode elicitation in MCP.

Here’s how the elicitation flow works:

- The server detects a missing or ambiguous piece of information and sends an elicitation/create request.

- The client presents the request to the user. The client should allow users to review and modify their responses before sending, and also to decline to provide the information or cancel the request.

- The client sends back a response whose action is accept, decline, or cancel. If the user accepts, the response includes the information they provided.

- The MCP server continues processing using the new information.
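On the client side, these steps map the user's choice onto the three response actions. In the sketch below, `ask_user` is a hypothetical UI hook that renders the message plus a form built from `requestedSchema`. The `"submit"`, `"decline"`, and `"dismiss"` choice labels are also assumptions.

```python
from typing import Callable, Tuple


def handle_elicitation(params: dict, ask_user: Callable[[str, dict], Tuple[str, dict]]) -> dict:
    """Client-side sketch of elicitation/create.

    ask_user shows params["message"] and a form for params["requestedSchema"], then
    returns (choice, data) with choice in {"submit", "decline", "dismiss"} (hypothetical).
    """
    schema = params["requestedSchema"]
    choice, data = ask_user(params["message"], schema)
    if choice == "submit":
        missing = [k for k in schema.get("required", []) if k not in data]
        if missing:
            raise ValueError(f"form incomplete: {missing}")  # a real UI would re-prompt instead
        return {"action": "accept", "content": data}
    if choice == "decline":
        return {"action": "decline"}  # user explicitly refused to share the information
    return {"action": "cancel"}  # user dismissed the dialog without choosing
```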

Example: Conference catering order

An event planning server is helping book catering for a large conference. A user starts a booking:
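The original request is not reproduced here. A plausible shape is a tools/call to a hypothetical `book_catering` tool whose arguments say nothing about meals. The tool name, argument values, and the server's `REQUIRED_FOR_ORDER` list are all assumptions made for this sketch.

```python
# Hypothetical tools/call sent when the user starts the catering booking.
start_booking = {
    "jsonrpc": "2.0",
    "id": 11,
    "method": "tools/call",
    "params": {
        "name": "book_catering",
        "arguments": {"eventId": "conf-2025", "headcount": 300, "date": "2025-03-12"},
    },
}

REQUIRED_FOR_ORDER = ("mealType",)  # hypothetical: details the server needs to place an order


def missing_details(arguments: dict) -> list:
    """List the details the server still needs before it can place the order."""
    return [k for k in REQUIRED_FOR_ORDER if k not in arguments]
```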

While processing, the server realizes it’s missing meal preferences and dietary restrictions. Instead of failing, it sends an elicitation request:
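The original request is not reproduced here. The sketch below shows an elicitation/create request with a flat `requestedSchema`, marking the meal type as required and dietary restrictions as optional, and a server-side check on the reply. The enum values other than "buffet" are hypothetical, and `accept_content` is an illustrative helper that covers only required keys and enum membership.

```python
from typing import Optional

elicitation_request = {
    "jsonrpc": "2.0",
    "id": 12,
    "method": "elicitation/create",
    "params": {
        "message": "To complete catering booking, select a meal type and optionally add dietary restrictions.",
        "requestedSchema": {
            "type": "object",
            "properties": {
                "mealType": {"type": "string", "title": "Meal type", "enum": ["buffet", "plated", "boxed"]},
                "dietaryRestrictions": {"type": "string", "title": "Dietary restrictions"},
            },
            "required": ["mealType"],
        },
    },
}


def accept_content(response: dict, schema: dict) -> Optional[dict]:
    """Return the user's data only for an 'accept' that passes basic schema checks."""
    if response.get("action") != "accept":
        return None  # declined or cancelled: stop or fall back, but don't guess
    content = response.get("content", {})
    if any(key not in content for key in schema.get("required", [])):
        return None
    for key, prop in schema["properties"].items():
        if key in content and "enum" in prop and content[key] not in prop["enum"]:
            return None
    return content
```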

The client shows a UI prompt: “To complete catering booking, select a meal type and optionally add dietary restrictions.”

After the user responds (e.g., buffet, vegetarian), the server continues the booking and finalizes the catering order.

Conclusion

MCP opens up a new world where AI models can securely and intelligently interact with external systems.

- Server-side features: Tools, Resources, and Prompts let you expose powerful capabilities in a structured, reusable way.

- Client-side features: Sampling, Roots, and Elicitation ensure that these capabilities are used efficiently, safely, and with the user always in control.

By understanding how these six features fit together, you can design seamless integrations where models can act as capable assistants while maintaining privacy, security, and a human-in-the-loop approach.

As MCP adoption grows, these features will become the building blocks for AI applications that are not only smart but also trustworthy and scalable.