What is Arcade.dev? An LLM tool calling platform

Large Language Models (LLMs) excel at producing text, but many applications need them to do more: open GitHub issues, star a repository, or send SMS messages through Twilio in real time.

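These actions rely on a pattern called tool calling. The LLM decides that an external API, service, or framework should be invoked and emits a structured request describing that call. The application (or a platform acting on its behalf) then executes the request and returns the result to the model.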
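To make the pattern concrete, here is a minimal standard-library sketch. It describes a tool in the JSON-schema `tools` format used by OpenAI's Chat Completions API and decodes a tool call of the shape the model returns. The `get_open_issues` tool, the `octocat/awesome-project` repository, and the sample tool call are all hypothetical; in a real exchange the tool call would come from the model's response.

```python
import json

# A tool description in the Chat Completions "function" tool format.
# The tool name and its parameters are hypothetical, for illustration only.
GET_OPEN_ISSUES_TOOL = {
    "type": "function",
    "function": {
        "name": "get_open_issues",
        "description": "List open issues in a GitHub repository.",
        "parameters": {
            "type": "object",
            "properties": {
                "repo": {"type": "string", "description": "Repository as owner/name"},
            },
            "required": ["repo"],
        },
    },
}

# The model does not call the API itself: it replies with a tool call whose
# arguments are a JSON-encoded string. This sample mimics that shape.
sample_tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {
        "name": "get_open_issues",
        "arguments": '{"repo": "octocat/awesome-project"}',
    },
}


def decode_tool_call(tool_call: dict) -> tuple[str, dict]:
    """Return the requested tool's name and its parsed arguments."""
    fn = tool_call["function"]
    return fn["name"], json.loads(fn["arguments"])


name, args = decode_tool_call(sample_tool_call)
print(name, args)  # get_open_issues {'repo': 'octocat/awesome-project'}
```

The application, not the model, is responsible for running `get_open_issues` with those arguments and sending the result back.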

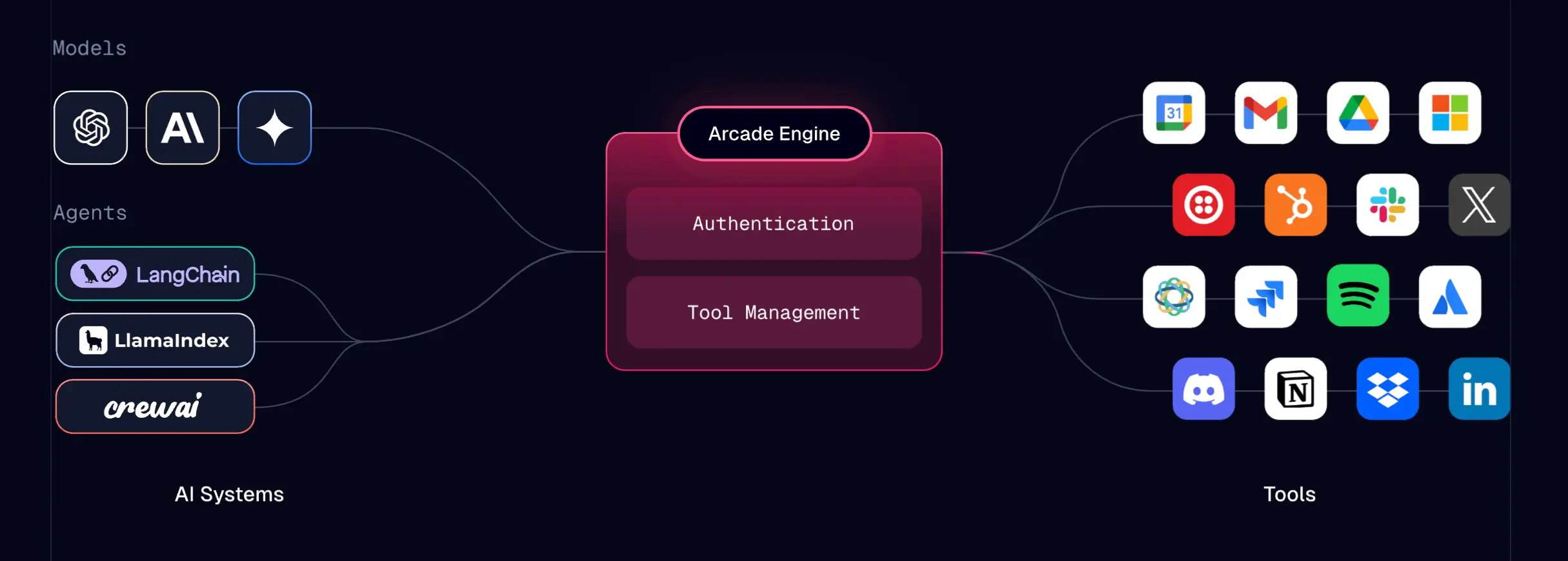

This article examines Arcade.dev, a tool calling platform that simplifies authentication and tool management for application developers.

The OpenAI API spec: enabling model and service interop

Before we can understand Arcade.dev, we need to understand the OpenAI API specification, the de facto standard interface for LLM APIs. You can read a good breakdown of how the spec works here.

Projects like DeepSeek leverage this standard to offer a drop-in alternative to OpenAI. Developers can swap in DeepSeek’s endpoint with minimal code changes, preserving compatibility with tooling, libraries, and frameworks that expect the OpenAI interface.
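Because both services speak the same protocol, the request body does not change when you switch providers; only the base URL, API key, and model name do. The sketch below builds (but does not send) a Chat Completions request with the standard library to illustrate this. The base URLs and model names in `PROVIDERS` are assumptions to verify against each provider's documentation, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumed (base_url, model) pairs; confirm against each provider's docs.
PROVIDERS = {
    "openai": ("https://api.openai.com/v1", "gpt-4o-mini"),
    "deepseek": ("https://api.deepseek.com", "deepseek-chat"),
}


def build_chat_request(provider: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style Chat Completions request without sending it."""
    base_url, model = PROVIDERS[provider]
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


a = build_chat_request("openai", "sk-test", "Hello")
b = build_chat_request("deepseek", "sk-test", "Hello")
print(a.full_url)  # https://api.openai.com/v1/chat/completions
print(b.full_url)  # https://api.deepseek.com/chat/completions
```

Passing either request to `urllib.request.urlopen` would send it; the path, headers, and message format are identical for both providers.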

Similarly, Arcade.dev takes advantage of the same OpenAI API spec.

By pointing your LLM requests at Arcade’s URL and API key instead of OpenAI’s, Arcade can transparently proxy calls to the model and intercept function (tool) calls when they occur.

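```python
import os

from openai import OpenAI

API_KEY = os.environ.get("ARCADE_API_KEY")

client = OpenAI(
    base_url="https://api.arcade-ai.com",  # Arcade's endpoint
    api_key=API_KEY,
)
```

With this change, your AI agent can invoke external services such as GitHub, Twilio, Slack, or Gmail behind a unified API layer, keeping your code clean.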

Why tool calling matters

Clean codebase

Traditional AI integrations often scatter logic throughout the code. A centralized approach keeps your AI prompts and application logic separate from the details of each service’s API.

Reduced errors

Handling multiple APIs manually can lead to brittle, ad-hoc code. A specialized tool-calling layer centralizes these calls, making maintenance simpler and less error-prone.

Security and unified auth

Managing OAuth tokens or credentials is tedious. A tool-calling framework enforces consistent authentication policies across all integrated services.
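As a rough illustration of what unified auth can mean in practice, the sketch below routes every token lookup through one component that decides when to refresh. `TokenBroker`, `Token`, and `fake_refresh` are hypothetical names, not Arcade's API; a real refresh function would exchange a refresh token at the provider's OAuth token endpoint.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Token:
    access_token: str
    expires_at: float  # Unix timestamp


class TokenBroker:
    """Hypothetical central token store keyed by (user_id, provider)."""

    def __init__(self, refresh: Callable[[str, str], Token], skew: float = 60.0):
        self._refresh = refresh
        self._skew = skew  # refresh slightly before the real expiry
        self._tokens: dict[tuple[str, str], Token] = {}

    def get_token(self, user_id: str, provider: str) -> str:
        key = (user_id, provider)
        token = self._tokens.get(key)
        # Fetch a new token if none is cached or the cached one is about to expire
        if token is None or token.expires_at - self._skew <= time.time():
            token = self._refresh(user_id, provider)
            self._tokens[key] = token
        return token.access_token


refresh_calls = []


def fake_refresh(user_id: str, provider: str) -> Token:
    # Stub standing in for an OAuth refresh-token exchange
    refresh_calls.append((user_id, provider))
    return Token(f"{provider}-token-{len(refresh_calls)}", time.time() + 3600)


broker = TokenBroker(fake_refresh)
broker.get_token("user-1", "github")
broker.get_token("user-1", "github")  # served from the cache
broker.get_token("user-1", "twilio")
print(len(refresh_calls))  # 2
```

Because tools only ever call `get_token`, expiry and refresh policy live in one place instead of being reimplemented per integration.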

Extensibility

As your project grows, you can add or update integrations in a standard format. Your core logic remains the same, even if new features or services are introduced.
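Extensibility of this kind usually comes down to a registry: each integration registers under a name, and a single dispatcher routes calls to it. The sketch below uses a hypothetical `register` decorator, hypothetical tool names, and stub tools (not Arcade's SDK) to show that adding an integration does not touch the dispatch logic.

```python
import json
from typing import Any, Callable

# Hypothetical registry mapping tool names to Python callables
TOOLS: dict[str, Callable[..., Any]] = {}


def register(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that adds a function to the registry under `name`."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return decorator


@register("GitHub.CreateIssue")
def create_issue(repo: str, title: str) -> dict:
    # Stub: a real tool would call the GitHub REST API
    return {"repo": repo, "title": title, "number": 1}


@register("Twilio.SendSms")
def send_sms(to: str, body: str) -> dict:
    # Stub: a real tool would call the Twilio Messages API
    return {"to": to, "status": "queued"}


def dispatch(name: str, arguments_json: str) -> str:
    """Run a registered tool with JSON arguments and return a JSON result."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**json.loads(arguments_json))
    return json.dumps(result)


print(dispatch("Twilio.SendSms", '{"to": "+15550100", "body": "Done"}'))
# {"to": "+15550100", "status": "queued"}
```

A new integration is one more decorated function; `dispatch` stays unchanged.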

Example: Python tool calling

Below is an official snippet from Arcade’s docs showing how an LLM prompt can trigger a tool call to send an email:

```python
import os

from openai import OpenAI

USER_ID = "unique_user_id"
PROMPT = (
    "Send an email to jane.doe@example.com with the subject "
    "'Meeting Update' and body 'The meeting is rescheduled to 3 PM.'"
)
TOOL_NAME = "Google.SendEmail"

API_KEY = os.environ.get("ARCADE_API_KEY")

client = OpenAI(
    base_url="https://api.arcade-ai.com",  # Arcade's endpoint
    api_key=API_KEY,
)

response = client.chat.completions.create(
    messages=[
        {"role": "user", "content": PROMPT},
    ],
    model="gpt-4o-mini",
    user=USER_ID,
    tools=[TOOL_NAME],
    tool_choice="auto",
)

print(response.choices[0].message.content)
```

When the user prompt indicates “Send an email…,” Arcade sees that the model wants to invoke the Google.SendEmail tool.

It then handles the logic (and OAuth tokens) for sending that email and returns a final text response acknowledging the action.
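Arcade performs this round trip on its side. To show what intercepting a tool call involves, here is a simplified sketch of the loop a proxy or application runs, using the Chat Completions message shapes: the assistant message carries `tool_calls`, each result goes back as a `"tool"` message with the matching `tool_call_id`, and the model is called again. `fake_model` and `send_email` are hypothetical stubs standing in for the LLM and the email integration; this is not Arcade's implementation.

```python
import json


def send_email(recipient: str, subject: str, body: str) -> str:
    # Hypothetical stub standing in for a real email integration
    return f"Email sent to {recipient}"


TOOLS = {"Google.SendEmail": send_email}


def fake_model(messages: list[dict]) -> dict:
    """Stub LLM: requests a tool call first, then summarizes the tool result."""
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": f"Done: {messages[-1]['content']}"}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "Google.SendEmail",
                "arguments": json.dumps({
                    "recipient": "jane.doe@example.com",
                    "subject": "Meeting Update",
                    "body": "The meeting is rescheduled to 3 PM.",
                }),
            },
        }],
    }


def run_with_tools(messages: list[dict], max_rounds: int = 5) -> str:
    """Call the model, execute any requested tools, and repeat until it answers."""
    for _ in range(max_rounds):
        reply = fake_model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls")
        if not tool_calls:
            return reply["content"]
        for call in tool_calls:
            fn = TOOLS[call["function"]["name"]]
            result = fn(**json.loads(call["function"]["arguments"]))
            # Each result is tied back to its request by tool_call_id
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    raise RuntimeError("model did not produce a final answer")


history = [{
    "role": "user",
    "content": "Send an email to jane.doe@example.com with the subject "
               "'Meeting Update' and body 'The meeting is rescheduled to 3 PM.'",
}]
print(run_with_tools(history))  # Done: Email sent to jane.doe@example.com
```

The `max_rounds` cap is a design choice that keeps a misbehaving model from looping on tool calls indefinitely.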

Real-world scenario: GitHub and Twilio

To see why this is powerful, consider a contrived scenario where you want an LLM agent to:

- Fetch details from a user’s GitHub repos (e.g., open issues, pull requests).

- Create a new GitHub issue if the conversation requires it.

- Send an SMS notification via Twilio to confirm the action.

Arcade.dev toolkits for GitHub and Twilio provide ready-made integrations. You pass the relevant tool names, like CreateIssue or SendSms, in the request's tools list (as in the email example above), and Arcade takes care of authentication (e.g., GitHub OAuth or Twilio tokens), rate limits, and request formatting.
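For intuition about what handling rate limits can involve, here is a generic retry-with-exponential-backoff sketch of the kind of logic a tool-calling layer can take off your hands. It illustrates the general technique, not Arcade's code; `RateLimitError`, `with_backoff`, and `flaky_send_sms` are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error raised when an API responds with HTTP 429."""


def with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry fn on RateLimitError, doubling the delay each time and adding jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))


attempts = []


def flaky_send_sms() -> str:
    # Fails twice with a rate-limit error, then succeeds
    attempts.append(1)
    if len(attempts) < 3:
        raise RateLimitError("429 Too Many Requests")
    return "sent"


print(with_backoff(flaky_send_sms, sleep=lambda s: None))  # sent
```

The `sleep` parameter is injectable so the retry schedule can be tested without real waiting; jitter spreads out retries from many clients that hit the limit at the same moment.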

Your AI logic remains minimal:

1. User: “Check the ‘awesome-project’ repo for open pull requests.”

2. AI (internally): Calls ListPullRequests from the GitHub toolkit.

3. AI: “Found 2 open PRs.”

4. User: “Create an issue for that memory leak, then text me a confirmation.”

5. AI (internally): Calls CreateIssue in GitHub. Calls SendSms in Twilio.

6. AI: “Issue created and SMS sent!”

No scattered credentials or custom wrappers are needed: Arcade orchestrates everything through a single interface.

Defining a custom tool

If you need something not covered by an existing toolkit (e.g., an internal API), you can define your own tool in Python, and Arcade will still handle auth tokens and refresh logic. For instance, the following tool posts to X (Twitter) using Arcade's X auth provider:

```python
from typing import Annotated

import httpx
from arcade.sdk import ToolContext, tool
from arcade.sdk.auth import X


@tool(
    requires_auth=X(
        scopes=["tweet.read", "tweet.write", "users.read"],
    )
)
async def post_tweet(
    context: ToolContext,
    tweet_text: Annotated[str, "The text of the tweet you want to post"],
) -> Annotated[str, "A confirmation message with the new tweet's ID"]:
    """Post a tweet to X (Twitter)."""
    url = "https://api.x.com/2/tweets"
    # Use the OAuth token Arcade obtained for this user via the X auth provider
    headers = {
        "Authorization": f"Bearer {context.authorization.token}",
        "Content-Type": "application/json",
    }
    payload = {"text": tweet_text}

    async with httpx.AsyncClient() as client:
        response = await client.post(url, headers=headers, json=payload)
        # Raise on 4xx/5xx so failures surface instead of passing silently
        response.raise_for_status()

    # X API v2 returns the created tweet as {"data": {"id": ..., "text": ...}}
    tweet_id = response.json()["data"]["id"]
    return f"Tweet posted with ID {tweet_id}"
```

Once deployed, the AI can call post_tweet exactly like a built-in integration, enabling a wide range of custom scenarios.
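The Annotated descriptions in the tool's signature are what let a framework describe the tool to the model. As a hypothetical illustration of that idea (not how Arcade's @tool decorator is actually implemented), this sketch reads a function's hints with `typing.get_type_hints(..., include_extras=True)` and emits an OpenAI-style function schema. It skips a `context` parameter, mirroring how ToolContext is injected by the platform rather than supplied by the model. `schema_from_function` and the small `JSON_TYPES` mapping are assumptions made for this example.

```python
import inspect
from typing import Annotated, get_args, get_origin, get_type_hints

# Assumed subset of Python-to-JSON-Schema type mappings
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def schema_from_function(fn) -> dict:
    """Build an OpenAI-style function schema from Annotated parameter hints."""
    hints = get_type_hints(fn, include_extras=True)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        if name == "context":  # injected by the framework, not chosen by the model
            continue
        hint, description = hints[name], ""
        if get_origin(hint) is Annotated:
            hint, description = get_args(hint)[0], get_args(hint)[1]
        properties[name] = {"type": JSON_TYPES[hint], "description": description}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def post_tweet(context, tweet_text: Annotated[str, "The text of the tweet you want to post"]):
    """Post a tweet to X (Twitter)."""


print(schema_from_function(post_tweet)["function"]["parameters"]["properties"])
# {'tweet_text': {'type': 'string', 'description': 'The text of the tweet you want to post'}}
```

Keeping descriptions next to the parameters means the schema the model sees cannot drift out of sync with the function's actual signature.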

Deploying locally via Docker

Arcade is designed to run in a range of environments. The docs include examples of packaging your application with Docker, as well as installing and running the Arcade Engine locally via Homebrew on macOS or Debian packages on Linux.

Here’s how you could run the Arcade Engine from the project repository with Docker Compose:

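```shell
git clone https://github.com/ArcadeAI/arcade-ai.git
cd arcade-ai/docker
cp env.example .env
# Set your OpenAI key in .env. `sed -i ''` is the macOS/BSD form;
# with GNU sed on Linux, use `sed -i` without the empty ''.
sed -i '' 's/^OPENAI_API_KEY=.*/OPENAI_API_KEY={your OpenAI API key}/' .env
docker compose -p arcade up
# In a second terminal, check that the engine is responding
curl http://localhost:9099/v1/health
```

This gives you a self-hosted environment where you can customize or build new tools, configure logs, and keep data private.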
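Because `docker compose up` can take a while to pull images and start services, scripts often poll the health endpoint before sending traffic. Here is a small standard-library sketch, assuming the engine listens on `localhost:9099` as in the curl check and that a healthy engine answers with HTTP 200; `wait_for_health` is a hypothetical helper.

```python
import time
import urllib.request


def wait_for_health(url: str, timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll url until it returns HTTP 200 (True) or timeout seconds pass (False)."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, and socket timeouts all subclass OSError
            pass  # not healthy yet; keep polling
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Example: wait_for_health("http://localhost:9099/v1/health")
```

Returning a boolean rather than raising lets a deployment script decide whether to retry, alert, or abort.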

Tool calling platforms help organize LLM applications

The OpenAI API spec has effectively become a universal interface for LLM-based applications, enabling drop-in replacements like DeepSeek and tool-calling platforms like Arcade.dev.

By routing your AI requests through Arcade’s endpoint, you gain the ability to intercept function calls and integrate external services (GitHub, Twilio, or any custom-defined tool) without overhauling your codebase.

In short, tool calling bridges the gap between text generation and real-world actions.

Whether you’re sending emails, creating GitHub issues, or posting tweets, frameworks like Arcade empower your AI to handle complex tasks while keeping your application code clean, maintainable, and free from credential juggling.