Why most enterprise AI projects fail — and the patterns that actually work

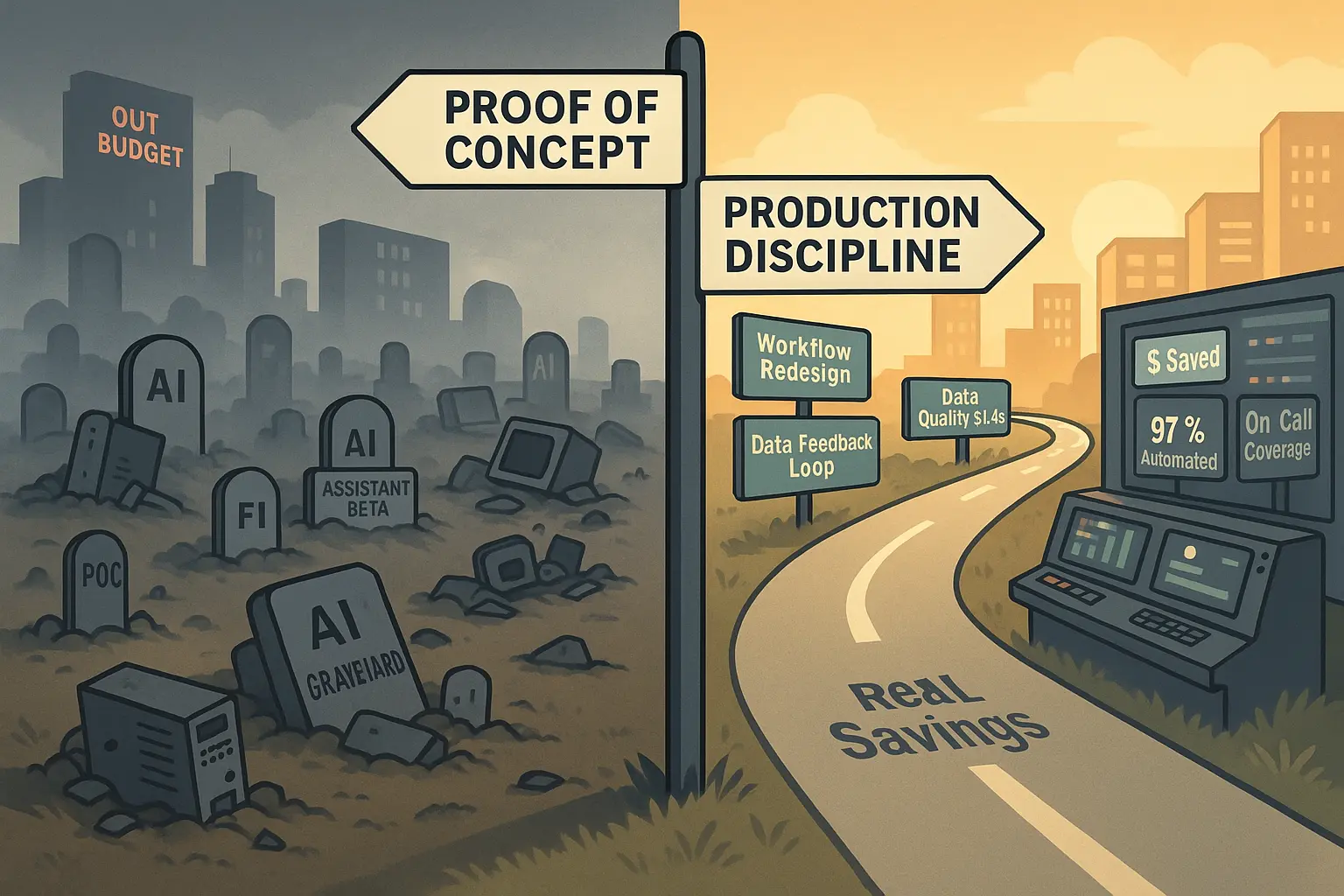

42% of companies abandoned most of their AI initiatives in 2025, up from just 17% in 2024. After analyzing dozens of enterprise deployments, we found four patterns that separate the teams shipping to production from the graveyard of abandoned prototypes.

The statistics are sobering. According to S&P Global Market Intelligence's 2025 survey of over 1,000 enterprises across North America and Europe, 42% of companies abandoned most of their AI initiatives this year, a dramatic spike from just 17% in 2024. The average organization scrapped 46% of AI proof-of-concepts before they reached production.

This isn't just about technical challenges. RAND Corporation's analysis notes that, by some estimates, more than 80% of AI projects fail, twice the failure rate of IT projects that don't involve AI. According to the S&P findings, companies cited cost overruns, data privacy concerns, and security risks as the primary obstacles.

Yet the outliers who succeed aren't just surviving; they're thriving. Lumen Technologies projects $50 million in annual savings from AI tools that save their sales team an average of four hours per week. Air India's AI virtual assistant has fully automated 97% of the more than 4 million customer queries it has handled, avoiding millions in support costs. Microsoft reported $500 million in savings from AI deployments in their call centers alone.

The gap between failure and success isn't about model sophistication or computing power. Our analysis of dozens of enterprise deployments found four distinct patterns that separate the winners from the graveyard of abandoned prototypes.

Why enterprise AI initiatives stall

Pilot paralysis comes first. Organizations launch proof-of-concepts in safe sandboxes but often fail to design a clear path to production. The technology works in isolation, but integration challenges — including secure authentication, compliance workflows, and real-user training — remain unaddressed until executives request the go-live date.

Model fetishism compounds the problem. Engineering teams spend quarters optimizing F1 scores while integration tasks sit in the backlog. When initiatives finally surface for business review, the compliance requirements look insurmountable, and the business case remains theoretical.

Disconnected tribes create organizational friction. Product teams chase features, infrastructure teams harden security, data teams clean pipelines, and compliance officers draft policies — often without shared success metrics or coordinated timelines.

The build-it-and-they-will-come fallacy kills promising projects. A sophisticated model without user buy-in, change management, or front-line champions dies quietly. Contact center summarization engines with 90%+ accuracy scores often gather dust when supervisors lack trust in auto-generated notes and instruct agents to continue typing manually.

Finally, the proliferation of shadow IT creates waste. Cloud billing reports often reveal duplicate vector databases, orphaned GPU clusters, and partially assembled MLOps stacks created by enthusiastic teams without central coordination. These parallel efforts cannibalize data quality and confuse governance.
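A first pass at finding that waste can be automated. The sketch below assumes a hypothetical billing export in which each line item carries a resource ID, a resource type, an optional owner tag, and a monthly cost; real exports from any given cloud provider use different field names. It flags resource types run by more than one team as candidate duplicates and untagged resources as candidate orphans. Both lists are meant for human review, not automatic deletion.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class BillingLine:
    """One line item from a hypothetical cloud billing export."""
    resource_id: str
    resource_type: str          # e.g. "vector_db", "gpu_cluster"
    owner_team: Optional[str]   # None when the resource has no owner tag
    monthly_cost: float


def audit_ai_spend(lines: list) -> dict:
    """Flag resource types run by several teams, plus untagged (orphaned) resources."""
    teams_by_type = defaultdict(set)
    orphaned = []
    for line in lines:
        if line.owner_team is None:
            orphaned.append(line)
        else:
            teams_by_type[line.resource_type].add(line.owner_team)

    # More than one owning team for the same kind of resource suggests parallel stacks.
    duplicated = {
        rtype: sorted(teams)
        for rtype, teams in teams_by_type.items()
        if len(teams) > 1
    }
    return {
        "duplicated_types": duplicated,
        "orphaned_ids": [line.resource_id for line in orphaned],
        "orphaned_monthly_cost": sum(line.monthly_cost for line in orphaned),
    }


report = audit_ai_spend([
    BillingLine("vdb-1", "vector_db", "search", 1200.0),
    BillingLine("vdb-2", "vector_db", "support-bot", 900.0),
    BillingLine("gpu-7", "gpu_cluster", None, 4000.0),
])
print(report)
```

Two vector databases owned by different teams are not necessarily redundant, which is why the output is phrased as candidates for a central platform team to confirm.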

Pattern 1: Solve a painful business problem before you touch a model

The most reliable predictor of success is starting with business pain, not technical capability. Instead of asking "Which models should we deploy?", winning teams identify process bottlenecks that already cost real money.

Lumen Technologies exemplifies this approach. Their sales teams spent four hours researching customer backgrounds for outreach calls. The company saw this as a $50 million annual opportunity — not a machine learning challenge. Only after quantifying that pain did they design Copilot integrations that compress research time to 15 minutes. The result: measurable time savings that fund expansion to adjacent use cases.
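Quantifying the pain can start as back-of-the-envelope arithmetic. The sketch below turns a before/after task time into an annual dollar figure. The 4-hour and 15-minute times come from the Lumen example, but the headcount, weekly task frequency, loaded hourly cost, and 48 working weeks are hypothetical placeholders, so the result is not a reconstruction of Lumen's $50 million projection.

```python
def annual_bottleneck_cost(
    minutes_before: float,
    minutes_after: float,
    tasks_per_person_per_week: float,
    headcount: int,
    loaded_hourly_cost: float,
    working_weeks: int = 48,
) -> float:
    """Estimate the yearly dollar value of shrinking a task from minutes_before to minutes_after."""
    if minutes_after > minutes_before:
        raise ValueError("the new process must not take longer than the old one")
    hours_saved_per_task = (minutes_before - minutes_after) / 60
    hours_saved_per_year = (
        hours_saved_per_task * tasks_per_person_per_week * working_weeks * headcount
    )
    return hours_saved_per_year * loaded_hourly_cost


# Hypothetical inputs: 1,000 sellers, one research task per week, $100/hour loaded cost.
value = annual_bottleneck_cost(
    240, 15, tasks_per_person_per_week=1, headcount=1000, loaded_hourly_cost=100.0
)
print(f"${value:,.0f} per year")  # $18,000,000 per year
```

If the figure stays small even under generous assumptions, that is a signal to pick a different bottleneck before anyone touches a model.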

Air India followed a similar path. Facing outdated customer service technology and rising support costs, they identified a specific constraint: their contact center couldn't scale with passenger growth. The airline built AI.g, their generative AI virtual assistant, to handle routine queries in four languages. The system now processes over 4 million queries with 97% full automation, freeing human agents for complex cases.

McKinsey's 2025 AI survey confirms this pattern: organizations reporting "significant" financial returns are twice as likely to have redesigned end-to-end workflows before selecting modeling techniques.

Pattern 2: Fix the data plumbing first

Modern generative AI hasn't eliminated the old maxim that 80% of machine learning work is data preparation. If anything, the stakes are higher.

In a traditional analytics pipeline, bad training data produces inaccurate batch reports that analysts can debug after the fact. In a retrieval-augmented generation (RAG) system, bad source data leads to hallucinated answers in real-time customer conversations.

Informatica's CDO Insights 2025 survey identifies the top obstacles to AI success: data quality and readiness (43%), lack of technical maturity (43%), and shortage of skills (35%).

Winning programs invert typical spending ratios, earmarking 50-70% of the timeline and budget for data readiness — extraction, normalization, governance metadata, quality dashboards, and retention controls.
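Quality dashboards need numbers to display. As a minimal sketch, assuming records arrive as plain dictionaries with a hypothetical primary key and a list of required fields, the profile below computes per-field null rates and duplicate keys, then gates a batch on them. The 5% null-rate threshold is an arbitrary placeholder, and the sketch covers only one slice of data readiness, not extraction, governance metadata, or retention.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    row_count: int
    null_rates: dict
    duplicate_keys: int

    def passes(self, max_null_rate: float = 0.05, max_duplicates: int = 0) -> bool:
        """Gate a batch before it feeds training or a retrieval index."""
        return (
            all(rate <= max_null_rate for rate in self.null_rates.values())
            and self.duplicate_keys <= max_duplicates
        )


def profile(rows: list, key: str, required: list) -> QualityReport:
    """Compute per-field null rates and the number of duplicate primary keys."""
    n = len(rows)
    null_rates = {}
    for name in required:
        # Treat missing keys, None, and empty strings all as nulls.
        missing = sum(1 for row in rows if row.get(name) in (None, ""))
        null_rates[name] = missing / n if n else 0.0
    keys = [row.get(key) for row in rows]
    return QualityReport(n, null_rates, len(keys) - len(set(keys)))


rows = [
    {"id": "c1", "email": "a@example.com", "country": "IN"},
    {"id": "c2", "email": "", "country": "IN"},
    {"id": "c2", "email": "b@example.com", "country": None},
    {"id": "c3", "email": "c@example.com", "country": "US"},
]
report = profile(rows, key="id", required=["email", "country"])
print(report.null_rates, report.duplicate_keys, report.passes())
# {'email': 0.25, 'country': 0.25} 1 False
```

Publishing these numbers per batch gives the quality dashboard a trend line, so degradation shows up before it reaches a model or a customer conversation.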

Pattern 3: Design for human-AI collaboration, not full automation

Durable deployments prototype the division of labor between humans and machines early. The intuition that augmented intelligence beats pure automation is long-standing, yet enterprise workflows still default to binary thinking: either the machine does the whole task or a person does.

Financial fraud detection provides a quantifiable example. Recent research shows that small batches of analyst corrections, fed back into graph-based models, lift recall by double digits while holding false positives flat.

Microsoft's internal deployment demonstrates the collaborative approach at scale. Their sales team using Copilot achieved 9.4% higher revenue per seller and closed 20% more deals. The key was designing explicit handoffs: Copilot suggests draft responses and summarizes meetings, but sales reps retain control over final customer communications.

The choreography is deliberate: define which actions stay human, expose graceful override paths, and instrument feedback capture as a first-class feature.
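One way to make that choreography concrete is to keep the human/machine split in an explicit, reviewable table rather than scattering it through code. The sketch below is a hypothetical design, not Microsoft's implementation: the action names and the `POLICY` table are illustrative, unknown actions default to human control, a person can override any draft, and every resolved suggestion is logged so edits can later feed evaluation or retraining.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Control(Enum):
    AUTO = "auto"      # the model's draft may be used as-is
    HUMAN = "human"    # a person must approve or edit the draft


# Hypothetical policy table: which actions stay human is a reviewable decision.
POLICY = {
    "summarize_meeting": Control.AUTO,
    "send_customer_email": Control.HUMAN,
}


@dataclass
class Suggestion:
    action: str
    draft: str


@dataclass
class FeedbackLog:
    events: list = field(default_factory=list)

    def record(self, action: str, draft: str, final: str) -> None:
        # Feedback capture as a first-class feature: keep both versions.
        self.events.append(
            {"action": action, "edited": draft != final, "draft": draft, "final": final}
        )


def resolve(s: Suggestion, log: FeedbackLog, human_text: Optional[str] = None) -> Optional[str]:
    """Return the text to act on, or None while a required human decision is pending.

    A reviewer approves a draft unchanged by passing it back as human_text.
    """
    mode = POLICY.get(s.action, Control.HUMAN)  # unknown actions default to human control
    if human_text is not None:
        final = human_text  # override path, available for every action
    elif mode is Control.AUTO:
        final = s.draft
    else:
        return None  # wait until a person reviews the draft
    log.record(s.action, s.draft, final)
    return final
```

Returning `None` for a pending decision is the graceful path: the interface can surface the draft for review instead of failing, and the logged `edited` flag becomes a cheap signal of where the model's drafts fall short.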

Pattern 4: Treat the deployment as a living product

One reason proof-of-concepts die is that nobody volunteers for version 1.1 support. The fourth pattern reframes the entire stack as a product with uptime, drift, and user satisfaction SLAs.

This means standardized observability: event logs, model score distributions, feature null rates, and user feedback hooks that integrate with existing SRE dashboards. When that instrumentation exists, drift detection becomes routine maintenance rather than a crisis response.
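Drift detection on score distributions is often done with the population stability index (PSI), one common choice among several; the article does not prescribe a specific statistic. The sketch below assumes model scores lie in [0, 1], uses ten equal-width bins, and applies the widely used rule of thumb that a PSI above roughly 0.25 signals a significant shift. The 2% null-rate threshold and the function names are hypothetical placeholders to tune per model and feature.

```python
import math


def psi(baseline: list, live: list, bins: int = 10) -> float:
    """Population stability index between two samples of scores in [0, 1]."""
    def shares(values: list) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp so scores of exactly 0.0 or 1.0 land in the first or last bin.
            counts[min(max(int(v * bins), 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = shares(baseline), shares(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def null_rate(values: list) -> float:
    """Fraction of missing values in one feature column."""
    return sum(v is None for v in values) / len(values) if values else 0.0


def drift_alerts(baseline_scores: list, live_scores: list, live_features: dict,
                 psi_limit: float = 0.25, null_limit: float = 0.02) -> list:
    """Return human-readable alerts suitable for an existing SRE dashboard or pager."""
    alerts = []
    score_psi = psi(baseline_scores, live_scores)
    if score_psi > psi_limit:
        alerts.append(f"score distribution shifted (PSI={score_psi:.2f})")
    for name, column in live_features.items():
        rate = null_rate(column)
        if rate > null_limit:
            alerts.append(f"feature {name!r} null rate {rate:.1%}")
    return alerts
```

Running a check like this on a schedule, against a frozen baseline from the last validated release, is what turns drift into a routine ticket rather than a production surprise.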

Ownership closes the loop. Successful teams assign product managers to model services, write explicit SLOs ("ticket summary accuracy >85% and <5-second latency, 95% of the time"), and budget quarterly research spikes.
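An SLO like that is only useful if it can be checked automatically. The sketch below reads the example SLO in one plausible way, which is an assumption: accuracy above 85% on the subset of summaries that humans graded, and at least 95% of requests served in under five seconds. The `Request` fields are hypothetical; in practice they would come from the event logs and user feedback hooks the observability layer already collects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    latency_s: float
    accurate: Optional[bool]  # None when no human graded this summary


def check_slo(requests: list, min_accuracy: float = 0.85,
              max_latency_s: float = 5.0, target: float = 0.95) -> dict:
    """Evaluate one measurement window against the accuracy and latency objectives."""
    graded = [r.accurate for r in requests if r.accurate is not None]
    accuracy = sum(graded) / len(graded) if graded else 0.0
    fast = sum(r.latency_s < max_latency_s for r in requests)
    fast_fraction = fast / len(requests) if requests else 0.0
    return {
        "accuracy": accuracy,
        "fast_fraction": fast_fraction,
        "met": accuracy > min_accuracy and fast_fraction >= target,
    }


window = [Request(1.2, True)] * 9 + [Request(6.0, False)]
print(check_slo(window))
# {'accuracy': 0.9, 'fast_fraction': 0.9, 'met': False}
```

The check fails closed when nothing in the window was graded, on the reasoning that a window without reviewed summaries cannot demonstrate the accuracy objective.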

The real cost of getting it wrong

When enterprise AI fails, the damage extends beyond wasted budgets. Gartner predicts over 40% of agentic AI projects will be canceled by 2027 due to escalating costs, unclear business value, or inadequate risk controls. The reputational cost compounds: each high-profile stall makes the next budget request harder.

These failures share a common thread: the model rarely breaks, but the invisible infrastructure around it buckles under real-world pressure.

Bringing the patterns together

Enterprise programs that beat the failure statistics behave alike. They begin with unambiguous business pain and draft AI specifications only after stakeholders can articulate the cost of the non-AI alternative.

They invest disproportionately in trustworthy, observable data pipelines. They choreograph human oversight as a feature, not an emergency valve. And they run deployments as living products with on-call rotations, version roadmaps, and success metrics tied to real dollars.

If you already have an initiative in flight, audit it against these four dimensions. Which consumes the most time: model tuning, data cleanup, workflow rollout, or operational hardening? The neglected pillar is probably your hidden constraint on next quarter's success.

If you're proposing a new program, try this thought experiment: Write the press release declaring a financial outcome, not a technological milestone. List the datasets that must be governed to make that claim credible. Sketch the user journey showing when humans stay in control. Then book SRE team time for sprint zero. If the outline feels heavy, good — you just discovered work that normally ambushes teams twelve months later.

The enterprise AI playbook is no longer folklore. Organizations like Lumen and Air India have shown that AI can deliver measurable business value when implemented with discipline.

Adopt even one of these patterns, and you tilt the odds away from the silent graveyard of stalled prototypes toward the growing cohort of companies that ship AI to production, measure impact, and fund the next wave with real savings.