Cloudflare: Code Mode Cuts Token Usage by 81%

Cloudflare's Code Mode generates code instead of calling MCP tools directly—cutting token usage by 32% for simple tasks and 81% for complex batch operations.

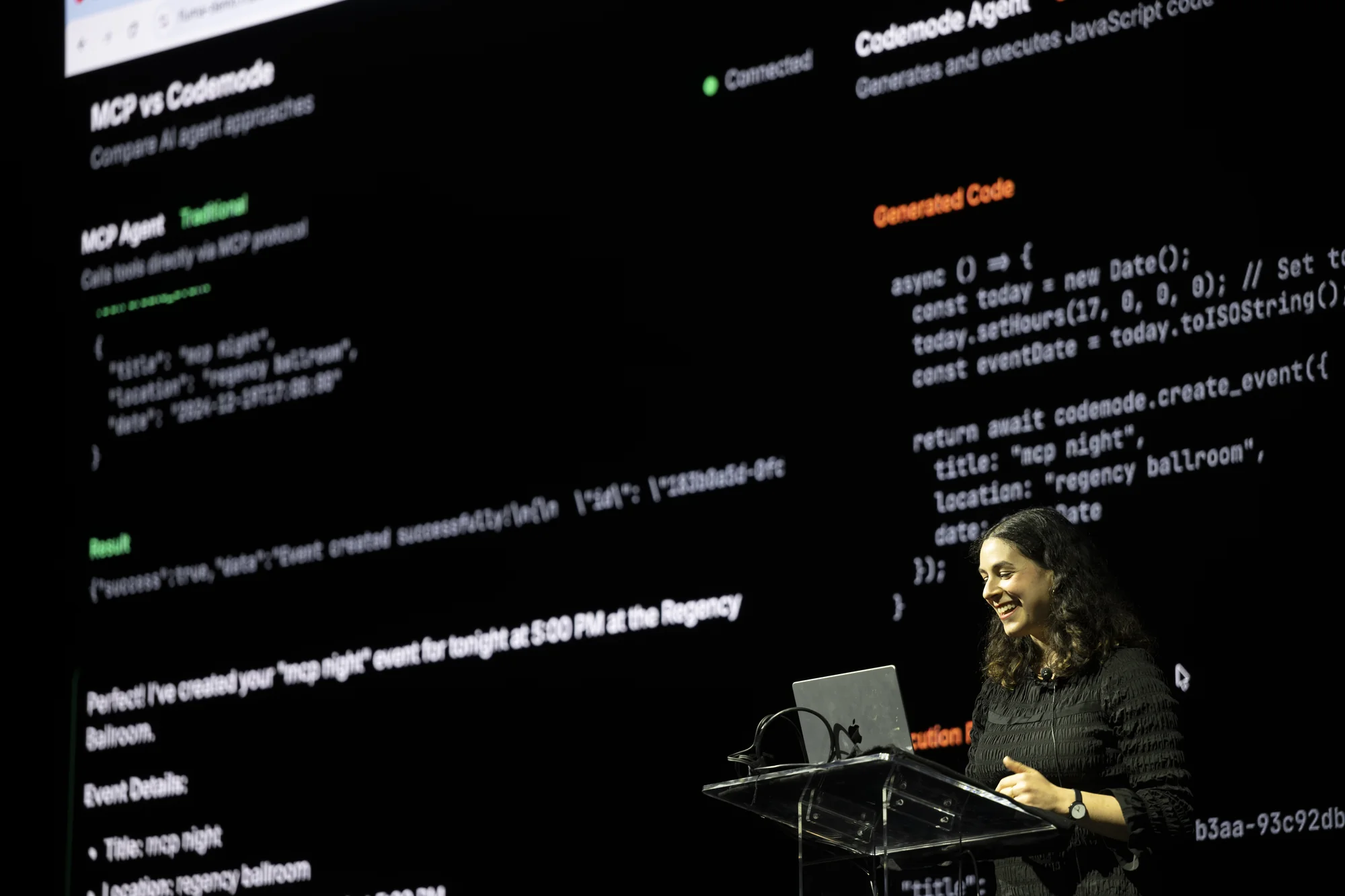

At MCP Night: The Holiday Special, Rita Kozlov demonstrated why the future of MCP might not be tool calling at all—it might be code generation.

This post is part of our MCP Night: The Holiday Special recap series. Read the full recap post here.

Rita, VP of AI & Developers at Cloudflare, took the stage with a question: what if we're thinking about MCP tool calling the wrong way?

Her demo introduced "Code Mode"—an alternative approach where AI agents generate and execute code based on MCP server definitions rather than calling tools directly. The results were dramatic.


The Problem with Tool Calling

MCP's standard pattern is straightforward: an agent decides it needs to perform an action, calls a tool, gets a result, and continues. This works well for simple tasks, but Rita pointed to a fundamental limitation.

When you ask an agent to create 31 calendar events—one for each week in January with AI-generated topics—the standard approach means 31 separate tool calls. Each call requires a round trip, each response consumes tokens, and the agent spends its context window managing state across dozens of interactions.

LLMs have gotten remarkably good at tool calling, Rita acknowledged. Otherwise MCP wouldn't be possible.

But she quoted her colleague Kenton Varda with an analogy that stuck: asking an LLM to master tool calling is like asking Shakespeare to take a crash course in Mandarin and then write a play in it. The result would be good—it's Shakespeare—but it wouldn't be his best work.

Code, on the other hand, is what these models were trained on. It's their native language.

Code Mode in Action

Rita built a demo app called "Fluma"—a playful competitor to Luma for event registration. She started with a simple prompt: create an event for MCP Night tonight at 5pm.

The standard MCP tool-calling agent completed the task. So did the Code Mode agent.

But the Code Mode version had an advantage: it generated JavaScript that could call new Date() to determine the actual current date. The tool-calling agent, lacking that capability, created the event on December 19, 2024—a year in the past.
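A minimal sketch of the kind of code a Code Mode agent might generate for this prompt. The `createEvent` function and the `EventInput` fields are hypothetical stand-ins, since the Fluma MCP server's schema was not shown. The point is only that generated code can read the clock at run time instead of guessing the date.

```typescript
// Hypothetical event shape; the real Fluma schema was not shown.
interface EventInput {
  title: string;
  startsAt: string; // ISO 8601 timestamp
}

// Resolve "today at <hour>" relative to the moment the code runs (local time).
function tonightAt(hour: number, now: Date = new Date()): Date {
  const start = new Date(now);
  start.setHours(hour, 0, 0, 0);
  return start;
}

// Hypothetical stand-in for the MCP-backed tool call.
function createEvent(input: EventInput): EventInput {
  return input;
}

const mcpNight = createEvent({
  title: "MCP Night",
  startsAt: tonightAt(17).toISOString(),
});
console.log(mcpNight);
```

Because `tonightAt` takes `now` as an optional parameter, the same function can be checked against a fixed date without touching the system clock.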

The real test came with the complex prompt: create an event for every week in January 2026 for an AI meetup, all happening at the Cloudflare office at 5pm, on topics relating to MCP, data, and compute.

Both agents raced to complete the task. The tool-calling agent fired off 30+ individual calls. The Code Mode agent generated a loop, iterated over the dates, and made the same API calls through generated code.
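The loop could look roughly like the sketch below. It is an illustration under assumptions, not the code from the demo: `createEvent` and its fields are hypothetical, and the tool call is replaced by an in-memory stand-in. The step between events is a parameter, so the same loop covers a weekly or a daily schedule.

```typescript
// Hypothetical tool input; the real Fluma MCP schema was not shown.
interface EventInput {
  title: string;
  location: string;
  startsAt: string; // ISO 8601 timestamp
}

const created: EventInput[] = [];
function createEvent(input: EventInput): void {
  created.push(input); // stand-in for the call to the MCP server
}

// Dates within one month, starting on the 1st and stepping by `stepDays`,
// each at `hour` local time. `month` is zero-based, as in the Date API.
function datesInMonth(year: number, month: number, stepDays: number, hour: number): Date[] {
  const dates: Date[] = [];
  let day = 1;
  let d = new Date(year, month, day, hour);
  while (d.getMonth() === month) {
    dates.push(d);
    day += stepDays;
    d = new Date(year, month, day, hour); // Date normalizes day overflow
  }
  return dates;
}

const topics = ["MCP", "data", "compute"];
datesInMonth(2026, 0, 7, 17).forEach((date, i) => {
  createEvent({
    title: `AI Meetup: ${topics[i % topics.length]}`,
    location: "Cloudflare office",
    startsAt: date.toISOString(),
  });
});
console.log(`created ${created.length} events`);
```

The model emits this block once; the repeated calls happen inside the sandbox rather than as separate round trips through the model.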

Both created 31 events. But the token consumption told the story.

The Numbers

For the simple single-event task, Code Mode used 32% fewer tokens than direct tool calling.

For the complex 31-event task, Code Mode used 81% fewer tokens.

Rita let that number hang in the air. For enterprise deployments where agents might summarize thousands of customer tickets while calling Salesforce, Slack, and a dozen other services, that efficiency gap compounds quickly.
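To make the arithmetic concrete, here is a small sketch that applies the two reported reductions to a baseline. The baseline of 1,000,000 tokens is a hypothetical figure chosen for easy reading, not a number from the demo.

```typescript
// Tokens remaining after applying a fractional reduction to a baseline.
function tokensAfterReduction(baselineTokens: number, reduction: number): number {
  return Math.round(baselineTokens * (1 - reduction));
}

// Reductions reported in the demo.
const SIMPLE_TASK_REDUCTION = 0.32;
const BATCH_TASK_REDUCTION = 0.81;

// Hypothetical baseline: tokens spent with direct tool calling.
const baselineTokens = 1_000_000;

console.log(tokensAfterReduction(baselineTokens, SIMPLE_TASK_REDUCTION)); // 680000
console.log(tokensAfterReduction(baselineTokens, BATCH_TASK_REDUCTION)); // 190000
```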

How It Works

Rita walked through the architecture. Both approaches start the same way: a request comes in, hits the agent, and routes through Cloudflare's AI Gateway for model access.

The tool-calling agent then directly invokes the MCP server. Code Mode takes a different path: the agent generates code based on the MCP server's schema, then executes that code in a sandboxed Worker. The Worker makes the same calls to the MCP server, but through generated code that can use loops, conditionals, and runtime functions.
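One way to picture the "generate code based on the schema" step is to render each MCP tool definition as a typed function signature that the model can write code against. The sketch below assumes a simplified subset of an MCP tool definition (`name`, `description`, and a flat JSON Schema `inputSchema`); the `createEvent` tool is hypothetical, and this is not Cloudflare's actual implementation.

```typescript
// Simplified subset of an MCP tool definition with a flat JSON Schema input.
interface JsonSchemaProp {
  type: "string" | "number" | "boolean";
}
interface McpTool {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, JsonSchemaProp>;
    required?: string[];
  };
}

// Render one tool as a TypeScript declaration; optional fields get a "?".
function toDeclaration(tool: McpTool): string {
  const required = new Set(tool.inputSchema.required ?? []);
  const fields = Object.entries(tool.inputSchema.properties)
    .map(([key, prop]) => `${key}${required.has(key) ? "" : "?"}: ${prop.type}`)
    .join("; ");
  return (
    `/** ${tool.description} */\n` +
    `declare function ${tool.name}(input: { ${fields} }): Promise<unknown>;`
  );
}

// Hypothetical tool; the real Fluma server's schema was not shown.
const createEventTool: McpTool = {
  name: "createEvent",
  description: "Create an event",
  inputSchema: {
    type: "object",
    properties: {
      title: { type: "string" },
      startsAt: { type: "string" },
      capacity: { type: "number" },
    },
    required: ["title", "startsAt"],
  },
};

console.log(toDeclaration(createEventTool));
```

The resulting declaration goes into the model's context in place of per-call tool descriptions, and the generated program calls `createEvent` like any other function.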

The sandbox is powered by Workers Loader, which Cloudflare recently released. Rita showed the implementation: create an execution ID for tracking, spin up a Worker with the generated code, and execute through a Code Executor binding.
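The flow Rita described (assign an execution ID, load the generated code, run it with access to the tools) can be sketched locally as follows. This is a stand-in, not the Workers Loader API: it uses the `Function` constructor, which provides no isolation, and every name in it (`executeGenerated`, `Tools`, `ExecutionResult`) is hypothetical. It also runs synchronously for brevity, whereas real tool calls would be asynchronous.

```typescript
// Tool bindings handed to the generated code; nothing else is passed in.
type Tools = Record<string, (input: unknown) => unknown>;

interface ExecutionResult {
  executionId: string;
  ok: boolean;
  value?: unknown;
  error?: string;
}

// Run generated code with `tools` as its only argument.
// NOTE: `new Function` is NOT a security boundary. A real deployment would
// load the code into an isolated Worker instead of evaluating it in-process.
function executeGenerated(code: string, tools: Tools): ExecutionResult {
  // Identifier used to correlate logs for this run.
  const executionId = `exec-${Date.now().toString(36)}-${Math.random().toString(36).slice(2, 10)}`;
  try {
    const run = new Function("tools", `"use strict";\n${code}`);
    return { executionId, ok: true, value: run(tools) };
  } catch (err) {
    // Covers both syntax errors in the generated code and runtime failures.
    return { executionId, ok: false, error: String(err) };
  }
}

// Hypothetical generated code: three tool calls issued from one loop.
const generatedCode = `
  let count = 0;
  for (const topic of ["MCP", "data", "compute"]) {
    tools.createEvent({ title: "AI Meetup: " + topic });
    count++;
  }
  return count;
`;

const toolCalls: unknown[] = [];
const execution = executeGenerated(generatedCode, {
  createEvent: (input) => toolCalls.push(input),
});
console.log(execution.executionId, execution.ok, execution.value);
```

Returning a result object rather than throwing keeps a failed generation from crashing the agent; the error string can be fed back to the model for another attempt.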

The key insight is performance. When Rita ran the demo, there was no noticeable cold start despite spinning up a fresh sandbox. Workers can scale to billions of these tiny execution environments, each starting instantly. For an agentic future where users expect immediate responses, that instant spin-up is essential.

The Broader Implication

Rita's demo was a statement about where MCP is heading. The most efficient path forward might be hybrid: agents choosing between direct tool invocation for simple tasks and code generation for complex, multi-step operations.
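A hybrid agent needs some rule for picking a path. The sketch below shows one possible heuristic; the `TaskEstimate` fields and the default threshold of two direct calls are assumptions for illustration, not values from the talk.

```typescript
type Mode = "tool-call" | "code-mode";

interface TaskEstimate {
  expectedToolCalls: number;   // how many tool invocations the plan needs
  needsRuntimeValues: boolean; // e.g. current date, arithmetic, branching
}

// Hypothetical heuristic; the threshold is an assumption, not a measured value.
function chooseMode(task: TaskEstimate, maxDirectCalls: number = 2): Mode {
  if (task.needsRuntimeValues) return "code-mode";
  return task.expectedToolCalls > maxDirectCalls ? "code-mode" : "tool-call";
}

console.log(chooseMode({ expectedToolCalls: 1, needsRuntimeValues: false }));
console.log(chooseMode({ expectedToolCalls: 31, needsRuntimeValues: false }));
```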

Code Mode is currently in closed beta, but Cloudflare is actively expanding access. For teams building MCP-powered applications at scale, the token economics alone make it worth watching.

As Rita wrapped up, she mentioned Cloudflare is hiring a PM to own their AI and MCP story—specifically looking for someone with a founder mentality. For anyone in the audience who fits that description, the invitation was clear.

Try It Yourself

Cloudflare Workers provides the foundation for Code Mode, with Workers Loader enabling the instant sandbox execution. Request access to the Code Mode beta through Cloudflare's developer platform.

At MCP Night: The Holiday Special, Cloudflare demonstrated that the next evolution in MCP efficiency might not come from better models or smarter prompts—it might come from letting agents write code instead of calling tools.

Read our full MCP Night: The Holiday Special recap post here.