AI agent access control: How to manage permissions safely

AI agents are powerful, but without access control, they can create serious risks. Learn how to manage permissions safely with RBAC, OAuth, and Audit Logs.

AI agents can automate workflows, query knowledge bases, and even act as intermediaries between users and sensitive APIs. But with this power comes a critical question: how do we safely manage what these agents are allowed to do?

Without proper permission controls, AI agents risk exposing data, overstepping boundaries, or even becoming a vector for malicious activity. In many ways, we’re at the same stage as early web application development before authentication and RBAC became standard practice. In this article, we’ll explore the problems with unchecked AI agents, best practices for securing them, and how to implement strong controls using Role-Based Access Control (RBAC) and OAuth.

The problem: AI agents without guardrails

Most AI agents operate in dynamic contexts: they fetch information, perform tasks, or integrate with APIs. But giving them broad, ungoverned access introduces several risks:

- Data leakage: Agents trained on or connected to sensitive data sources can inadvertently expose information if not properly restricted. For example, a sales assistant agent with unrestricted database access might accidentally share customer PII (e.g., phone numbers, addresses) in response to a general query like “Show me top customers”. In healthcare, this could mean exposing HIPAA-protected patient records.

- Privilege escalation: Without scoped permissions, an agent could act beyond its intended role, performing actions reserved for administrators. A developer productivity agent that was only supposed to create pull requests could also merge code to production if its permissions are too broad. In cloud environments, an agent meant to provision test resources could unintentionally (or maliciously) spin up production VMs, leading to outages or unexpected costs.

- Compliance violations: Many industries operate under strict rules that require auditability and restricted access. AI agents without RBAC controls can easily break these requirements. In finance, an agent designed to analyze spending patterns could be given unrestricted access to raw financial transactions, violating PCI DSS rules. In legal teams, an AI summarizer might pull in confidential case files that should only be visible to certain partners, creating GDPR or SOX compliance issues.

- Trust erosion: Users lose confidence if agents seem to “know too much” or perform tasks outside their explicit requests. For example, if an HR chatbot is asked a simple benefits question but also surfaces salary information, it can feel invasive. Or, if an internal support agent responds to a query by referencing details from a private Slack conversation, it will make employees wary of using the system altogether.

These risks echo the early days of web applications, before auth, RBAC, and logging matured into must-haves.

A familiar story: Lessons from early web application security

If you look back 15–20 years, web applications faced challenges very similar to those AI agents face now:

- Wide-open permissions: Early web apps often shipped without proper authentication layers. Anyone with a URL could access admin panels or sensitive endpoints. Similarly, many current AI agent frameworks still assume broad system access. By default, an AI support agent might see the entire ticketing database instead of only the subset relevant to the current user.

- Ad hoc controls: Before standardized RBAC/ABAC models, developers implemented access control in a patchwork way, often embedding `if user.is_admin` checks deep in the application code. Teams today often gate agent actions with hardcoded filters or checks scattered across the codebase, which quickly becomes brittle and inconsistent.

- Lack of auditing and visibility: Early systems didn’t log access attempts or actions in a structured way. When something went wrong, teams had little visibility into who accessed what. Without proper logs, it’s hard to answer: “What did this AI agent access on behalf of this user?” This is already a compliance blocker in many industries.

- Compliance lag: Regulations like HIPAA, PCI DSS, and GDPR forced web app developers to take security seriously. Logging, audit trails, and the principle of least privilege became not just best practices but legal requirements. With AI agents, regulators haven’t caught up yet, but they will. Enterprises adopting AI agents today need to get ahead of compliance before auditors start asking hard questions about agent access controls.

The solution for web apps was centralized RBAC and federated authentication. The same shift must now happen for AI agents.

Best practices for handling AI agent permissions

To mitigate risks, teams should adopt several foundational practices:

- Secure authentication: Treat AI agents like human users: they must authenticate and refresh credentials. For example, a connection to an MCP server should use short-lived tokens instead of static API keys, which limits the damage if a credential leaks.

- Enforce least privilege: Move beyond a blanket “agent” role. Agents should be granted only the minimum set of permissions necessary to do their job. Instead of giving them “superuser” credentials, configure roles that are narrow, tenant-aware, and time-limited. For example, a customer support bot may only have tickets:read and tickets:comment but it cannot touch billing information because those capabilities aren’t in its role.

- Use OAuth scopes with explicit consent: Let users and admins decide what an agent can do on their behalf using OAuth scopes. When a user or admin approves the agent, they can clearly see which actions are being requested and decide exactly what to allow. This ensures privileges are requested, visible, and revocable. For example, a scheduling agent might be able to read calendars and send email, but unless the user approves the send_email scope, it is restricted to read_calendar access.

- User-to-agent delegation: Agents should inherit the permissions of the specific user they represent. For example, when a sales rep asks an AI agent to fetch pipeline details, the agent should access only what the rep could see in Salesforce, nothing more.

- Rely on short-lived, revocable credentials: Permanent keys or long-lived tokens are dangerous in the context of agents. Instead, issue temporary access tokens that expire quickly, can be refreshed as needed, and can be revoked instantly if misused. A token valid for just 10 minutes limits exposure; if something suspicious happens, you can cut off the agent immediately without affecting the rest of the system. Ensure that secrets are never passed in prompts. Attach them server-side and store them in a secure location.

- Log every action for accountability: Log every action an agent takes. Audit entries should capture the initiating user, the acting agent, the resource involved, and the outcome. For example, if an AI finance assistant generates a quarterly report, the audit trail should read: User X → Agent Y → finance:report:export → Org 123 → timestamp. Forwarding these logs to monitoring tools makes it possible to detect anomalies, satisfy compliance, and investigate incidents.

- Add human approval for critical operations: Even well-trained agents shouldn’t have free rein over destructive or high-risk actions. Build in checkpoints where a human must confirm, and in some cases re-authenticate, before the operation proceeds. Always capture the approver’s identity in the audit log. For example, if an AI DevOps bot suggests restarting a production cluster, the restart should only happen once an admin explicitly approves it with MFA.
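Several of the practices above (least privilege, user-to-agent delegation, consented scopes, and human approval) can be combined into a single authorization decision. The following is a minimal sketch, not a definitive implementation: the `AgentRequest` shape, the `authorize` function, and the `HIGH_RISK` action names are all hypothetical and chosen only for illustration.

```typescript
// Hypothetical types for illustration; none of these names come from a real SDK.
type Permission = string;

interface AgentRequest {
  action: Permission;              // e.g. "tickets:comment"
  agentPermissions: Permission[];  // granted by the agent's role (least privilege)
  userPermissions: Permission[];   // what the delegating user is allowed to do
  consentedScopes: Permission[];   // scopes the user or admin explicitly approved
  approvedBy?: string;             // identity of a human approver, if any
}

type Decision = { allowed: true } | { allowed: false; reason: string };

// Actions treated as high-risk in this sketch (an assumption, not a standard list).
const HIGH_RISK: ReadonlySet<Permission> = new Set(["cluster:restart"]);

// An action is allowed only if the agent's role, the delegating user, and the
// consented scopes all include it; high-risk actions also need a named approver.
function authorize(req: AgentRequest): Decision {
  if (!req.agentPermissions.includes(req.action)) {
    return { allowed: false, reason: "not in agent role" };
  }
  if (!req.userPermissions.includes(req.action)) {
    return { allowed: false, reason: "user lacks permission" };
  }
  if (!req.consentedScopes.includes(req.action)) {
    return { allowed: false, reason: "scope not consented" };
  }
  if (HIGH_RISK.has(req.action) && !req.approvedBy) {
    return { allowed: false, reason: "human approval required" };
  }
  return { allowed: true };
}
```

Returning a reason alongside each denial keeps the decision easy to record in an audit log, including the approver’s identity when one is present.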

How to secure AI agents

When you bring AI agents into your application, you should think about them the same way you think about human users: they need to authenticate, be assigned roles and permissions, and have their activity logged. With WorkOS, you can implement this in a few steps.

1. Know who is trying to gain access

The first step is making sure agents have a strong identity in your system. How you authenticate them depends on how they interact:

- User-facing agents (acting on behalf of people): Use WorkOS Connect to authenticate the agent through OAuth. This ensures that the agent only acts within the permissions of the user who authorized it. For example, a support agent helping a logged-in employee will inherit that employee’s access context.

- Agent-to-agent or backend communication: If an agent is calling another service or API directly, use Machine-to-Machine OAuth (M2M). Instead of static API keys, M2M provides short-lived tokens that are scoped to the agent’s purpose, reducing risk and making credential rotation automatic.

- Secure your MCP server: If you are implementing a Model Context Protocol (MCP) server, you can use AuthKit to securely manage access with minimal effort. WorkOS AuthKit supports OAuth 2.1 as a compatible authorization server for MCP apps, based on the latest MCP protocol specification. This enables fine-grained authorization for agentic applications and workflows. If you already have an existing authentication system in your application, you can use Standalone Connect to integrate AuthKit’s OAuth capabilities with your MCP server while preserving your current authentication stack. For more details, check out our MCP docs.
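Whichever flow issues the token, the receiving service still has to check that it is short-lived, unrevoked, and scoped for the requested action. The sketch below illustrates that check in plain TypeScript. It assumes the token’s signature has already been verified elsewhere, and the `AgentToken` shape, `isTokenUsable`, and the 10-minute lifetime cap are assumptions for illustration rather than part of any WorkOS API.

```typescript
// Hypothetical, already-verified token claims (signature checking is out of scope here).
interface AgentToken {
  tokenId: string;    // unique ID, used for revocation lookups
  subject: string;    // the agent's identity
  scopes: string[];   // what the token was issued for
  issuedAt: number;   // epoch seconds
  expiresAt: number;  // epoch seconds
}

// Assumed policy: reject tokens issued with a lifetime longer than 10 minutes.
const MAX_LIFETIME_SECONDS = 10 * 60;

function isTokenUsable(
  token: AgentToken,
  requiredScope: string,
  nowSeconds: number,
  revokedTokenIds: ReadonlySet<string>,
): boolean {
  if (revokedTokenIds.has(token.tokenId)) return false;  // revoked explicitly
  if (nowSeconds >= token.expiresAt) return false;       // expired
  if (token.expiresAt - token.issuedAt > MAX_LIFETIME_SECONDS) {
    return false;                                        // not short-lived
  }
  return token.scopes.includes(requiredScope);           // scoped to the action
}
```

Passing the current time and the revocation set as parameters keeps the function pure, which makes the expiry and revocation paths straightforward to test.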

2. Know what they are allowed to do

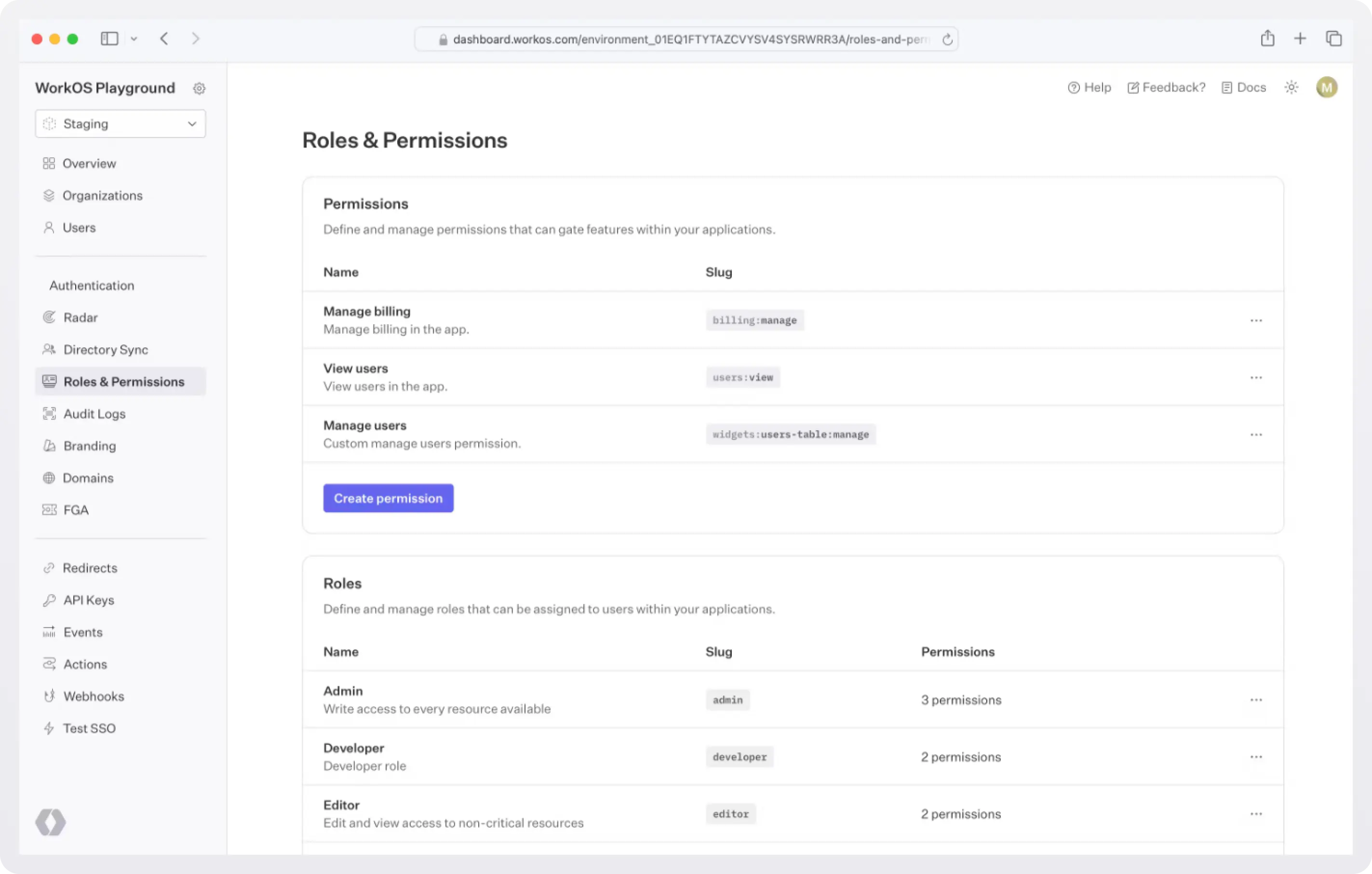

Once an agent is authenticated, you need to decide what it can do. WorkOS RBAC makes this straightforward using roles and permissions.

- Define roles: Create roles like `support_agent`, `analytics_agent`, or `hr_assistant`. Each role bundles together one or more permissions.

- Create granular permissions: Permissions should be specific actions, like `tickets:read`, `tickets:comment`, or `reports:generate`.

- Assign multiple roles: Agents can carry more than one role if needed. For instance, an “operations assistant” could combine the `support_agent` and `analytics_agent` roles.

WorkOS Roles and Permissions lets you manage this centrally, so you don’t end up with hardcoded checks in your codebase. Instead, your application enforces permissions dynamically, and you can adjust roles without redeploying.
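To make the role and permission model concrete, here is a small sketch of how multiple roles can resolve to one set of permissions. It is an illustration only: in practice the mapping would be managed centrally rather than in code, and the `ROLE_PERMISSIONS` table, the `benefits:read` permission, and the helper names are assumptions.

```typescript
// Illustrative role-to-permission table. The role names appear in this article;
// the exact mapping (and "benefits:read") is assumed for the example.
const ROLE_PERMISSIONS: Record<string, readonly string[]> = {
  support_agent: ["tickets:read", "tickets:comment"],
  analytics_agent: ["reports:generate"],
  hr_assistant: ["benefits:read"],
};

// Union of the permissions granted by every role the agent carries.
// Unknown roles contribute nothing, so a typo never grants access.
function permissionsFor(roles: readonly string[]): Set<string> {
  const granted = new Set<string>();
  for (const role of roles) {
    for (const permission of ROLE_PERMISSIONS[role] ?? []) {
      granted.add(permission);
    }
  }
  return granted;
}

function can(roles: readonly string[], permission: string): boolean {
  return permissionsFor(roles).has(permission);
}

// An "operations assistant" combining two roles:
const operationsAssistant = ["support_agent", "analytics_agent"];
```

With this table, `can(operationsAssistant, "reports:generate")` is true, while `can(operationsAssistant, "benefits:read")` is false because neither role grants it.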

After you configure roles and permissions in the dashboard, every time a user/agent logs in, they will get a JWT with their corresponding roles.

Next.js example:

If the permissions include billing:manage, the app can display the billing info.
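The check described above can be sketched in plain TypeScript, independent of Next.js or any SDK. This sketch assumes the JWT has already been verified and decoded on the server; the `SessionClaims` shape and the `hasPermission` and `visibleSections` helpers are hypothetical names used for illustration.

```typescript
// Assumed shape of the decoded, already-verified JWT claims.
interface SessionClaims {
  sub: string;             // user or agent identifier
  role?: string;
  permissions?: string[];  // e.g. ["billing:manage"]
}

// True only when the claims explicitly list the permission.
// A missing permissions claim is treated as "no permissions".
function hasPermission(claims: SessionClaims, permission: string): boolean {
  return claims.permissions?.includes(permission) ?? false;
}

// Decide which sections of a page to render for this session.
function visibleSections(claims: SessionClaims): string[] {
  const sections = ["overview"];
  if (hasPermission(claims, "billing:manage")) {
    sections.push("billing");
  }
  return sections;
}
```

Defaulting to false when the claim is absent means a malformed or stripped-down token fails closed instead of exposing billing data.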

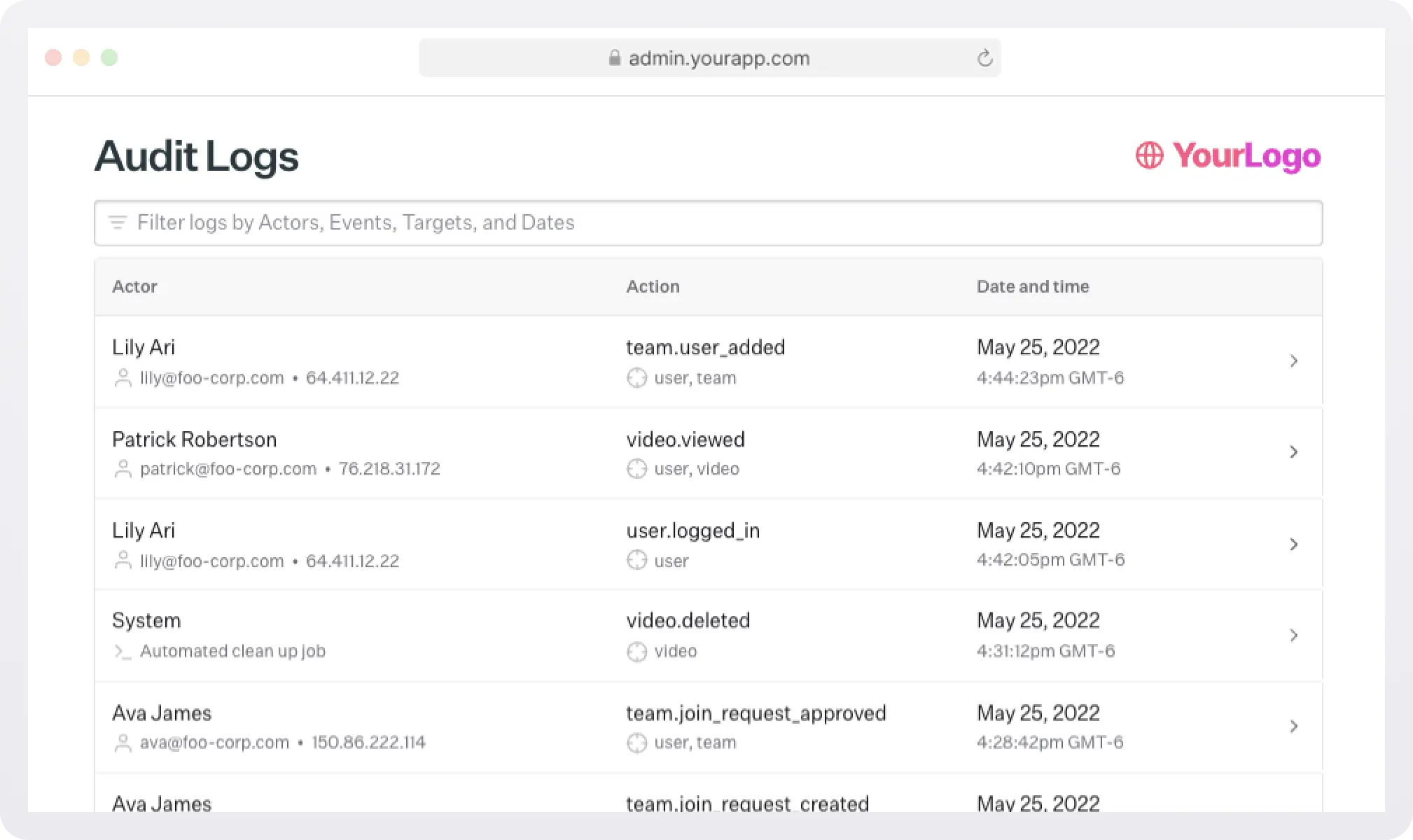

3. Know what they did

Finally, every action an AI agent takes should be logged just as a human user’s would be. With WorkOS Audit Logs, you can:

- Capture every action: Log which agent acted, on behalf of which user (if applicable), what resource was touched, and when.

- Support compliance and security: Logs provide the audit trail you need for SOC 2, HIPAA, or GDPR, and also make it easier to detect unusual behavior.

- Export to monitoring tools: Stream logs to your SIEM or analytics system for real-time alerting and analysis.

Example audit entry:
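The entry below is a sketch rather than the exact WorkOS Audit Logs schema: the field names, the `buildAuditEntry` and `formatTrail` helpers, and the IDs are assumptions for illustration. It records the acting agent, the user it acted for, the action, the organization, the outcome, and the time.

```typescript
// Hypothetical audit entry shape; field names are illustrative only.
interface AuditEntry {
  occurredAt: string;                         // ISO 8601 timestamp
  actor: { type: "agent"; id: string };       // the agent that acted
  onBehalfOf?: { type: "user"; id: string };  // initiating user, if applicable
  action: string;                             // e.g. "finance:report:export"
  organizationId: string;
  outcome: "success" | "denied" | "error";
}

function buildAuditEntry(
  params: {
    agentId: string;
    userId?: string;
    action: string;
    organizationId: string;
    outcome: AuditEntry["outcome"];
  },
  now: Date = new Date(),
): AuditEntry {
  return {
    occurredAt: now.toISOString(),
    actor: { type: "agent", id: params.agentId },
    // Only include onBehalfOf when a user actually initiated the action.
    ...(params.userId ? { onBehalfOf: { type: "user" as const, id: params.userId } } : {}),
    action: params.action,
    organizationId: params.organizationId,
    outcome: params.outcome,
  };
}

// Render the "User → Agent → action → Org → timestamp" trail for humans.
function formatTrail(entry: AuditEntry): string {
  const user = entry.onBehalfOf ? entry.onBehalfOf.id : "(no user)";
  return [user, entry.actor.id, entry.action, entry.organizationId, entry.occurredAt].join(" → ");
}
```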

This makes it clear who did what, when, and with what authority, which is the foundation of secure agent operations.

Putting it all together

By combining these three layers (authentication, role-based permissions, and audit logs), you ensure AI agents in your app are treated with the same rigor as human users:

- They prove their identity (WorkOS Connect, M2M, MCP).

- They operate within clearly defined limits (WorkOS RBAC).

- Their actions are fully traceable (WorkOS Audit Logs).

This layered approach closes the gaps that make AI agents risky, while giving you the flexibility to adopt them in production systems with confidence.

Conclusion

AI agents are too powerful to leave unchecked. Without strict permission management, they risk exposing sensitive data, violating compliance, or eroding user trust. The solution lies in applying the same security patterns we already use for humans: authentication, least privilege, and role-based access control.

WorkOS makes it simple to implement these controls. By adopting these practices, you ensure that your AI agents remain not only useful but also safe and compliant.